Project Non-Photorealistic Rendering for Virtual Reality Applications

M.Sc. Adrian Kreskowski

M.Sc. Gareth Rendle

M.Sc. Sebastian Stickert

Prof. Dr. Bernd Fröhlich

Motivation

The term Non-Photorealistic Rendering (NPR) refers to a family of rendering techniques that produce stylized, simplified or abstract images based on 3D geometry. Unlike photorealistic rendering, their purpose is not to simulate the real world in as much detail as possible, but to abstract objects within a specific context. Examples include the visual simplification of architecture and the highlighting of geometric features such as edges and corners [1,2], the creation of blueprints [6] or exploded view diagrams [4] for models of complex mechanical parts, and the transformation of 3D worlds into distinct works of art with a visually consistent style [5,8,9,10].

One of the main challenges in creating NPR effects is designing algorithms whose stylizations remain consistent across different viewing perspectives, which is needed for stereoscopic perception [9], and plausible over time, for example when models are animated [3,7].

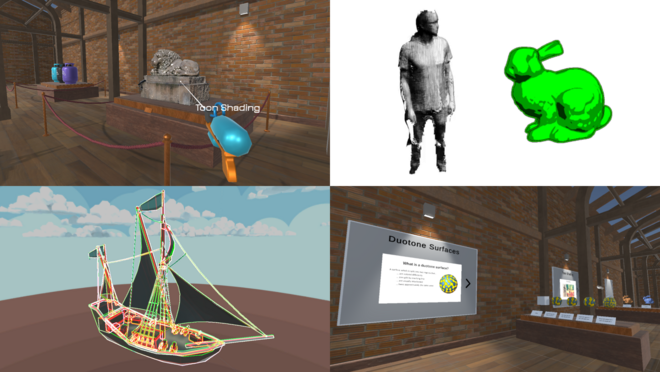

Clockwise from top left: in the NPR Gallery, a social VR application, users could apply NPR effects to virtual objects with a gun metaphor. Hatching and outline effects are shown applied to a volumetric avatar and the Stanford bunny, respectively. The NPR Gallery also educated virtual visitors about various NPR effects. Blueprint rendering, applied to a ship model, allows the viewer to see geometry that would otherwise be occluded.

Description

In this project, we will review the NPR literature, with a focus on whether existing algorithms are suitable for a variety of virtual reality applications. To support a wide range of operating systems, such as Windows, Linux and Android, as well as different display devices, such as head-mounted displays and projection-based systems, the techniques will be implemented entirely in Unity, using hand-crafted shader pipelines and Unity's abstract graphics API.

Results

Initially, each project member chose one NPR effect from the literature to present to the group and implement in Unity. As well as collecting shared knowledge and building up a project code base, this led to the development of the NPR Gallery, a social virtual reality application where users could learn about NPR effects from bite-sized, exhibit-style information points and apply NPR effects to virtual objects. The NPR Gallery, shown above, was part of the University's annual Summaery showcase, allowing guests to learn about and have fun with NPR effects in VR.

In the second part of the project, we investigated how NPR effects could be applied to real-time volumetric avatars. Our project members worked on implementations of toon shading, outlines, and real-time hatching that were applied to avatars using the Unity rendering pipeline.

We envisage that the work undertaken in this project will pave the way for user studies that assess how the application of NPR effects may be able to improve the user's experience in social virtual reality applications.

Literature

[1] Gooch, A., Gooch, B., Shirley, P., Cohen, E. (1998): A Non-Photorealistic Lighting Model for Automatic Technical Illustration, SIGGRAPH '98: Proceedings of the 25th Annual Conference on Computer Graphics and Interactive Techniques, pp. 447-452.

[2] Klein, A. W., Li, W., Kazhdan, M. M., Corrêa, W. T., Finkelstein, A., Funkhouser, T. A. (2000): Non-Photorealistic Virtual Environments, SIGGRAPH '00: Proceedings of the 27th Annual Conference on Computer Graphics and Interactive Techniques, pp. 527-534.

[3] Kalnins, R. D., Davidson, P. L., Markosian, L., Finkelstein, A. (2003): Coherent Stylized Silhouettes, ACM Transactions on Graphics (Proc. SIGGRAPH) 22, pp. 856-861.

[4] Li, W., Agrawala, M., Salesin, D. (2004): Interactive Image-Based Exploded View Diagrams, Proceedings of Graphics Interface 2004, pp. 203-212.

[5] Santella, A. (2005): The Art of Seeing: Visual Perception In Design and Evaluation of Non-Photorealistic Rendering, Dissertation, New Brunswick, New Jersey.

[6] Nienhaus, M. (2005): Real-Time Non-Photorealistic Rendering Techniques For Illustrating 3D Scenes And Their Dynamics, Dissertation, Potsdam.

[7] Chen, J., Turk, G., MacIntyre, B. (2012): A Non-Photorealistic Rendering Framework with Temporal Coherence for Augmented Reality, 2012 IEEE International Symposium on Mixed and Augmented Reality (ISMAR), Atlanta, GA, pp. 151-160.

[8] Hegde, S., Gatzidis, C., Tian, F. (2012): Painterly Rendering Techniques: A State-of-the-Art Review of Current Approaches, Computer Animation & Virtual Worlds 24, pp. 43-64.

[9] Lee, Y., Kim, Y., Kang, H., Lee, S. (2013): Binocular Depth Perception of Stereoscopic 3D Line Drawings, Proceedings of the ACM Symposium on Applied Perception, Dublin, Ireland, pp. 31-34.

[10] Chiu, C.-C., Lo, Y.-H., Lee, R.-R., Chu, H.-K. (2015): Tone- and Feature-Aware Circular Scribble Art, Computer Graphics Forum 34.