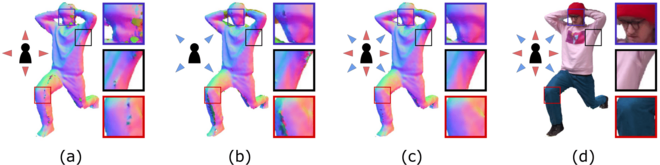

We propose a volumetric avatar capture configuration where cameras are assigned to one of two spatio-temporally offset capture groups, group A (red triangles, image a) and group B (blue triangles, image b). Our reconstruction pipeline consumes images from alternating capture groups and fuses surfaces from previous frames into the current reconstruction frame, mitigating temporal artefacts (c). Colors from previous frames are also blended into the texture applied to the geometry (d).

Abstract

RGBD cameras can capture users and their actions in the real world for reconstruction of photo-realistic volumetric avatars that allow rich interaction between spatially distributed telepresence parties in virtual environments. In this paper, we present and evaluate a system design that enables volumetric avatar reconstruction at increased frame rates. We demonstrate that we can overcome the limited capturing frame rate of commodity RGBD cameras such as the Azure Kinect by dividing a set of cameras into two spatio-temporally offset reconstruction groups and implementing a real-time reconstruction pipeline to fuse the temporally offset RGBD image streams. Comparisons of our proposed system against capture configurations possible with the same number of RGBD cameras indicate that it is beneficial to use a combination of spatially and temporally offset RGBD cameras, allowing increased reconstruction frame rates and scene coverage while producing temporally consistent volumetric avatars.
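The frame-rate gain from temporally offset capture groups can be illustrated with a short timing sketch. This is not the system's implementation: the function `interleaved_schedule`, its parameters, and the assumption that the two groups are triggered exactly half a frame period apart are illustrative choices. The 30 fps figure is the maximum capture rate of the Azure Kinect.

```python
from fractions import Fraction


def interleaved_schedule(camera_fps, num_groups, num_frames):
    """Return sorted (timestamp_seconds, group_label) capture events.

    Hypothetical helper: assumes the groups are triggered with an even
    temporal offset of one frame period divided by the number of groups.
    """
    period = Fraction(1, camera_fps)   # time between two frames of one camera
    offset = period / num_groups       # assumed trigger offset between groups
    events = []
    for frame in range(num_frames):
        for g in range(num_groups):
            label = chr(ord("A") + g)  # group 0 -> "A", group 1 -> "B"
            events.append((frame * period + g * offset, label))
    events.sort()
    return events


# Two groups of 30 fps cameras, first three frames of each group.
schedule = interleaved_schedule(camera_fps=30, num_groups=2, num_frames=3)
for t, group in schedule:
    print(f"{float(t) * 1000:6.2f} ms  group {group}")

# Rate at which new images reach the reconstruction pipeline.
effective_fps = 1 / (schedule[1][0] - schedule[0][0])
print(f"effective input rate: {float(effective_fps):.0f} Hz")
```

Under these assumptions the pipeline receives images alternately from group A and group B, so new input arrives at twice the rate of any single camera, while each image set covers only the viewpoints of one group. This is why surfaces and colors from the previous frame are fused into the current one.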

Publication

Rendle, G., Kreskowski, A., Froehlich, B.

Volumetric Avatar Reconstruction with Spatio-Temporally Offset RGBD Cameras

2023 IEEE Conference on Virtual Reality and 3D User Interfaces (VR). DOI: 10.1109/VR55154.2023.00023.

[preprint][supplementary video]