Overview

Big data visualization has become an important and challenging research topic in recent years. The growing availability of different scanning techniques produces very large data sets of the real world, on the order of billions of primitives. We are developing algorithms and strategies to handle different types of large data sets in an integrated rendering system.

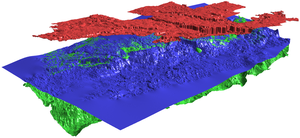

We developed a ray casting-based rendering system for the visualization of geological subsurface models consisting of multiple highly detailed height fields. Based on a shared out-of-core data management system, we virtualize the access to the height fields, allowing us to treat the individual surfaces at different local levels of detail. The visualization of an entire stack of height-field surfaces is accomplished in a single rendering pass using a two-level acceleration structure for efficient ray intersection computations. This structure combines a minimum-maximum quadtree for empty-space skipping and a sorted list of depth intervals to restrict ray intersection searches to relevant height fields and depth ranges. We demonstrate that our system is able to render multiple height fields consisting of hundreds of millions of points in real time.
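The two parts of the acceleration structure described above can be illustrated with a minimal CPU-side sketch. It is not the system's implementation: the function names (`build_minmax_pyramid`, `can_skip`, `relevant_fields`), the square power-of-two height field, and the tuple layout of the depth intervals are all assumptions made for illustration.

```python
def build_minmax_pyramid(heights):
    """Build min-max levels for a square 2^k x 2^k height field (list of rows).

    Level 0 stores (min, max) per sample; each coarser level merges 2x2 cells,
    so the last level is a single cell bounding the whole field.
    """
    n = len(heights)
    assert n > 0 and n & (n - 1) == 0 and all(len(r) == n for r in heights)
    level = [[(h, h) for h in row] for row in heights]
    levels = [level]
    while len(level) > 1:
        half = len(level) // 2
        coarser = []
        for y in range(half):
            row = []
            for x in range(half):
                block = [level[2 * y + dy][2 * x + dx]
                         for dy in (0, 1) for dx in (0, 1)]
                row.append((min(b[0] for b in block),
                            max(b[1] for b in block)))
            coarser.append(row)
        levels.append(coarser)
        level = coarser
    return levels


def can_skip(levels, level, x, y, ray_lo, ray_hi):
    """True if the ray's height range over a cell misses the cell's slab."""
    lo, hi = levels[level][y][x]
    return ray_hi < lo or ray_lo > hi


def relevant_fields(intervals, ray_lo, ray_hi):
    """Select height fields whose depth interval overlaps [ray_lo, ray_hi].

    intervals: list of (lo, hi, field_id), assumed sorted by lo.
    """
    hits = []
    for lo, hi, field_id in intervals:
        if lo > ray_hi:
            break  # sorted by lo, so no later interval can overlap
        if hi >= ray_lo:
            hits.append(field_id)
    return hits
```

A ray marcher would test coarse cells first with `can_skip` and descend only where the ray's height range overlaps the cell, then use `relevant_fields` to limit the intersection search to the surfaces that can actually be hit in that depth range.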

Large Volumes

Ray Casting of Multiple Multi-Resolution Volume Datasets

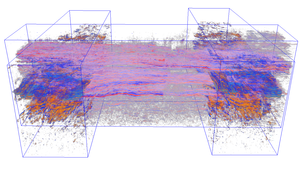

We developed a GPU-based volume ray casting system for rendering multiple arbitrarily overlapping multi-resolution volume data sets. Our efficient volume virtualization scheme is based on shared resource management, which can simultaneously deal with a large number of multi-gigabyte volumes. BSP volume decomposition of the bounding boxes of the cube-shaped volumes is used to identify the overlapping and non-overlapping volume regions. The resulting volume fragments are extracted from the BSP tree in front-to-back order for rendering. The BSP tree needs to be updated only if individual volumes are moved, which is a significant advantage over costly depth peeling procedures or approaches that use sorting on the octree brick level.
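To illustrate why a BSP tree yields fragments in front-to-back order without per-frame sorting, here is a small sketch. It assumes axis-aligned split planes and a hypothetical `BSPNode` type whose leaves list the volumes overlapping that fragment; the actual system's data layout is not described in this text.

```python
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass
class BSPNode:
    # Inner node: axis-aligned split plane (axis index, position), two children.
    # Leaf node: ids of the volumes that overlap this fragment.
    axis: int = 0
    position: float = 0.0
    below: Optional["BSPNode"] = None
    above: Optional["BSPNode"] = None
    volumes: Tuple[str, ...] = ()

    def is_leaf(self):
        return self.below is None and self.above is None


def front_to_back(node, eye, out=None):
    """Collect leaf fragments in front-to-back order as seen from `eye`."""
    if out is None:
        out = []
    if node is None:
        return out
    if node.is_leaf():
        out.append(node.volumes)
        return out
    # The child on the same side of the plane as the eye is visited first.
    if eye[node.axis] < node.position:
        near, far = node.below, node.above
    else:
        near, far = node.above, node.below
    front_to_back(near, eye, out)
    front_to_back(far, eye, out)
    return out


# Two volumes overlapping along x: A covers [0, 2], B covers [1, 3].
tree = BSPNode(axis=0, position=1.0,
               below=BSPNode(volumes=("A",)),
               above=BSPNode(axis=0, position=2.0,
                             below=BSPNode(volumes=("A", "B")),
                             above=BSPNode(volumes=("B",))))
```

Only the traversal depends on the eye position; the tree itself stays valid while the camera moves and has to be rebuilt only when a volume is moved.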

A Flexible Multi-Volume Shader Framework for Arbitrarily Intersecting Multi-Resolution Datasets

We present a powerful framework for 3D-texture-based rendering of multiple arbitrarily intersecting volumetric datasets. Each volume is represented by a multi-resolution octree-based structure, and we use out-of-core techniques to support extremely large volumes. Users define a set of convex polyhedral volume lenses, which may be associated with one or more volumetric datasets. The volumes or the lenses can be interactively moved around while the region inside each lens is rendered using interactively defined multi-volume shaders. Our rendering pipeline splits each lens into multiple convex regions such that each region is homogeneous and contains a fixed number of volumes. Each such region is further split by the brick boundaries of the associated octree representations. The resulting puzzle of lens fragments is sorted in front-to-back or back-to-front order using a combination of a view-dependent octree traversal and a GPU-based depth peeling technique. Our current implementation uses slice-based volume rendering and allows interactive roaming through multiple intersecting multi-gigabyte volumes.
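The view-dependent octree traversal mentioned above can be sketched with a well-known ordering trick; whether the framework uses exactly this scheme is not stated here, so treat it as an assumption. Children are indexed with bit 0 for x, bit 1 for y, and bit 2 for z (a convention chosen for this example), and `child_order` is a hypothetical helper name.

```python
def child_order(center, eye, back_to_front=False):
    """Return the 8 child indices of an octree node in visibility order.

    Child index bits: bit 0 set means x >= center x, bit 1 likewise for y,
    bit 2 likewise for z.
    """
    # Octant of the node that contains the eye (or faces it most directly).
    nearest = sum(1 << axis for axis in range(3) if eye[axis] >= center[axis])
    # XOR with 0..7 visits children by increasing set of planes that
    # separate them from the eye, which is a valid front-to-back order.
    order = [nearest ^ m for m in range(8)]
    return order[::-1] if back_to_front else order
```

Applying the same function recursively at every node gives a sorted brick sequence for the whole octree; reversing the list yields the back-to-front order needed for slice-based compositing.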

We have developed a hierarchical paging scheme for handling very large volumetric data sets at interactive frame rates. Our system trades texture resolution for speed and uses effective prediction strategies. We have tested our approach on datasets of up to 16 GB and show that it works well with less than 500 MB of main memory. Our approach makes it feasible to deal with these volumes on desktop machines.
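The core idea of paging bricks under a fixed memory budget can be sketched as follows. This is a simplified stand-in, not the scheme itself: it uses plain least-recently-used eviction and omits the prediction strategies and resolution fallback, and `BrickCache` and the `load_brick` callback are hypothetical names.

```python
from collections import OrderedDict


class BrickCache:
    """Keep recently used bricks in memory without exceeding a byte budget."""

    def __init__(self, budget_bytes, load_brick):
        self.budget = budget_bytes
        self.load_brick = load_brick  # hypothetical callback: key -> bytes
        self.used = 0
        self.bricks = OrderedDict()   # least recently used entry comes first

    def get(self, key):
        if key in self.bricks:
            self.bricks.move_to_end(key)  # mark as most recently used
            return self.bricks[key]
        data = self.load_brick(key)       # e.g. read from disk
        self.bricks[key] = data
        self.used += len(data)
        # Evict least recently used bricks until the budget is met again,
        # but never evict the brick that was just requested.
        while self.used > self.budget and len(self.bricks) > 1:
            _, old = self.bricks.popitem(last=False)
            self.used -= len(old)
        return data
```

A renderer built on such a cache could request a coarser octree level whenever the fine-level brick is not yet resident, which is one way to trade texture resolution for speed.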

Large Point Clouds

In the context of the European research project 3D-Pitoti, we are developing a prototype system for appearance-preserving and output-sensitive real-time rendering of large point clouds.