This is our initial post documenting the work on the project “Memories of a Syrian Student”.

0. What happened before?

After the literature and technology research, we struggled a bit to define the goal of our project. We knew quite early that we all wanted to work together as one big team:

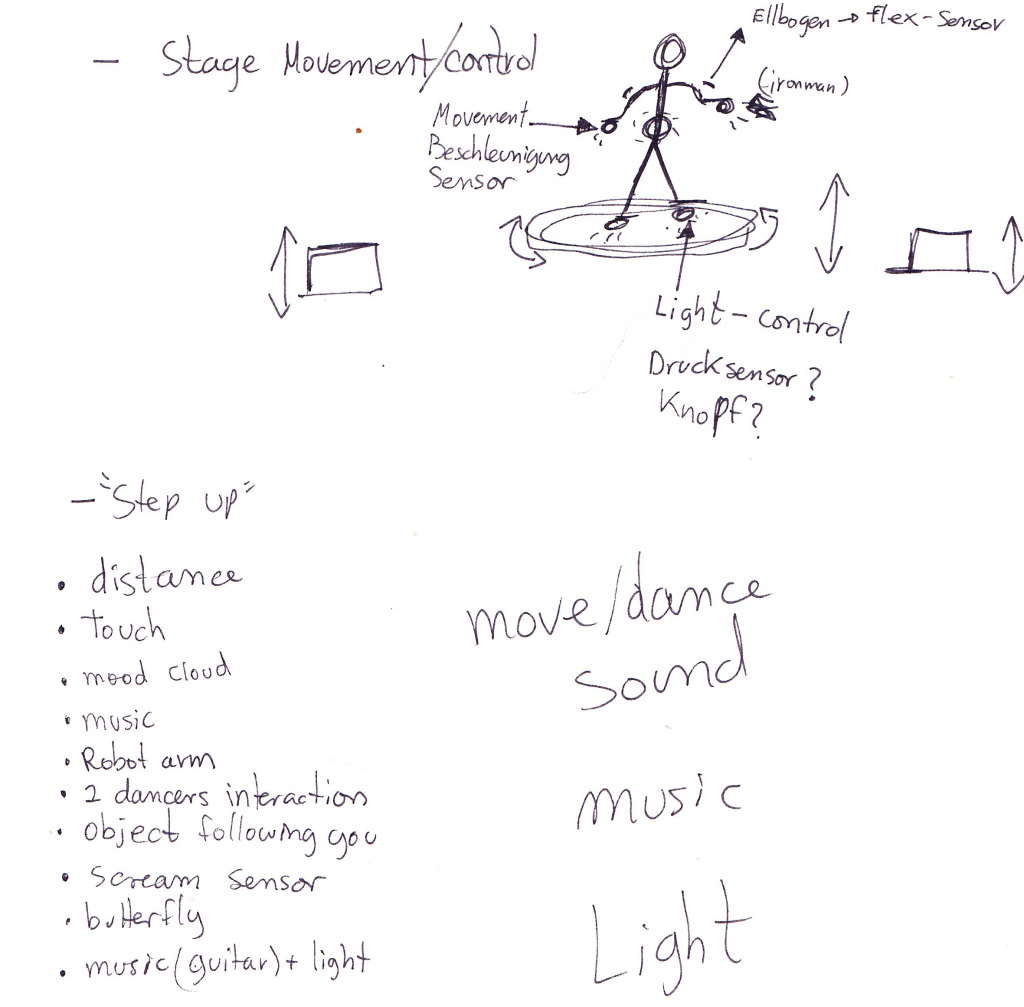

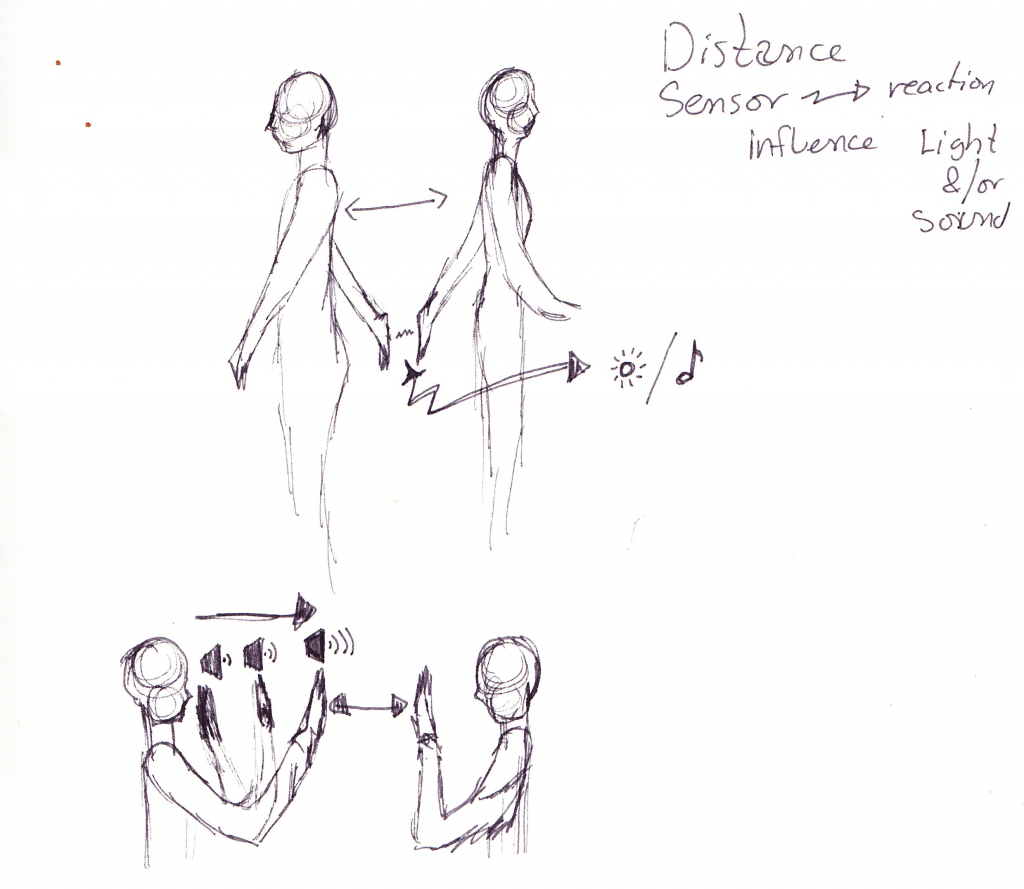

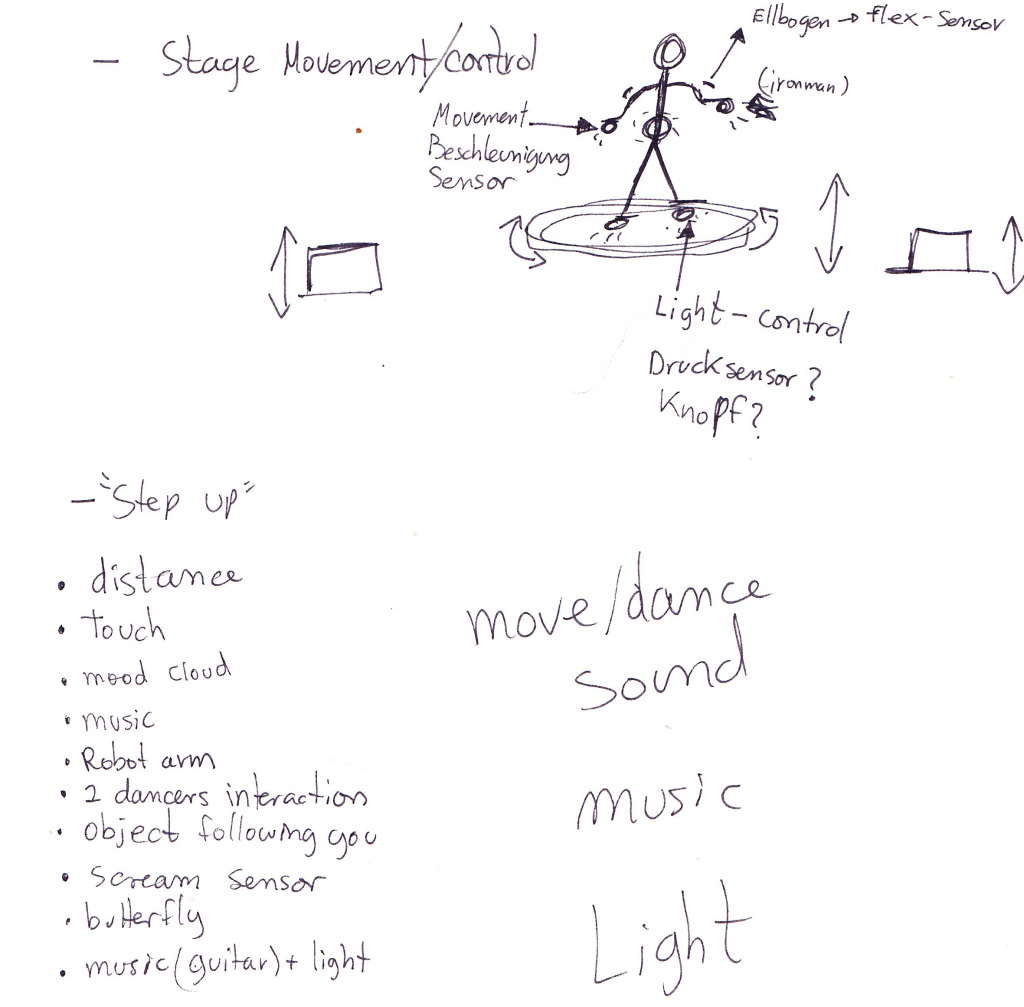

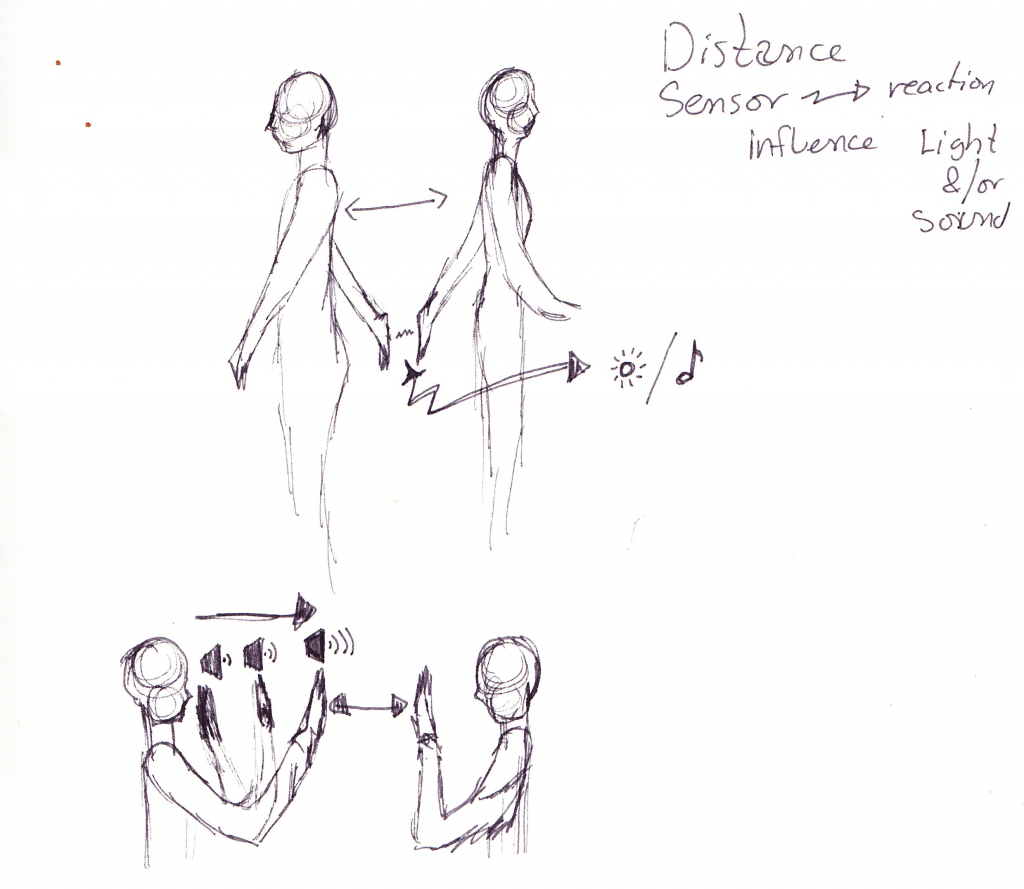

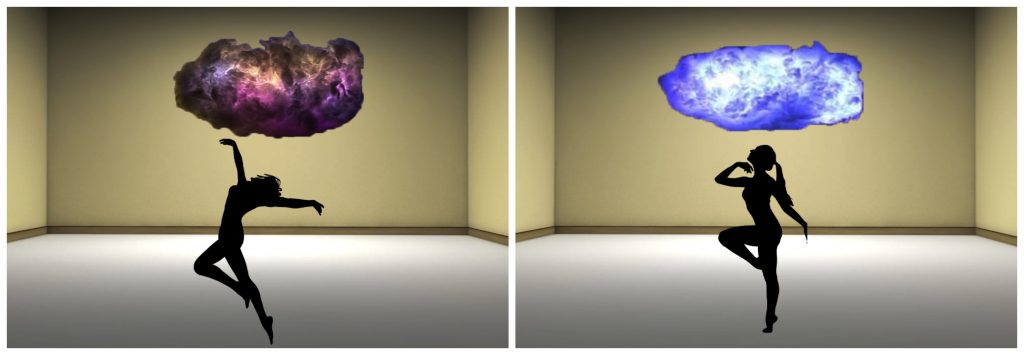

Finding the right idea is rather difficult in such an open project. What is clear is that we want a costume with sensors, worn by a performer, that manipulates a stage. Do we want a dance performance, a theater scene, or another scenario? How can we best combine all our skills? Do we want to craft physical objects, control stage equipment (lights, stage elevator,…), or manipulate audio-visual media?

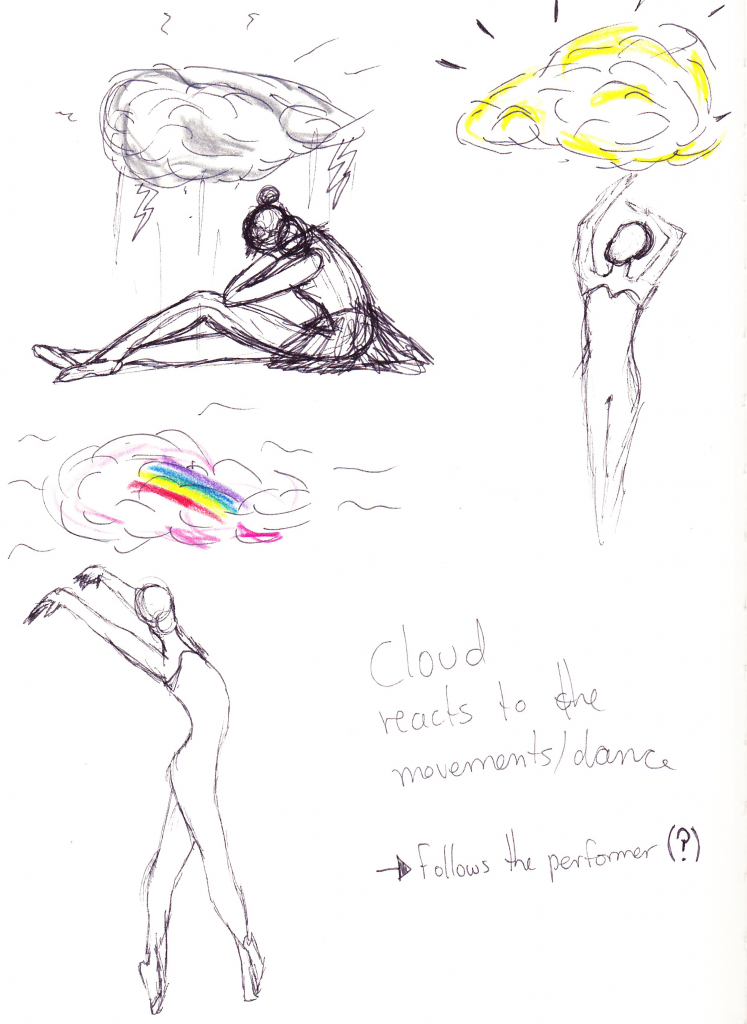

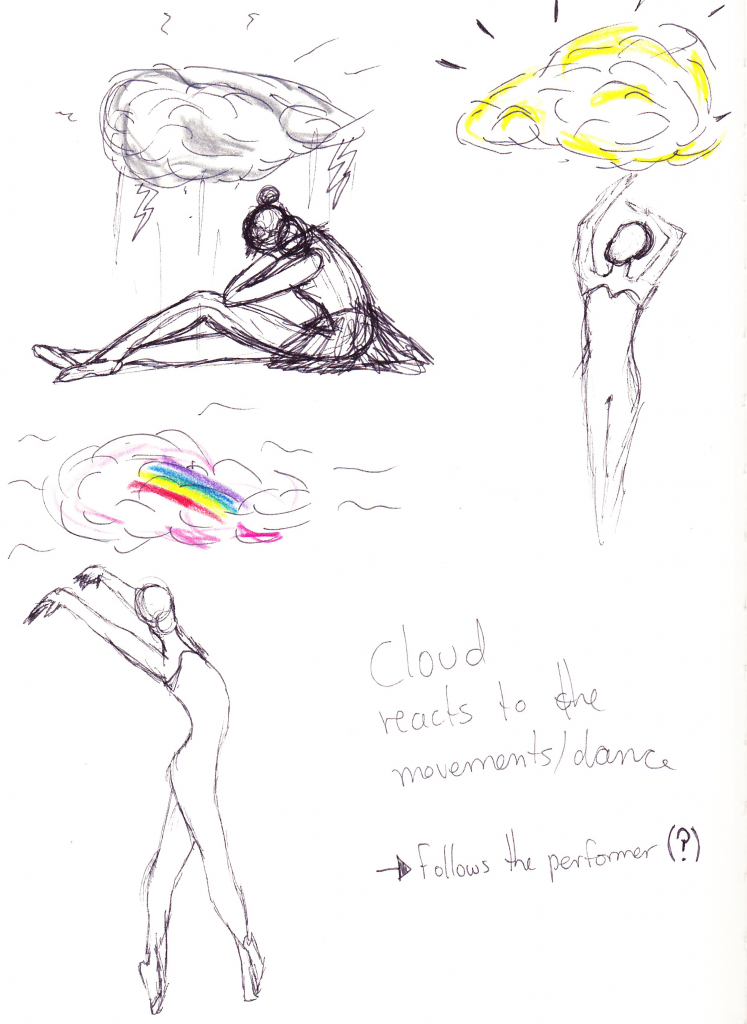

At first we thought of a physical object: a cloud flying over the actor like a spider cam, with several interaction features, showing the protagonist’s mood.

Example of SpiderCam System:

After a few weeks of research, we realized that the overhead scaffold would be difficult to mount without drilling into the ceiling and that the large stepper motors would exceed our budget.

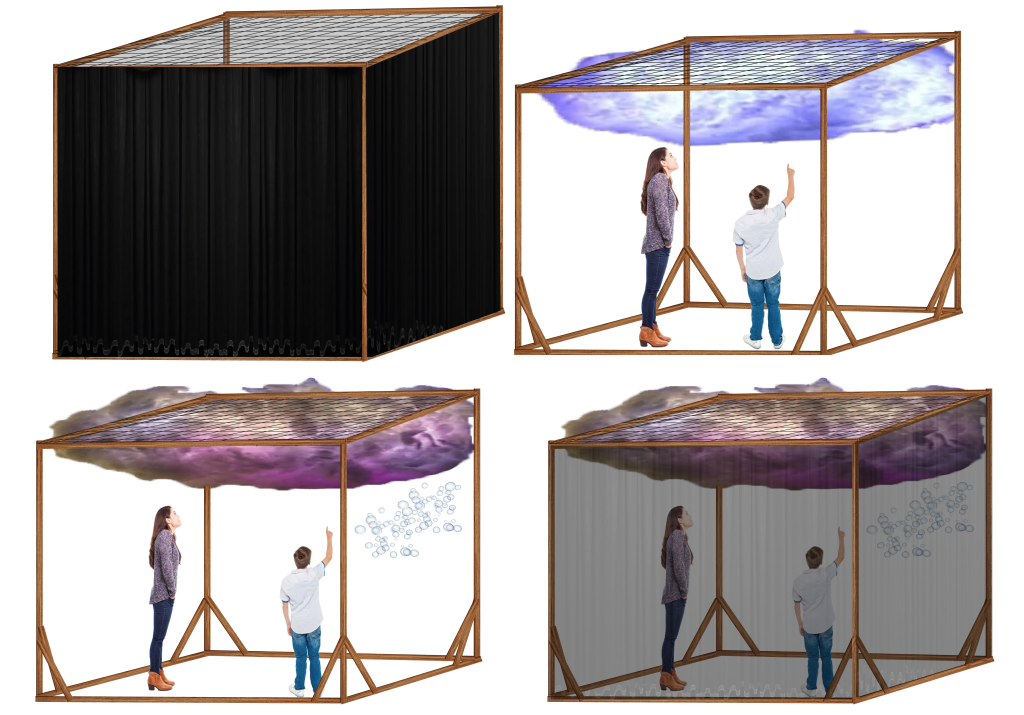

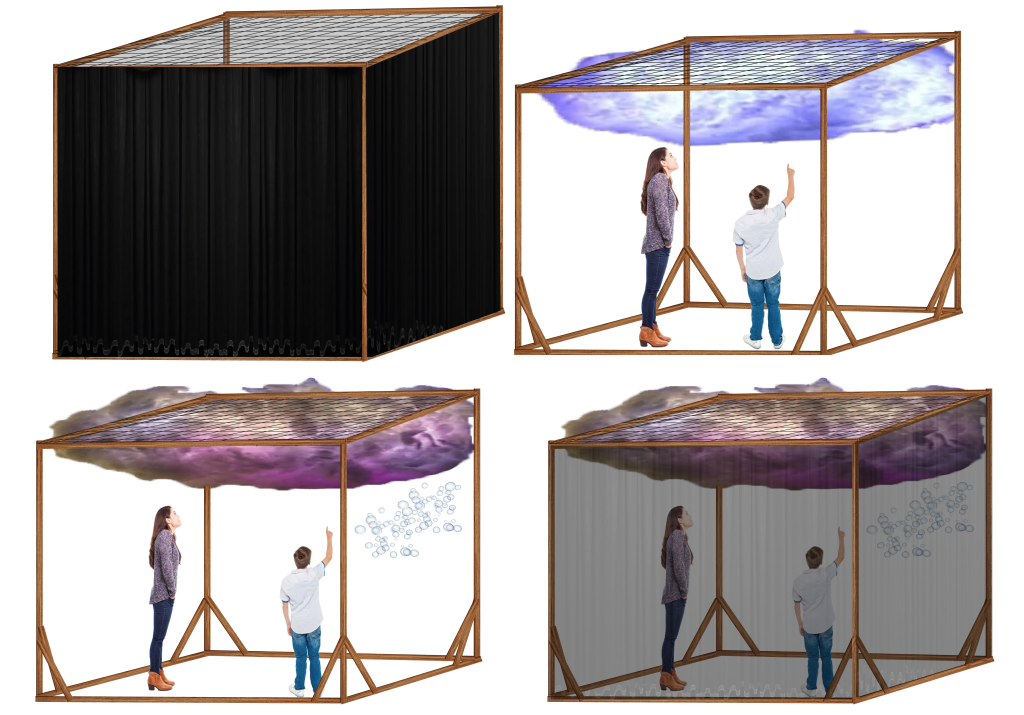

Keeping the cloud concept alive, we thought about a full cloud ceiling so that we would not have to worry about motors.

This had several problems too: the system would not be transportable, it would be difficult to find a space for crafting and installing it, it could be hard to integrate the audience,…

So we came up with new ideas and shifted the focus toward costume design and visual projection.

1. What is happening?

We now have a concept for a stage performance with the working title “Memories of a Syrian Student”:

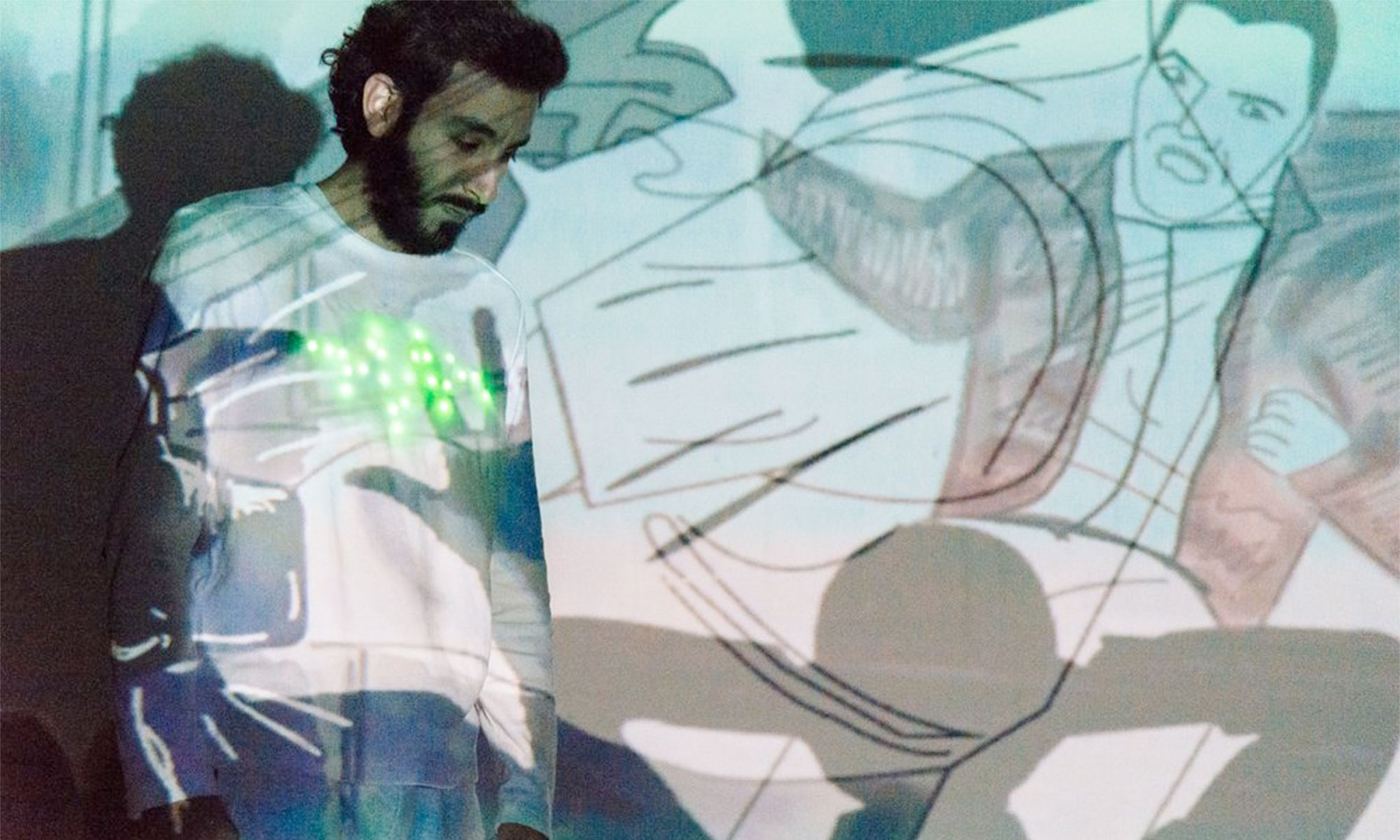

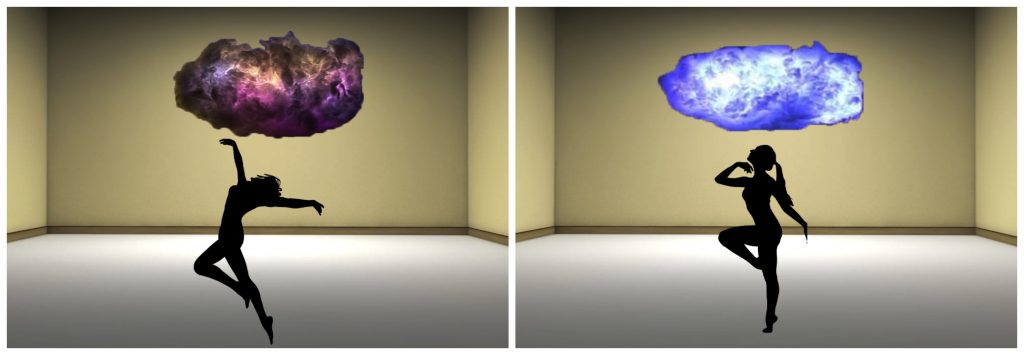

Our student is on a flight from Syria to Germany. Our scenario covers the time between takeoff and landing. We visualize his memories with a projection. The actor walks through his flashbacks (video loops). Through his movement and acting, he controls and manipulates the projection using his costume.

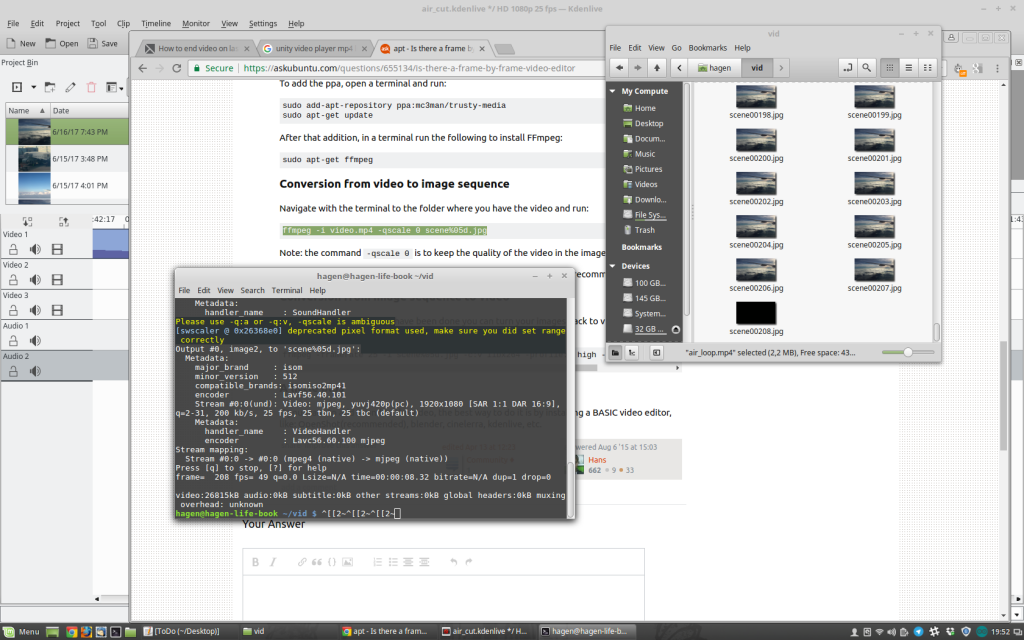

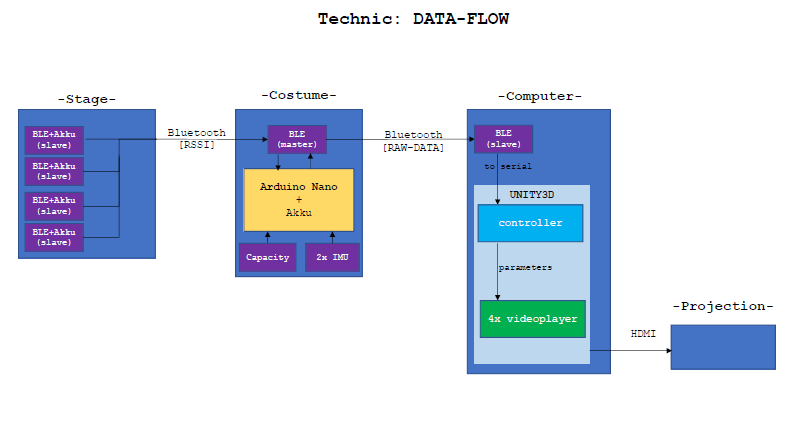

At the moment, Jeong and Phil are developing the script. Laura and Jeong are developing the stage and costume design and a storyboard. Lucas and Phil are drawing up a sensor->actuator list, experimenting with manipulating video loops, and assessing whether Bluetooth communication would be an advantage.
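To give an idea of what a sensor->actuator list could look like in code, here is a minimal Python sketch. Everything in it is an assumption for illustration: the sensor names (`arm_bend`, `torso_tilt`), the video-loop parameters (`loop_speed`, `loop_opacity`), and all value ranges are hypothetical and not taken from our actual list. Each entry rescales a raw sensor reading linearly into a parameter range and clamps it so that noisy readings cannot push the projection out of bounds.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Mapping:
    """One row of a hypothetical sensor->actuator list."""
    sensor: str      # name of the costume sensor (assumed)
    actuator: str    # name of the video-loop parameter (assumed)
    in_min: float    # lowest expected raw sensor value
    in_max: float    # highest expected raw sensor value
    out_min: float   # parameter value at in_min
    out_max: float   # parameter value at in_max

    def apply(self, value: float) -> float:
        """Linearly rescale a raw reading into the parameter range, clamped."""
        t = (value - self.in_min) / (self.in_max - self.in_min)
        t = max(0.0, min(1.0, t))  # clamp out-of-range readings
        return self.out_min + t * (self.out_max - self.out_min)


# Hypothetical example entries, not our real list.
MAPPINGS = [
    Mapping("arm_bend", "loop_speed", 0, 1023, 0.5, 2.0),
    Mapping("torso_tilt", "loop_opacity", -90, 90, 0.0, 1.0),
]


def update(readings: dict) -> dict:
    """Turn the latest sensor readings into video-loop parameters.

    Sensors missing from `readings` are skipped.
    """
    return {
        m.actuator: m.apply(readings[m.sensor])
        for m in MAPPINGS
        if m.sensor in readings
    }


if __name__ == "__main__":
    print(update({"arm_bend": 1023, "torso_tilt": 0}))
```

Keeping the list as plain data means new sensor and parameter pairs can be added without touching the update logic. How the readings actually arrive (cable or Bluetooth) is left open here.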

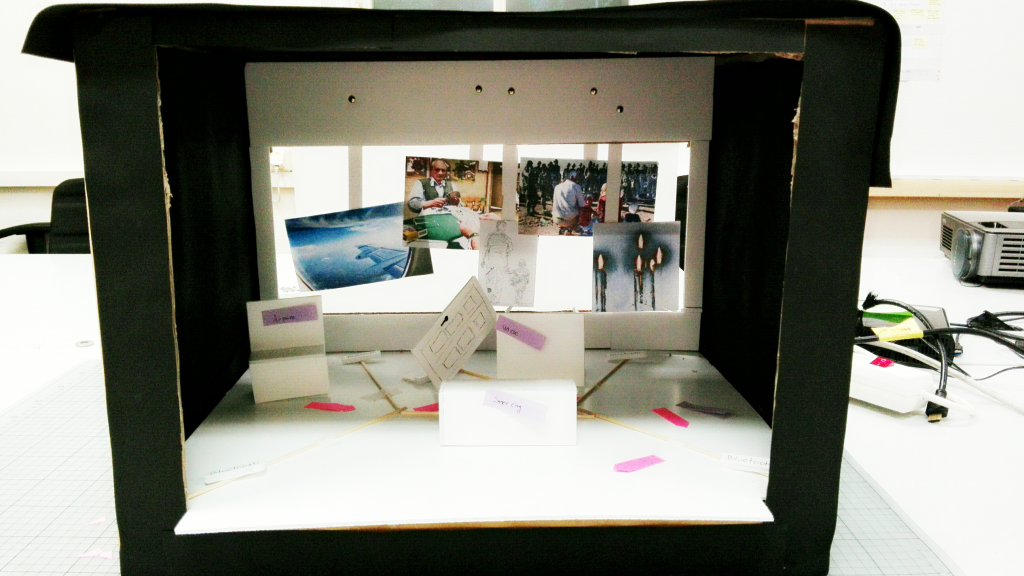

Yesterday I shot some example pictures showing the stage with moody projections:

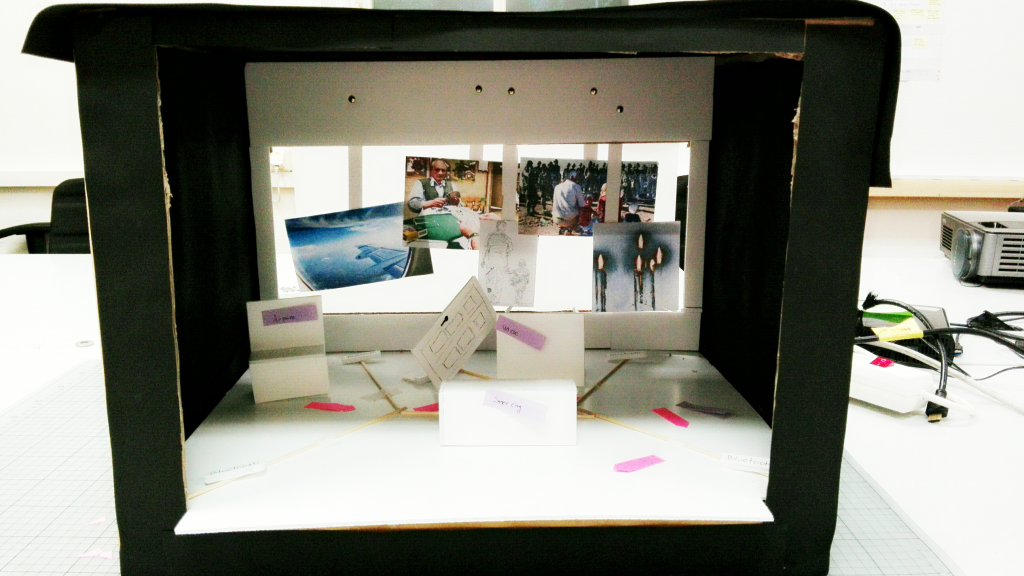

The old stage-model by Jeong & Laura:

(projection picture found at http://cdnfiles.hdrcreme.com/1805/medium/bazzar.jpg?1426885632)

The new stage-model for projections by Jeong:

(projection picture found at http://www.liveshoptravel.com/wp-content/uploads/inside-airplane.jpg)

(projection picture found at https://media1.s-nbcnews.com/j/newscms/2015_41/1250486/151006-palmyra-jpo-627a_128818d31c6c46f432a80b57026affb7.nbcnews-ux-2880-1000.jpg)

The static stage-model by Laura with all the flashback scenes in the back and possible objects on stage:

2. What will happen?

From now on, each step will be posted in the project category.

There is obviously still a lot to do. Some open questions are:

- Who will be the actor?

- Who is going to do the off-stage voice?

- Will the projection be archive footage, self-made/drawn material, or a 3D animation?

- …