Source

Naoyuki Houri, Hiroyuki Arita, Yutaka Sakaguchi. 2011. Audiolizing body movement: its concept and application to motor skill learning. In AH '11: Proceedings of the 2nd Augmented Human International Conference, Article No. 13. Tokyo, Japan, March 13, 2011.

Summary

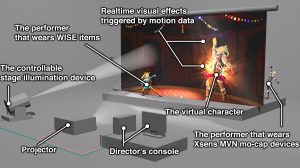

This article presents a system that converts the posture and movement of the human body, or of tools controlled by a person, into acoustic signals and feeds them back to the user in real time.

The authors note that sound effects play a major role in everyday life and events, and that many artificial systems, such as video games and cell phones, display information through the auditory channel. Sound effects are commonly added to enhance the sense of realism, or to make images and signals more salient through auditory events, which helps our brain learn the correlation between them.

In contrast, information about our own bodies, such as posture, movement, and muscle force, is difficult to perceive directly. This is why audiolizing such movements can help us sense and improve them. It also offers a way to compare body states across different individuals, or for the same person on different occasions.

Practice

- Assistance with Soldering Work (the audiolization system measures temperature)

- Audiolization of Calligraphy (a 6-axis force/torque sensor attached to the brush)

- Pole Balancing Game (3D posture sensor)

- Acoustic Frisbee (3D acceleration sensor)
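To make the idea of audiolization concrete, here is a minimal sketch of one possible sensor-to-sound mapping. It assumes a reading (for instance, the temperature in the soldering application) is normalised to a known range and mapped onto a pitch; the function names, the 220 to 880 Hz range, the exponential mapping, and the 8 kHz sample rate are all illustrative assumptions, not the mapping Houri et al. describe.

```python
import math


def value_to_frequency(value, v_min, v_max, f_min=220.0, f_max=880.0):
    """Map a sensor reading onto a pitch in Hz (hypothetical mapping, not the paper's).

    The reading is normalised to [0, 1] and clamped, then mapped exponentially,
    so equal steps in the reading produce equal musical intervals.
    """
    if v_max <= v_min:
        raise ValueError("v_max must be greater than v_min")
    t = (value - v_min) / (v_max - v_min)
    t = min(max(t, 0.0), 1.0)  # clamp out-of-range readings to the ends of the pitch range
    return f_min * (f_max / f_min) ** t


def tone_samples(frequency, duration_s=0.05, sample_rate=8000):
    """Return one short block of a sine tone as floats in [-1, 1]."""
    n = int(round(duration_s * sample_rate))
    return [math.sin(2.0 * math.pi * frequency * i / sample_rate) for i in range(n)]


if __name__ == "__main__":
    # Hypothetical soldering temperatures in degrees Celsius (illustrative range only).
    for reading in (200, 250, 300, 350, 400):
        freq = value_to_frequency(reading, 200, 400)
        block = tone_samples(freq)
        print(f"{reading} C -> {freq:.1f} Hz ({len(block)} samples)")
```

For example, `value_to_frequency(300, 200, 400)` places a hypothetical 300 °C reading halfway through a 200 to 400 °C range and returns 440.0 Hz. In a real-time feedback loop, each short block returned by `tone_samples` would be sent to an audio output as new sensor readings arrive; that output step is left out here because it depends on the audio library used.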

Relevance

If we want to use different sensors as a feedback system for understanding our gestures better (the signals and signs of our gestures and movements), this article is helpful for seeing how the authors applied different sensors to different tasks and situations. We can use it as a source of inspiration.