Student projects from the course Bild zu Ton, Ton zu Bild (Image to Sound, Sound to Image):

Matthias Breuer: Bild-Ton-Wandler (Image-to-Sound Converter)

The Bild-Ton-Wandler is a device for sending and receiving images by means of audible tones. The setup consists of two devices (or, less commonly, more). Each device can act as either sender or receiver. At the start, one device is chosen to send first, and all the other devices receive. Once the sender has transmitted its whole image, sender and receiver swap roles. The result is a continuous dialogue between two partners in which the message keeps changing.

The data is transmitted at low frequencies with a simple coding algorithm, so the communication cannot be free of interference. Between sender and receiver, external factors such as the room and ambient noise affect and alter the message.
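The description says only that the device uses low frequencies and a simple coding algorithm. The following Python sketch illustrates that general idea. It is not Matthias Breuer's actual method, and it rests on several assumptions:

- 16 grey levels.
- One sine tone per pixel, with the pitch set by brightness.
- A receiver that estimates each tone's frequency by counting zero crossings.

The sample rate, tone length and frequency band are invented values. The helper `add_noise` is a hypothetical, crude stand-in for room and ambient noise.

```python
import math
import random

SAMPLE_RATE = 8000            # samples per second (assumed)
TONE_SECONDS = 0.1            # length of one pixel's tone (assumed)
F_BASE, F_STEP = 200.0, 50.0  # grey level 0 -> 200 Hz, level 15 -> 950 Hz (assumed)
LEVELS = 16                   # 4-bit grey values (assumed)
N = int(SAMPLE_RATE * TONE_SECONDS)   # samples per pixel

def encode(pixels):
    """Sender: one sine tone per pixel, brighter pixels get higher tones."""
    samples = []
    for level in pixels:
        freq = F_BASE + F_STEP * level
        samples.extend(math.sin(2 * math.pi * freq * i / SAMPLE_RATE) for i in range(N))
    return samples

def add_noise(samples, amount, seed=0):
    """Hypothetical stand-in for room and ambient noise: random jitter per sample."""
    rng = random.Random(seed)
    return [s + rng.uniform(-amount, amount) for s in samples]

def estimate_freq(chunk):
    """Estimate a tone's frequency from its number of sign changes."""
    crossings = sum(1 for a, b in zip(chunk, chunk[1:]) if (a < 0) != (b < 0))
    return crossings * SAMPLE_RATE / (2 * len(chunk))

def decode(samples):
    """Receiver: cut the signal into pixel-sized chunks, snap each to a grey level."""
    pixels = []
    for start in range(0, len(samples), N):
        level = round((estimate_freq(samples[start:start + N]) - F_BASE) / F_STEP)
        pixels.append(max(0, min(LEVELS - 1, level)))
    return pixels

image = [0, 3, 7, 15, 9, 12]                     # one row of a tiny picture
print(decode(encode(image)))                     # clean channel -> [0, 3, 7, 15, 9, 12]
print(decode(add_noise(encode(image), 0.8)))     # noisy channel: levels may come out wrong
```

Counting zero crossings is about the simplest frequency estimator there is. It is also easily fooled by noise near the zero line, and that fits the point of the text: the room changes the message on its way.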

Georg Frömelt: Die Sieben Todsünden (The Seven Deadly Sins)

The project “Die Sieben Todsünden” approaches the dusty notion of sin and the abysses of human character. Each clip takes up one vice from classical theology and turns it into a mix of images and symbols.

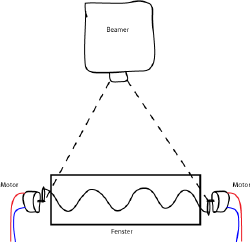

Christian Hellmann: Schwarze Welle (Black Wave)

A black wave in a black box. Two rotating motors generate the wave. They are connected by a black cord, a rope or something similar. A projector casts a white line onto the wave.

Sabina Kim: Stop motion

Music video: imagine walking through the streets of Weimar as a stranger and wondering what really lies behind the beautiful facades.

Johann Niegl: Animierte Bildfragmente (Animated Image Fragments)

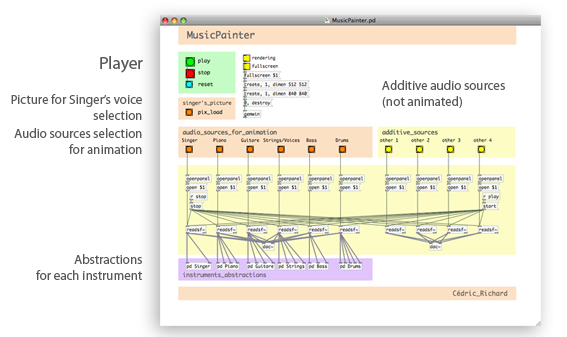

Cédric Richard: MusicPainter

My project is about using music to generate pictures on a computer, more specifically with Pure Data. The aim is to create a kind of digital painting. It illustrates different sound and music sources with simple forms, colors and movements. To do this, I want to build a multitrack player in Pure Data.

Artists and Influences

For this project, I thought a lot about three artists: Kandinsky, Fischinger and McLaren.

Kandinsky, because one of his aims was to translate music into painting, or at least to paint like a composer. His forms, lines and colors act like interruptions, fragments of rhythm or notes, and they give a feeling of music in a single picture. I could say that my goal is to recreate a kind of living Kandinsky painting. However, there is a big difference between his work and my project, even bigger than the question of movement itself: composition. Kandinsky's pictures are highly composed and carefully calculated. Computer-generated imagery, at least the way I use it, can only give me a random result. I can configure some possible kinds of forms, movements or colors. How they appear (size, appearance, location, resulting RGB color or opacity) depends more on the music and the computer than on me. So I can't choose the exact result or plan a composition.

However, Kandinsky's work shows a kind of code, and that code could be the starting point of an automatic process.

With Fischinger and McLaren, movement is present, even if it is still often a question of composition, especially with Fischinger. But McLaren's scratched film footage has a kind of random result, along with this idea of wild interruptions or marks.

[1] Here is a short film directed by Norman McLaren in 1971, called Synchromy. Its visuals, which are linked to the sound, strongly evoke computer and digital imagery.

I'm also interested in the work of TVestroy, who creates live sound and music with animations. I'm equally interested in Billy Roisz and Dieb13, who create pictures from sound and work on the feedback between the two. These performers work with cable bending. It's not exactly what I do, but their work shares the idea of generating material from audio or video signals using computers.

Pure Data

In my work, I mainly use three kinds of information: pitch, amplitude and attack. At first, I worked a lot on pitch. I used a series of patches with filters that isolated each note over three octaves and linked each note to a color change. But the result was not precise enough. When I tried this approach to animate sounds from a bass guitar, the harmonics made it hard to follow the movements and changes in the music. So I started to work more with the attack information. My conclusion was that in animation, changes in shape or movement are easier to follow than changes in color.
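Attack detection is the information the following paragraphs rely on most. In Pd this job is usually done by an object such as [bonk~]. The Python sketch below only illustrates the basic idea of a simple onset detector and is not taken from the MusicPainter patch. It splits the signal into frames and measures each frame's RMS level. It reports an attack when the level rises well above that of the previous frame. The function names, frame size, threshold, ratio and hold time are all assumptions.

```python
import math

def frame_rms(samples, frame_size):
    """RMS level of consecutive, non-overlapping frames."""
    return [math.sqrt(sum(s * s for s in samples[i:i + frame_size]) / frame_size)
            for i in range(0, len(samples) - frame_size + 1, frame_size)]

def detect_attacks(levels, threshold=0.1, ratio=2.0, hold=3):
    """Return the indices of frames where the level jumps sharply.

    threshold: minimum RMS for a frame to count as an attack (assumed)
    ratio:     how much louder than the previous frame it must be (assumed)
    hold:      frames ignored after an attack so one note fires once (assumed)
    """
    attacks, blocked_until = [], -1
    for i in range(1, len(levels)):
        if i <= blocked_until:
            continue
        if levels[i] > threshold and levels[i] > ratio * max(levels[i - 1], 1e-9):
            attacks.append(i)
            blocked_until = i + hold
    return attacks

def tone(n, amp, freq=440.0, rate=8000):
    """Test signal: n samples of a sine wave."""
    return [amp * math.sin(2 * math.pi * freq * i / rate) for i in range(n)]

# silence, a loud note, silence, a softer note (64-sample frames)
signal = [0.0] * 256 + tone(256, 0.8) + [0.0] * 256 + tone(256, 0.5)
print(detect_attacks(frame_rms(signal, 64)))   # -> [4, 12]
```

An attack is a jump in level relative to the previous frame rather than a fixed loudness. For that reason, the softer second note is still detected.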

Later, I had another problem. I used a lot of particles in Pd, and my abstractions (one for each instrument) swapped their color information with each other. For example, guitar colors were sent to the drum shapes. At first, I tried to swap the color parts back in all the abstractions. But the colors could still change, especially when not all the abstractions were in use at the same time. So, having no solution, I decided to make use of this problem. I used the [part_targetcolor] object to get more interaction between colors and more varied results. In fact, I drove the color changes with the pitch, which was a way to use this information with less impact than on movement. And I noticed that swapping the shapes' colors was not a problem. The movements and the evolution of the shapes are what the brain mainly follows and links to the music. So my problem became a way to make the colors evolve. Color changes driven by pitch can still be linked to the music, because in a musical composition the different instruments play together. The main thing is to have a global picture that evolves as a whole in accordance with the music.
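One way to picture a pitch-to-color link is the hypothetical mapping below. It is not the mapping used in the patch. The function `pitch_to_rgb` converts a frequency to the nearest MIDI note and places the 12 pitch classes around the hue circle. It uses the octave within an assumed three-octave window starting at C3 for brightness. It relies only on Python's standard `colorsys` module.

```python
import colorsys
import math

def freq_to_midi(freq):
    """Convert a frequency in Hz to a (fractional) MIDI note; A4 = 440 Hz = 69."""
    return 69 + 12 * math.log2(freq / 440.0)

def pitch_to_rgb(freq, low_midi=48):
    """Map a pitch to an 8-bit RGB color.

    Hue: pitch class (C, C#, ..., B spread around the color wheel).
    Brightness: octave inside a three-octave window starting at low_midi
    (48 = C3, an assumed range); notes outside it are clamped.
    """
    note = round(freq_to_midi(freq))
    hue = (note % 12) / 12
    octave = min(2, max(0, (note - low_midi) // 12))
    value = 0.5 + 0.25 * octave
    r, g, b = colorsys.hsv_to_rgb(hue, 1.0, value)
    return round(r * 255), round(g * 255), round(b * 255)

print(pitch_to_rgb(261.63))   # middle C -> (191, 0, 0)
print(pitch_to_rgb(440.0))    # A4       -> (96, 0, 191)
```

Because the hue wraps around every octave, the same note always keeps the same color, whatever its register. This matches the idea of colors that follow the music without driving the movement.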

I chose to work with six sources that create six elements: singer (lead vocal), guitar, piano, drums, bass, and strings (or choir voices). Together, these sources cover most of the typical sounds in music. Of course, you can use music with other sources and assign each of them to the most similar source in this list, for example with electronic music.

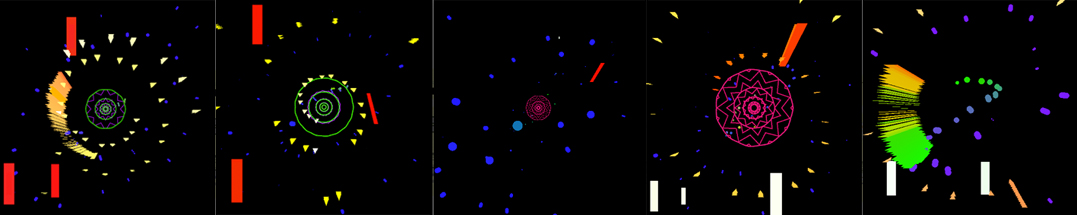

The first source I set up was the singer, the only one that doesn't use particles. I chose to use a kaleidoscope effect in the middle of the picture, so I experimented with different sources and shapes. I finally chose a spiral, which gives irregular circles with horizontal lines that create a kind of stars inside. This rounded result led the project toward an overall composition built around it, so most of the other elements turn around this one. I created different versions of the spiral, with different colors to choose from.

For piano and guitar, which use particles, I started with squares that move horizontally from left to right. A square appears on each detected attack, and the pitch sets its vertical position. I wanted the "notes" to appear like touches of paint. But as I said above, this pitch-based system was not precise enough. So I kept them on the same line. The attack information turned out to be enough to follow the music, with the pitch acting on color. In the end, I made the rectangles turn around the middle of the composition. The rectangles also rotate slowly around themselves, by 5 degrees for each detected attack. The amplitude sets their size.
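The behavior of the piano and guitar rectangles can be summarized as a small state object. In the sketch below, the class `NoteRect` is hypothetical and not code from the patch. Each rectangle sits on a circle around the center of the frame. It spins by 5 degrees on every detected attack and takes its size from the amplitude. The 640x480 frame, the size scale and the orbit speed are assumptions.

```python
import math
from dataclasses import dataclass

CENTER = (320.0, 240.0)   # center of an assumed 640x480 frame

@dataclass
class NoteRect:
    """A piano/guitar rectangle spawned by an attack (hypothetical sketch)."""
    orbit_radius: float        # distance from the center of the picture
    orbit_angle: float = 0.0   # position on that circle, in degrees
    spin: float = 0.0          # rotation of the rectangle itself, in degrees
    size: float = 10.0         # edge length in pixels

    def on_attack(self, amplitude):
        """React to a detected attack: turn by 5 degrees, resize from amplitude."""
        self.spin = (self.spin + 5.0) % 360
        self.size = 10.0 + 90.0 * amplitude   # assumed scale, amplitude in 0..1

    def step(self, orbit_speed=1.0):
        """Move one frame along the circle and return the new (x, y) position."""
        self.orbit_angle = (self.orbit_angle + orbit_speed) % 360
        a = math.radians(self.orbit_angle)
        return (CENTER[0] + self.orbit_radius * math.cos(a),
                CENTER[1] + self.orbit_radius * math.sin(a))

rect = NoteRect(orbit_radius=100.0)
rect.on_attack(0.5)      # two attacks: spin is now 10 degrees
rect.on_attack(0.2)      # the latest amplitude sets the size
print(rect.spin, rect.size, rect.step(orbit_speed=90.0))
```

The orbit (movement around the middle) is kept separate from the spin (rotation of the shape itself). This way, the two motions described in the text can be tuned independently.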

For strings (or voices), I also used rotating particles, but with a fairly uniform size. The amplitude acts on the radius of the rotation. The attack acts on the speed, which increases with each detection. This produces something closely linked to the sound of strings: fast and alive, and reacting very well visually to the sound. I chose triangles because they give a very dynamic effect.

For bass, I used the same system, but slower, with an oblique orbit and a deeper color. It easily creates a background mood. I chose circles because they give an impression of stability, like the bass in a tune.
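Strings and bass share one mechanism with different settings, so a single sketch can cover both paragraphs. In this hypothetical Python version, `OrbitParticle` is an invented name and not part of the patch. The amplitude sets the orbit radius, and each detected attack adds angular speed. The per-frame friction that slowly brings the speed back down is an assumption, since the text only says that the speed increases with each attack. The bass variant uses a smaller boost and a tilted, flattened ellipse to stand in for the "oblique orbit". All numeric values are invented.

```python
import math

class OrbitParticle:
    """A particle circling the center of the picture (hypothetical sketch).

    amplitude -> orbit radius; each detected attack -> extra angular speed.
    tilt/squash turn the circle into an oblique ellipse (used for the bass).
    """

    def __init__(self, boost, friction=0.95, tilt_deg=0.0, squash=1.0):
        self.angle = 0.0          # position on the orbit, degrees
        self.speed = 0.0          # degrees per frame
        self.boost = boost        # speed added per attack (assumed)
        self.friction = friction  # per-frame slow-down (assumed, not in the text)
        self.tilt = math.radians(tilt_deg)
        self.squash = squash      # 1.0 = circle, < 1.0 = flattened ellipse

    def update(self, amplitude, attack):
        """Advance one frame and return the (x, y) offset from the center."""
        radius = 50.0 + 200.0 * amplitude      # louder -> wider orbit (assumed scale)
        if attack:
            self.speed += self.boost
        self.speed *= self.friction
        self.angle = (self.angle + self.speed) % 360
        a = math.radians(self.angle)
        x, y = radius * math.cos(a), radius * self.squash * math.sin(a)
        # rotate the (possibly flattened) orbit by the tilt angle
        return (x * math.cos(self.tilt) - y * math.sin(self.tilt),
                x * math.sin(self.tilt) + y * math.cos(self.tilt))

strings = OrbitParticle(boost=4.0)                          # fast triangles
bass = OrbitParticle(boost=1.0, tilt_deg=30.0, squash=0.4)  # slow, oblique circles
print(strings.update(0.5, True), bass.update(0.5, True))
```

Without some kind of decay, the speed would grow with every attack and never settle. The friction factor is one simple way to keep the motion "alive" but bounded.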

For drums, I used a system that is basically similar to the one for piano and guitar, but without rotation. The rectangles appear around the singer's spiral, but their vertical position is random. The pitch gives the horizontal position.
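For the drums, the text gives two placement rules: the pitch sets the horizontal position and the vertical position is random. The sketch below shows one hypothetical way to apply these rules in Python. The function `drum_rect_position`, the frame size, the pitch range and the logarithmic (octave-based) scale are assumptions. The sketch also ignores the placement around the singer's spiral.

```python
import math
import random

WIDTH, HEIGHT = 640, 480       # frame size in pixels (assumed)
F_LOW, F_HIGH = 40.0, 5000.0   # pitch range spread across the width (assumed)

def drum_rect_position(freq, rng=random):
    """Place a drum rectangle: x follows the detected pitch, y is random.

    The pitch is clamped to [F_LOW, F_HIGH] and mapped on a logarithmic scale,
    so each octave takes the same horizontal distance (low pitches on the left).
    """
    freq = min(max(freq, F_LOW), F_HIGH)
    x = WIDTH * math.log(freq / F_LOW) / math.log(F_HIGH / F_LOW)
    y = rng.uniform(0, HEIGHT)
    return x, y

rng = random.Random(13)        # seeded only to make the example repeatable
kick = drum_rect_position(60.0, rng)
hihat = drum_rect_position(4000.0, rng)
print(kick, hihat)
```

A logarithmic scale gives each octave the same width on screen. This roughly matches how pitch is heard, whereas a linear scale would squeeze all low drum sounds against the left edge.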

You can download the patch here: File:MusicPainter.zip.

For more information on my work on Pure Data, look here: GMU:Audio+Video/projekte#Cédric Richard: MusicPainter.

Result

<videoflash type="vimeo">10915919|400|265</videoflash>

<videoflash type="vimeo">10914603|400|265</videoflash>

Maria Schween: Was ist eine Frau? (What Is a Woman?)

Nil Yalter is a feminist who emigrated from Turkey to Paris in 1965. She is known above all for her ethnological work with Turkish guest workers and, together with the “Groupe de 4”, for the film about the women's prison “La Roquette”. She dedicated a half-hour belly dance in front of the video monitor to the suppression and denial of the clitoris in Turkish culture. She writes an ethnologist's explanatory text on her belly, first in circles around her navel and then in lines. While she moves her belly “concave and convex”, the camera captures the center of her body calmly and without changing distance. Nothing more happens. This response to an oppressive cultural heritage can only be understood through the artist's own explanation. ... Project description