All posts by Tamim

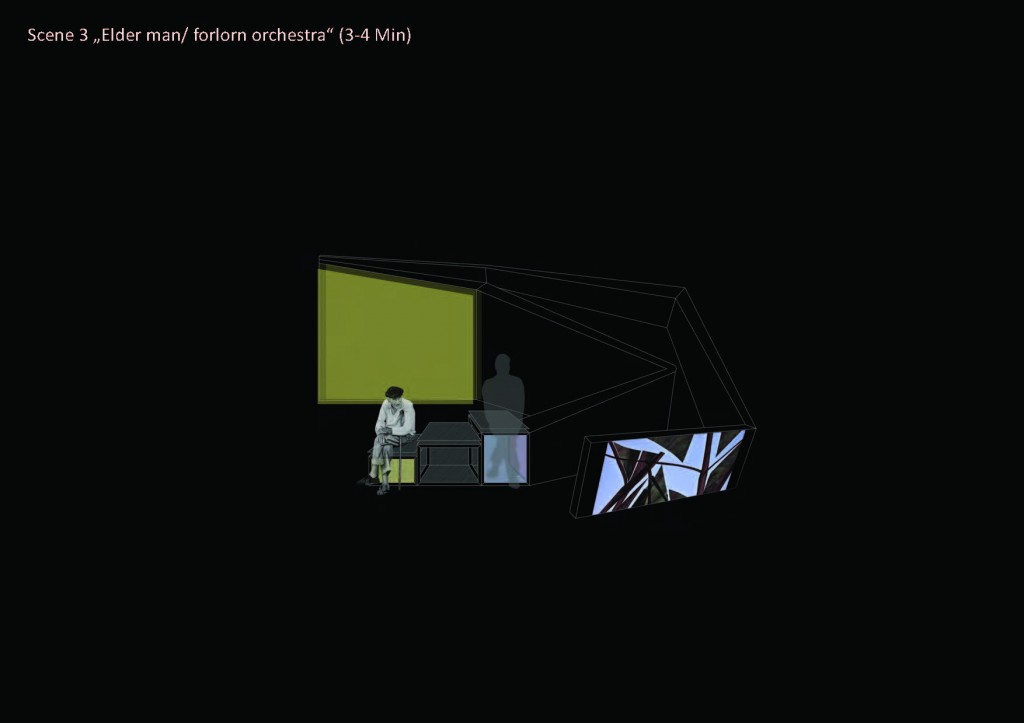

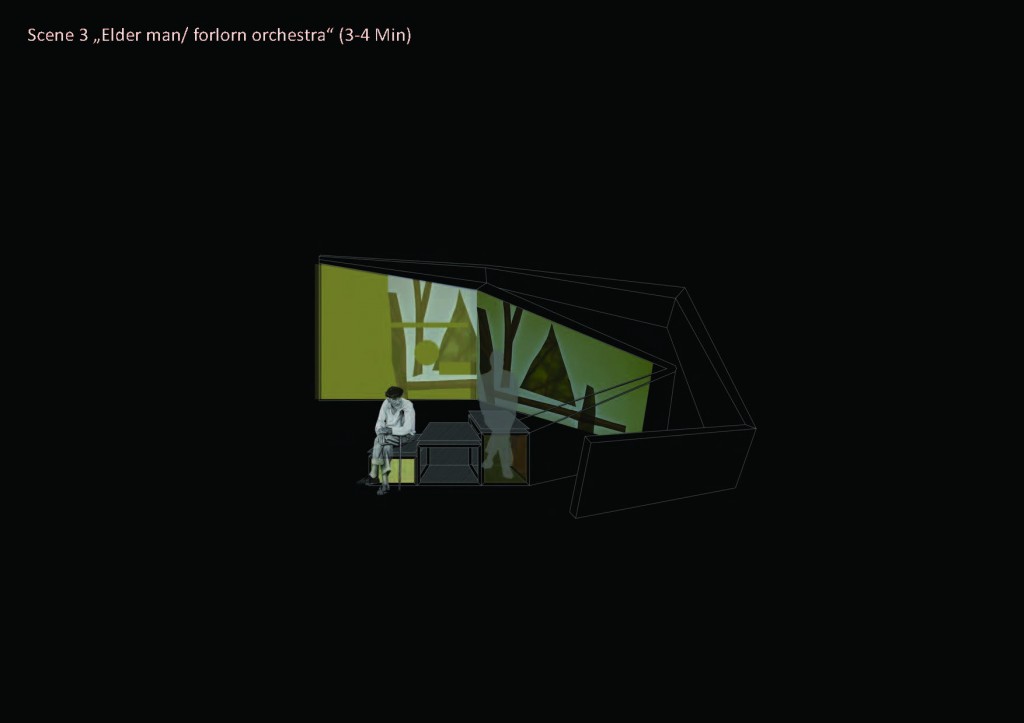

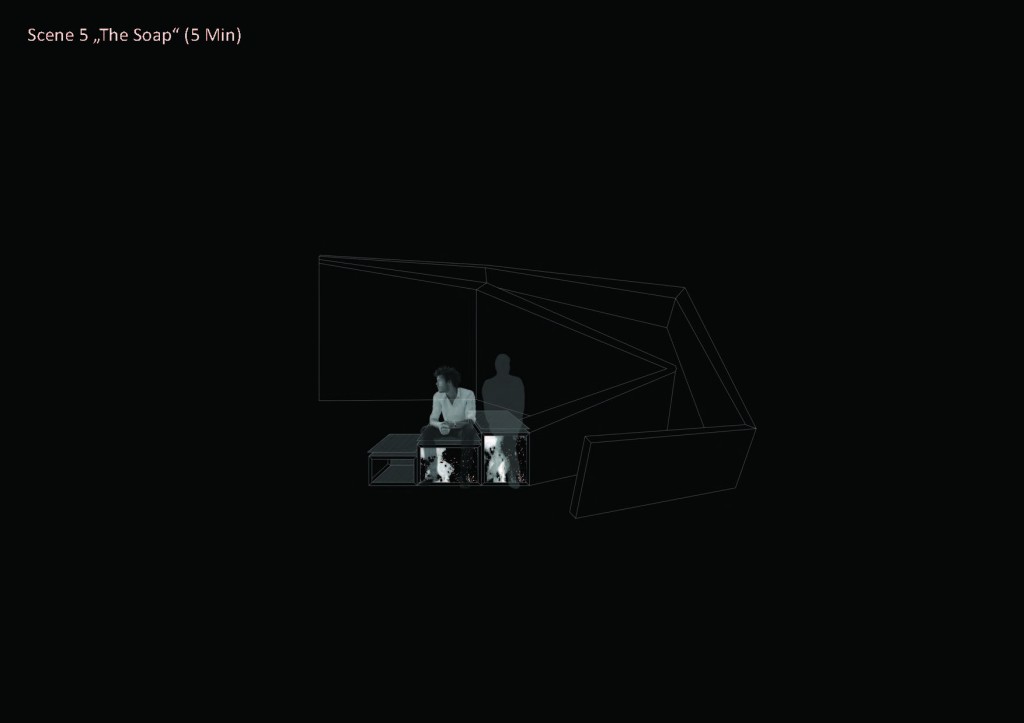

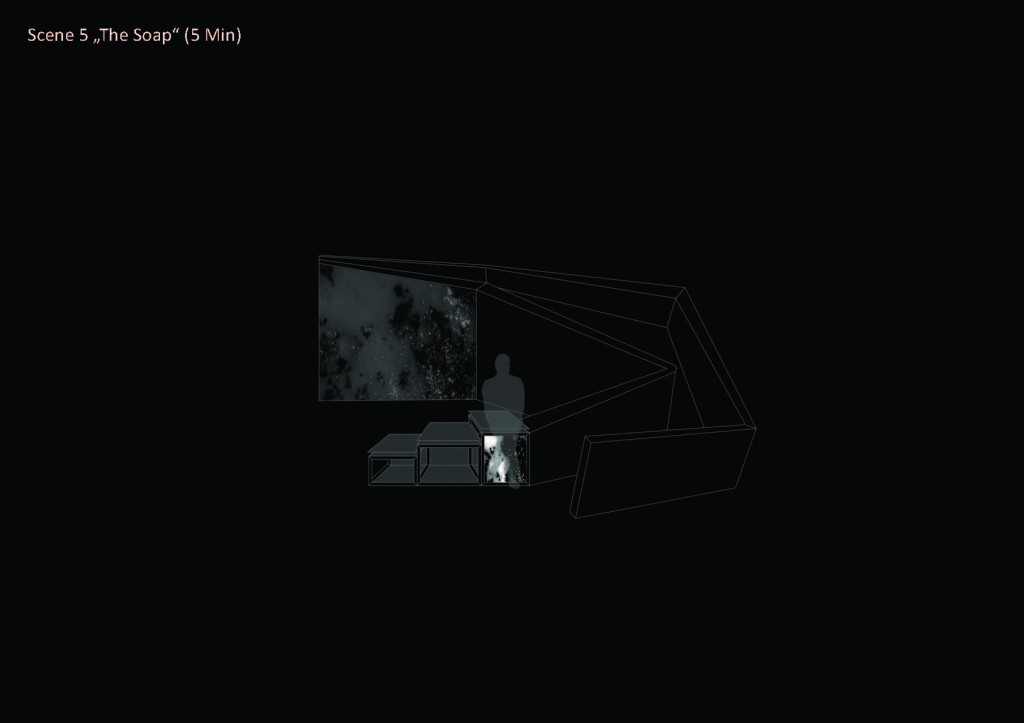

Bench Storyboard

BenchTech!

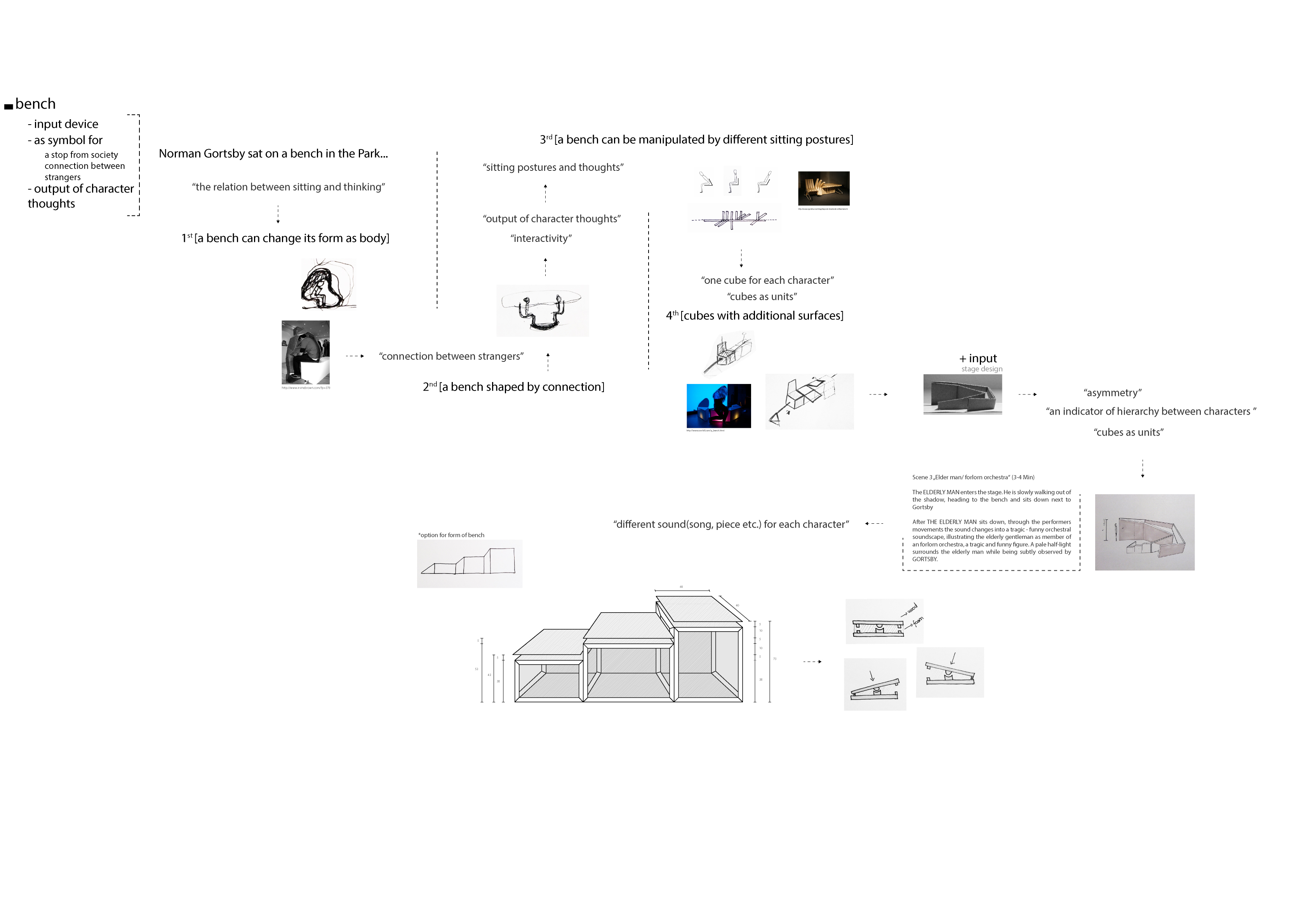

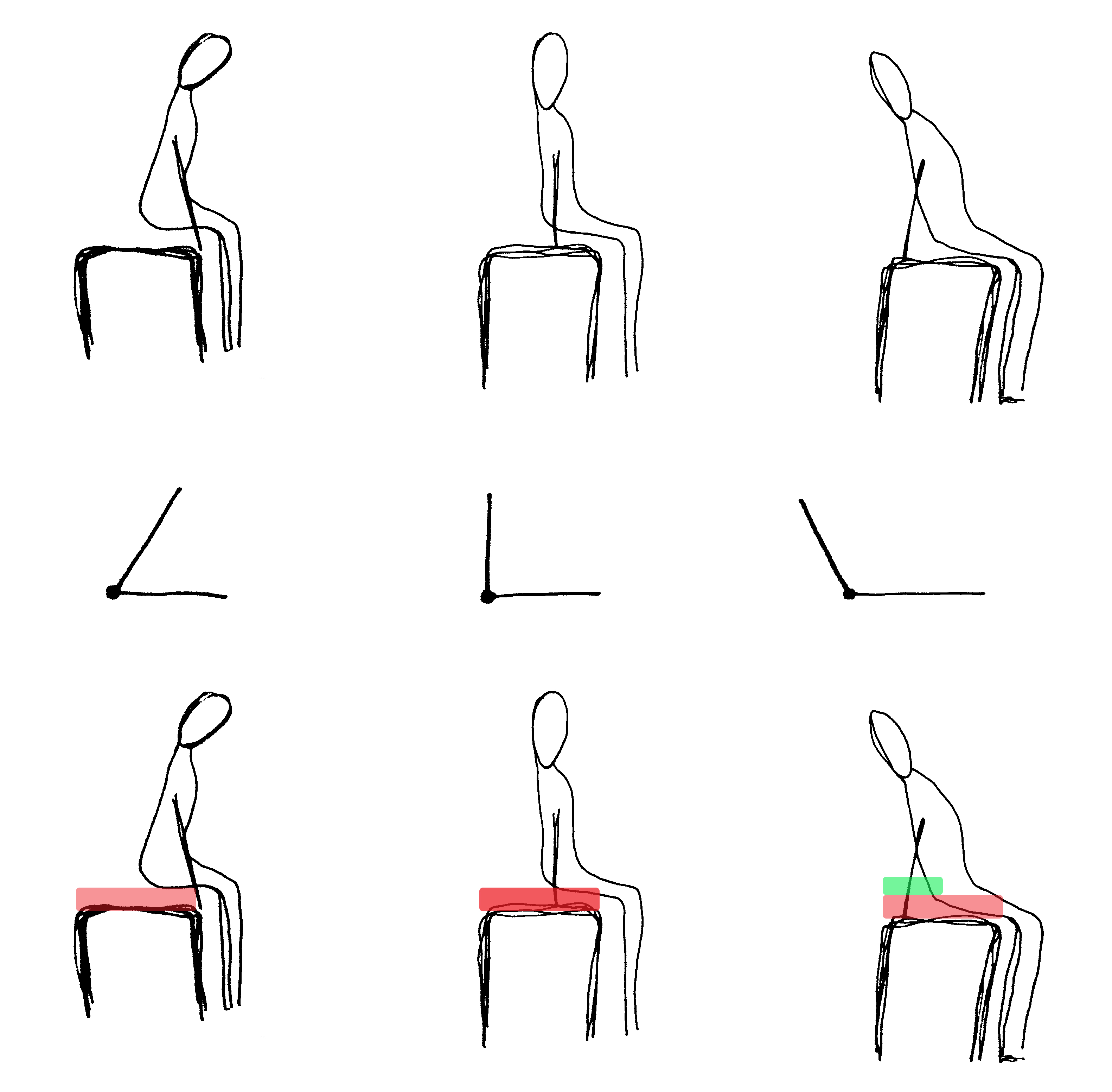

A bench can serve many purposes. Anyone can sit on it, lie down on it, stand on it, or simply touch it. For an interactive theatre show, it is a very versatile prop that actors can interact with in many ways. To get inputs from a bench, we have to consider how an actor interacts with it according to the script. In our chosen script, three actors sit on the bench, each with a different sitting gesture. Different sitting gestures put different amounts of pressure on the bench, and the actors also touch its surface. So, from a technical perspective, we considered two input methods: pressure and touch.

Image 1: Showing different sitting gestures and interaction

Photo Credit: Bahar

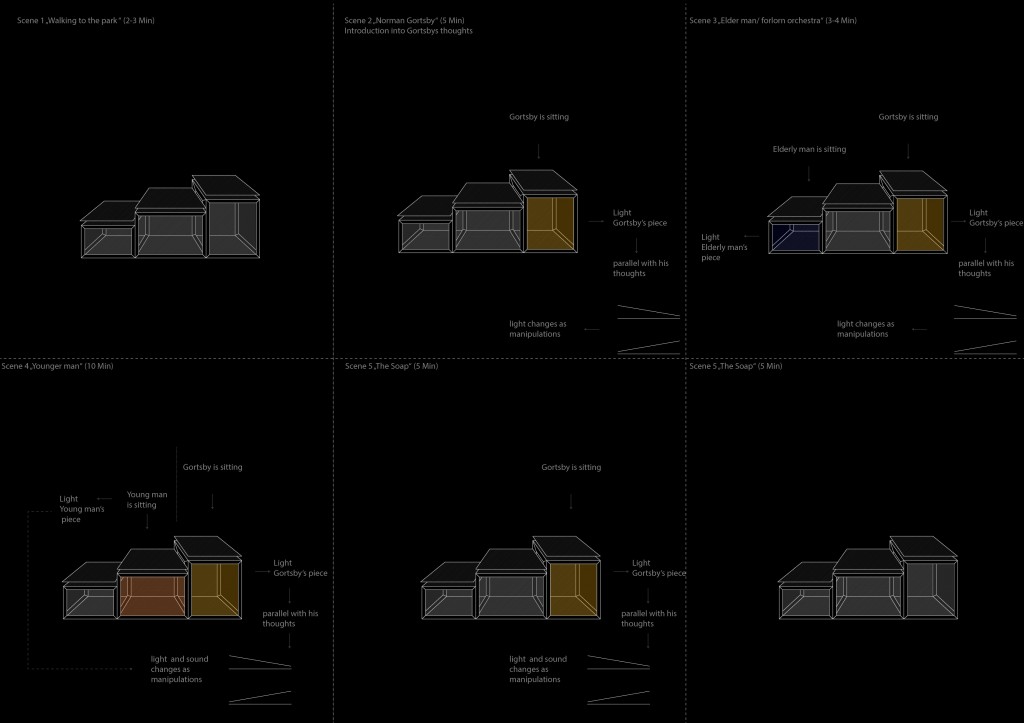

To measure the pressure, we put three switches under the sitting surface of the bench. If one switch closes, we know that someone is sitting on the bench; if more than one closes, we can assume that more pressure is being applied. The three switches therefore let us distinguish three levels of pressure. These inputs produce the outputs needed to make a theatre show interactive. We decided to drive the two major influences in a show: lights and sounds. The following diagrams give a brief idea of the interaction.

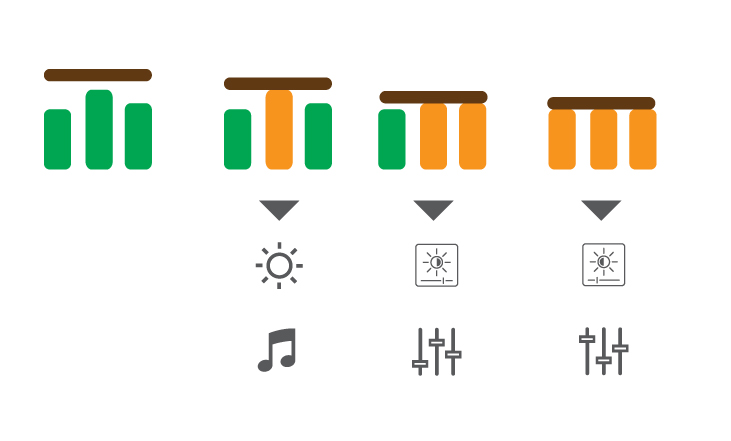

Image 2: Three switches on a single bench to trigger lights and sounds

If a person sits on a bench, it triggers the illumination of the bench and starts playing music. Moreover, depending on the sitting gesture (which increases or reduces the pressure), the two other switches are triggered; these can be programmed to influence the lights and music according to the storyboard of the play.
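The switch logic described above can be sketched as follows. This is only an illustration in Python: on the bench itself the same logic would run on the Arduino, and the brightness values and cue names in `ACTIONS` are hypothetical placeholders, not taken from our storyboard.

```python
def pressure_level(switches):
    """Count how many of the bench's three switches are closed (0 to 3)."""
    return sum(1 for closed in switches if closed)


# Hypothetical mapping: pressure level -> (light brightness 0-255, music cue).
ACTIONS = {
    0: (0, "silence"),
    1: (85, "base track"),
    2: (170, "second layer"),
    3: (255, "full mix"),
}


def bench_response(switches):
    """Look up the light and sound response for the current switch states."""
    return ACTIONS[pressure_level(switches)]
```

For example, `bench_response([True, False, False])` selects the first level, which corresponds to someone simply sitting down.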

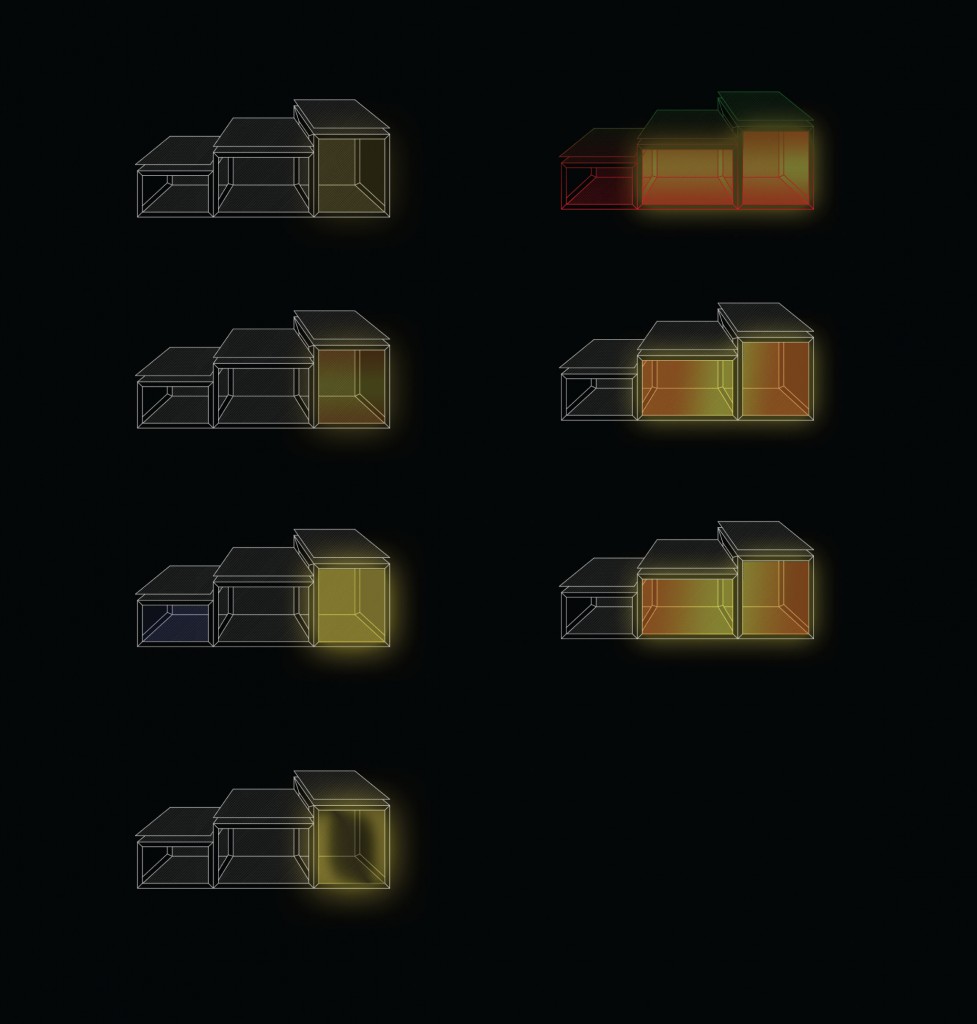

The second input comes from touch. The following diagram gives an idea of the interaction.

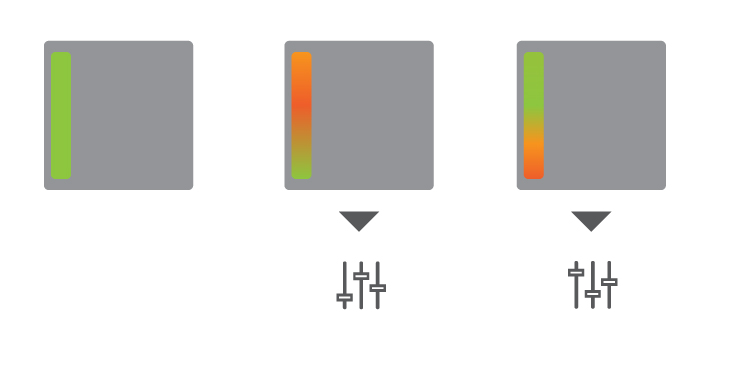

Image 3: Capacitive plate on the bench surface that can influence the music.

The actors can touch a conductive strip on the bench surface to influence the sounds and lights according to the storyboard.

Switch:

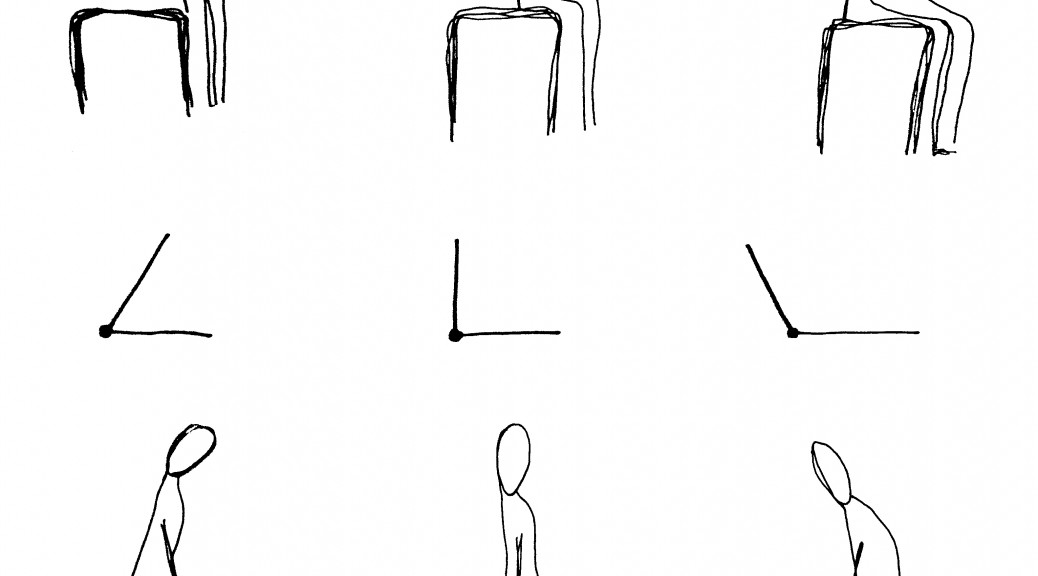

We are going to make 9 switches (3 for each bench) from copper tape, attached under the sitting surface of the benches, which are specially designed by our project's design team. When two copper tapes touch, they send a signal to the microcontroller, which counts it as an input and immediately produces an output: turning on the lights inside the bench and playing pre-recorded music from the soundbox.
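One possible way to handle the 9 switch states as a single value is to pack them into a bitmask. The sketch below is an assumption about how this could be organised, not our final design; the bit order (bench 0, switch 0 in the lowest bit) and the function names are hypothetical.

```python
BENCHES = 3
SWITCHES_PER_BENCH = 3


def pack_switches(states):
    """Pack 9 booleans (bench 0, switch 0 first) into one integer bitmask."""
    if len(states) != BENCHES * SWITCHES_PER_BENCH:
        raise ValueError("expected 9 switch states")
    mask = 0
    for index, closed in enumerate(states):
        if closed:
            mask |= 1 << index
    return mask


def bench_switches(mask, bench):
    """Return the three switch states of one bench from the bitmask."""
    base = bench * SWITCHES_PER_BENCH
    return [bool((mask >> (base + i)) & 1) for i in range(SWITCHES_PER_BENCH)]
```

A single integer like this is easy to send over a serial link and to split up again per bench on the receiving side.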

Image 4: Copper tape used as switch

Capacitive Touch Sensor using Graphite:

A simple pencil drawing can be used as a capacitive touch plate. The sensor measures how long it takes for a conductive material to go from ground to a high potential when it is pulled up to that potential through a resistor. The higher the capacitance of the material, the longer it takes to reach the high state. A finger touching the plate adds capacitance, so a touch shows up as a longer charge time. We will influence the pre-recorded music using this simple, cheap technology: the actors can drag a finger along the surface to increase or reduce the tempo of the music.
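The timing idea can be illustrated with the standard RC charging formula: a plate charged through a resistor R reaches a fraction k of the supply voltage after t = -RC ln(1 - k). The sketch below assumes this ideal model; the threshold fraction, the tempo range, and the idea of a normalised finger position between 0 and 1 are assumptions made for illustration only.

```python
import math


def charge_time(resistance_ohm, capacitance_farad, threshold_ratio=0.5):
    """Seconds for an ideal RC circuit to charge from 0 V to threshold_ratio * Vcc."""
    if not 0.0 < threshold_ratio < 1.0:
        raise ValueError("threshold_ratio must be between 0 and 1")
    return -resistance_ohm * capacitance_farad * math.log(1.0 - threshold_ratio)


def tempo_from_position(position, min_bpm=60.0, max_bpm=180.0):
    """Map a normalised finger position (0.0 to 1.0) linearly onto a tempo in BPM."""
    position = min(1.0, max(0.0, position))  # clamp readings outside the strip
    return min_bpm + position * (max_bpm - min_bpm)
```

With a 1 MΩ resistor, a larger capacitance (a touched plate) gives a longer `charge_time` than a smaller one (an untouched plate), which is the difference the microcontroller would look for.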

Lights:

To light up the bench, we started working with RGB LEDs, which can produce multiple colors from a single LED.

Since we need many LEDs, building an LED matrix out of single RGB LEDs to illuminate the benches would be complex. We therefore started working with RGB LED strips, which are designed for exactly this purpose. They give complete control over the lights, can produce any color, are bright, and are cheap. We considered the WS2801 and LPD6803, then moved on to a more convenient product from Adafruit: analog RGB LED strips.
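On an analog RGB strip the whole strip shows one color at a time, set by three PWM levels (red, green, blue). A smooth transition between two colors can then be computed by linear interpolation. The sketch below only shows that calculation; the 0 to 255 range assumes 8-bit PWM, and the function name is hypothetical.

```python
def blend(color_a, color_b, t):
    """Interpolate between two (r, g, b) colors; t=0 gives color_a, t=1 gives color_b."""
    t = min(1.0, max(0.0, t))  # clamp so the result stays between the two colors
    return tuple(round(a + (b - a) * t) for a, b in zip(color_a, color_b))


def fade_steps(color_a, color_b, steps):
    """Return the list of colors for a fade with the given number of steps (>= 2)."""
    if steps < 2:
        raise ValueError("a fade needs at least 2 steps")
    return [blend(color_a, color_b, i / (steps - 1)) for i in range(steps)]
```

Each tuple in the result would be written to the three PWM pins in turn, with a short delay between steps.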

Connections:

We are using an Arduino-based microcontroller, programmed with the Arduino IDE, for the circuit connections, and Processing to play the music. The following diagram shows the idea.

Technical Difficulties we need to overcome:

- Proper “serial handshaking” between Arduino and Processing

- Working with analog RGB LED strips

- Working with a switch matrix

- Working with a capacitive touch sensor
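For the first item, one common pattern is call-and-response: the board announces itself until the host answers, and after that it sends one reading for every request it receives. The sketch below simulates that exchange in memory so the message order can be checked without hardware. It is an assumption about how our handshake could work, not tested Arduino or Processing code; `FakeLink`, `HELLO` and `REQUEST` are hypothetical names.

```python
from collections import deque

HELLO = "HELLO"      # hypothetical "I am here" message from the board
REQUEST = "REQ"      # hypothetical "send me a reading" message from the host


class FakeLink:
    """In-memory stand-in for a serial cable: two one-way message queues."""

    def __init__(self):
        self.to_host = deque()
        self.to_board = deque()


def board_step(link, contacted, read_sensors):
    """One loop pass on the board side; returns the updated 'contacted' flag."""
    if not contacted:
        if not link.to_board:
            link.to_host.append(HELLO)   # keep announcing until the host answers
            return False
        contacted = True                 # the host answered; fall through and serve it
    if link.to_board:
        link.to_board.popleft()          # consume one request
        link.to_host.append(read_sensors())
    return contacted


def host_step(link, received):
    """One pass on the host side: store readings and ask for the next one."""
    if link.to_host:
        message = link.to_host.popleft()
        if message != HELLO:
            received.append(message)
        link.to_board.append(REQUEST)


def simulate(rounds, read_sensors):
    """Alternate board and host passes and return the readings the host collected."""
    link, contacted, received = FakeLink(), False, []
    for _ in range(rounds):
        contacted = board_step(link, contacted, read_sensors)
        host_step(link, received)
    return received
```

Because the board only sends after a request, neither side can flood the other, which is the main reason to use a handshake at all.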

Kinect Hack Makes Laser Dance Show, Turns Your Living Room Into a Nightclub

Sick of going to the club to party it up and dance the night away? No longer do you have to leave your own home; now you can bring the party to you and pump it up with a Kinect hack laser show and some sweet beats to go with it.

Using the Kinect’s motion tracking and mapping feature to his advantage, Kinect hacker Matt Davis made his body into an audio-visual controller. As Matt explains, by moving his left hand along the z-axis (from the front of his body to the back of his body), he controls where the focal point of the lasers moves (they aim forward or back); using his right hand x-axis (left to right) controls lateral (side-to-side) motion for the lasers; and on the right y-axis (up and down) is the size and so-called “craziness” of the lasers.

Also associated with each of these movements are various audio effects: Moving his left hand on the z-axis adjusts something called the master filter; moving his right hand up or down changes the frequency of the sound, and so on. Make sure to check out the video above for all the details.
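The mapping described in the article, from hand positions to control values, can be sketched as a simple scaling step. This is not Matt Davis's actual Max/MSP patch; the coordinate range of -1.0 to 1.0, the MIDI-style 0 to 127 output, and all names below are assumptions made for illustration.

```python
def scale_to_midi(value, low, high):
    """Map value from [low, high] onto the MIDI controller range 0..127."""
    if high <= low:
        raise ValueError("high must be greater than low")
    ratio = (value - low) / (high - low)
    ratio = min(1.0, max(0.0, ratio))  # clamp out-of-range hand positions
    return round(ratio * 127)


def controls_from_hands(left_hand, right_hand, low=-1.0, high=1.0):
    """Turn two (x, y, z) hand positions into the three controls from the article."""
    _, _, left_z = left_hand
    right_x, right_y, _ = right_hand
    return {
        # left hand, front to back: laser focal point and master filter
        "laser_focus_and_master_filter": scale_to_midi(left_z, low, high),
        # right hand, left to right: lateral laser motion
        "laser_lateral": scale_to_midi(right_x, low, high),
        # right hand, up and down: laser size and sound frequency
        "laser_size_and_sound_frequency": scale_to_midi(right_y, low, high),
    }
```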

The Kinect communicates with OpenNI (an interface that allows communication between devices like the Kinect and middleware), which talks to Max/MSP (a visual programming language used in music and multimedia), which finally talks to Ableton Live (a music sequencer that can utilize digital audio and/or MIDI data). Matt also explains that the system is also talking to a laser control system by Henry Strange (yes, very strange indeed, but seriously, his music is pretty cool!).

With all of these audio and visual effects joined together, Matt can make some interesting dance music just by moving his body. Since Kinect is capable of simultaneously tracking up to 20 joints on two players, you could take things up a notch by inviting a friend over. Just imagine what the possibilities of this would be if you added in a kaossilator (a wicked awesome synthesizer)!

[Matt Davis on Vimeo via Create Digital Music and Engadget]

Follow James Mulroy on Twitter and on StumbleUpon to get the latest in microbe, dinosaur, and death ray news.

Original Post:

http://www.techhive.com/article/229402/Kinect_Hack_Makes_Visual_Audio_Laser_Dance_Show.html

The sense of sensors

Tilt Sensor

A tilt sensor can measure tilt, often in two axes of a reference plane. In contrast, full motion sensing would use at least three axes and often additional sensors. One way to measure the tilt angle with reference to the Earth's ground plane is to use an accelerometer. (Source: Wikipedia)
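As a small illustration of measuring tilt with an accelerometer: when the sensor is at rest, the only acceleration it reads is gravity, so the two tilt angles follow from the direction of that vector. The sketch below assumes a three-axis reading in units of g with the z axis pointing up when the sensor lies flat; axis and sign conventions differ between devices, so treat them as assumptions.

```python
import math


def tilt_angles(ax, ay, az):
    """Return (pitch, roll) in degrees from a static accelerometer reading in g."""
    pitch = math.degrees(math.atan2(-ax, math.hypot(ay, az)))
    roll = math.degrees(math.atan2(ay, az))
    return pitch, roll
```

A sensor lying flat reads roughly (0, 0, 1) and gives zero pitch and roll; turned onto its side so that gravity lies along the y axis, it gives a roll of about 90 degrees. The result is only meaningful while the sensor is not otherwise accelerating.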

Accelerometer & Gyroscope

An accelerometer is a device that measures proper acceleration (“g-force”). Proper acceleration is not the same as coordinate acceleration (rate of change of velocity). For example, an accelerometer at rest on the surface of the Earth will measure an acceleration g = 9.81 m/s² straight upwards. (Source: Wikipedia)

A gyroscope (from Greek γῦρος gûros, “circle” and σκοπέω skopéō, “to look”) is a device for measuring or maintaining orientation, based on the principles of angular momentum. Mechanical gyroscopes typically comprise a spinning wheel or disc in which the axle is free to assume any orientation. (Source: Wikipedia)

Photo sensor

A photoelectric sensor, or photo eye, is a device used to detect the distance, absence, or presence of an object by using a light transmitter, often infrared, and a photoelectric receiver. They are used extensively in industrial manufacturing.

Motion sensor

A motion detector is a device that detects moving objects, particularly people. A motion detector is often integrated as a component of a system that automatically performs a task or alerts a user of motion in an area. (Source: Wikipedia)

Pressure sensor

A pressure sensor measures pressure, typically of gases or liquids. Pressure is an expression of the force required to stop a fluid from expanding, and is usually stated in terms of force per unit area. A pressure sensor usually acts as a transducer; it generates a signal as a function of the pressure imposed. For the purposes of this article, such a signal is electrical. (Source: Wikipedia)

Thermal flashlight (temperature sensor + RGB lights)

Mixed Reality Performance and Interaction

Interaction as Performance (Proc. CHI’05, ACM Press 2005)

Steve Benford & Gabriella Giannachi

The goal of this paper is to discuss the strong relationship between interaction design, theatre and performance, in order to form a conceptual framework and a language that let practitioners and researchers express how computation can be embedded in performative experiences. A further goal is to create a boundary object that sits at the intersection of HCI and theatre and performance studies. The paper documents mixed-reality performance, an emerging theatrical genre, which is intended to convey two major ideas: creating experiences that mix rich and complex real and virtual worlds, and combining the live performance of participants with interactive digital media.

A series of landmark mixed-reality performances by various artists is presented and analysed.

Desert Rain (1997), based on the first Gulf War, is a great example of mixing real and virtual worlds to form a hybrid theatrical set. It uses a series of “rain curtains” (projection screens made of fine water spray through which participants can pass). Ethnographic studies showed how the artists orchestrated the experience to create key climactic moments in the performance.

The next example uses a hybrid city-streets model in which online players are chased by actors who are equipped with GPS and stream live audio as they try to catch them. The researchers' study revealed how the experience needed to accommodate so-called seams in the underlying technical infrastructure.

Like the previous work, Uncle Roy All Around You (2003) connected an online virtual world to city streets, but focused on how a performance might exploit the ambiguity of the relationships between street players, online players and public spectators. The street players engaged with props, locations and actors in the city. Ethnographic studies revealed the significant challenges of orchestrating a distributed mobile experience on city streets.

To explore the nature of mobile engagement further, the researchers worked on another mixed-reality performance, “Rider Spoke” (2007), in which cyclists explored a city at night, recording a series of stories, leaving them at key locations, and then listening to the stories of others.

Rider Spoke (2007)

To explore complex temporal relationships between the real and virtual worlds, “Day of the Figurines” (2006), a text-messaging adventure game for mobile phones, took a different tack. Ethnographic studies showed the challenges of managing highly episodic engagement, especially how participants had to take responsibility for interruptions and for their changing patterns of phone use around other people such as family, friends and colleagues.

This study focused on the multilayered nature of the instructions, which addressed four different aspects: location, sequence of actions, public comportment and relationship to content.

The role of HCI researchers is not only to develop interfaces and supporting technologies for the participants but also to create authoring and orchestration tools for the artists. Another major role is to study the participants' experience, providing a “thick description” of the experience from the participant's point of view. These studies have informed theoretical work through a series of conceptual frameworks that generalise key aspects of performative interaction for the wider HCI audience. Examples are:

- Potential benefits of ambiguity in interface design, which prompts reflection and interpretation

- Seamful design

- Approaches to design the spectator experience

- Moulding discussions of how experiences are framed

The researchers' first observation is that mixed-reality performances tend to be inherently hybrid in structure: they combine multilayered timescales and participants with different roles, and integrate diverse forms of interface into a single experience. The researchers argue that such a structure can be described in terms of three fundamental kinds of trajectory:

- Canonical Trajectory: The plan for the experience, expressed in different forms such as scripts, set designs, media and code designs, and stage management instructions. Multiple canonical trajectories may have branching and rejoining structures.

- Participant Trajectory: The actual path that a particular participant takes through a particular instance of the performance. It may diverge from the pre-planned canonical trajectories as the participant makes individual choices.

- Historic Trajectory: Documentation of the experience that supports reflection and retelling. Although it plays a vital role, it has often been neglected in mixed-reality performance.

Introducing trajectories leads us to some controversial questions. Does the notion of trajectories have wider relevance to other domains of HCI? Having studied and analysed different cultural experiences, such as visits to museums, galleries and theme parks or playing pervasive games, which share many of the characteristics of mixed-reality performance, the authors argue that it does. They suggest that HCI needs to consider how a user experience extends across many interface spaces, timescales and roles. Mixed-reality performances provide a concrete demonstration of trajectories, which can be seen as a glimpse of future user experience.