process: other people's work to investigate

project: theremin

Assignment: Create your own first patch and upload it to the wiki under your name

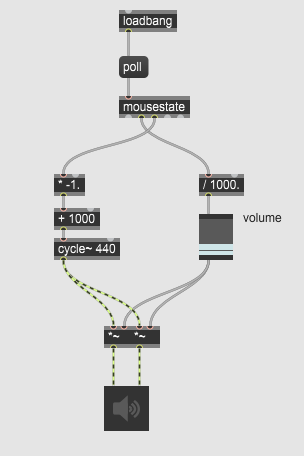

I built a simple theremin to get to know the workflow in Max. Move your mouse cursor around to control the pitch and volume.
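The mapping from mouse position to sound can be sketched outside Max. This is a minimal Python sketch, not the patch itself: the exponential pitch scale, the 110 to 1760 Hz range and the name `mouse_to_theremin` are assumptions chosen for illustration. An exponential scale means equal mouse distances correspond to equal musical intervals.

```python
def mouse_to_theremin(x, y, width, height, f_min=110.0, f_max=1760.0):
    """Map a mouse position inside a width x height area to (frequency_hz, amplitude).

    Assumptions (not taken from the patch): x controls pitch on an exponential
    scale between f_min and f_max; y controls amplitude linearly, with the
    top edge (y = 0) loudest, as in screen coordinates.
    """
    # Clamp to the area so positions outside it still give valid values.
    nx = min(max(x / width, 0.0), 1.0)
    ny = min(max(y / height, 0.0), 1.0)
    # Exponential pitch mapping: equal steps in x give equal frequency ratios.
    frequency = f_min * (f_max / f_min) ** nx
    amplitude = 1.0 - ny
    return frequency, amplitude


print(mouse_to_theremin(0, 0, 800, 600))      # (110.0, 1.0)
print(mouse_to_theremin(400, 300, 800, 600))  # (440.0, 0.5)
print(mouse_to_theremin(800, 600, 800, 600))  # (1760.0, 0.0)
```

Moving the cursor halfway across doubles the frequency twice (two octaves above 110 Hz), which is what the exponential scale is for.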

File:simple_mouse_theremin.maxpat

Objects used:

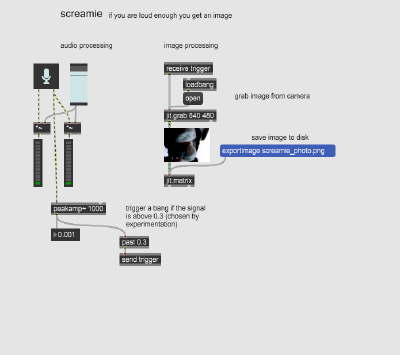

project: screamie

If you scream loud enough ... you will get a selfie.

This little art piece is a first shot, and also a snarky comment on social media and selfie culture.

File:2021-04-25_screamie_JS.maxpat

Objects used:

- levelmeter~ - to display the audio signal level

- peakamp~ - find peak in signal amplitude (of the last second)

- past - send a bang when a number is larger than a given value

- jit.grab - to get image from camera

- jit.matrix - to buffer the image and write to disk

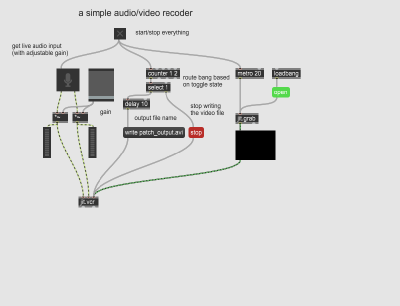
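The detection logic (a peak level compared against a threshold, firing only when the level crosses it) can be sketched outside Max. This is a minimal Python sketch under stated assumptions: `peak_amplitude` and `ScreamTrigger` are hypothetical names, the 0.8 threshold is illustrative, and the re-arming rule (fire again only after the level has dropped below the threshold) is a choice made for this sketch, not a description of how `past` behaves internally.

```python
def peak_amplitude(samples):
    """Largest absolute sample value in a block, similar in spirit to peakamp~."""
    return max((abs(s) for s in samples), default=0.0)


class ScreamTrigger:
    """Fire once when the peak level reaches a threshold; re-arm after it drops below.

    Assumed re-arming rule: one long scream yields one selfie, not one per window.
    """

    def __init__(self, threshold):
        self.threshold = threshold
        self.armed = True

    def update(self, peak):
        if self.armed and peak >= self.threshold:
            self.armed = False
            return True  # here the patch would grab a frame and write it to disk
        if peak < self.threshold:
            self.armed = True
        return False


trigger = ScreamTrigger(threshold=0.8)
# One peak value per measurement window (e.g. one second of audio).
peaks = [0.1, 0.3, 0.9, 0.95, 0.4, 0.85]
print([trigger.update(p) for p in peaks])  # [False, False, True, False, False, True]
```

The second loud window (0.95) does not fire because the level never dropped below the threshold in between.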

process: record and save audio and video data

This patch demonstrates how to get audio/video data and how to write it to disk.

File:2021-04-23_audio_video_recorder_JS.maxpat

Objects used:

- ezadc~ - to get audio input

- meter~ - to display (audio) signal levels

- metro - to get a steady pulse of bangs

- counter - to count bangs/toggles

- select - to detect certain values in number stream

- delay - to delay a bang (to give DSP processing a head start)

- jit.grab - to get images from camera

- jit.vcr - to write audio and video data to disk

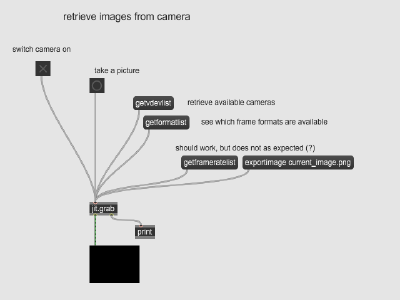
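The timing chain (a delay for the DSP head start, a metro pulse, a counter and a select that reacts to one specific count) can be sketched as an event schedule. The function name, parameter names and default values below are hypothetical, and the sketch assumes the recording stops once the counter reaches a chosen number of metro ticks; the patch may wire these objects differently.

```python
def recording_schedule(stop_after_ticks, head_start_ms=500, tick_ms=1000):
    """Return (time_ms, action) events for one timed recording run.

    Hypothetical parameters: head_start_ms plays the role of the delay object,
    tick_ms the metro interval, and stop_after_ticks the value select listens for.
    """
    if stop_after_ticks < 1:
        raise ValueError("stop_after_ticks must be at least 1")
    events = [(0, "start dsp")]
    start = head_start_ms  # delay: let audio processing run before recording starts
    events.append((start, "start recording"))
    for count in range(1, stop_after_ticks + 1):  # counter: one step per metro tick
        t = start + count * tick_ms
        if count == stop_after_ticks:  # select: react only to the target count
            events.append((t, "stop recording"))
    return events


for event in recording_schedule(3):
    print(event)
```

With the defaults, a three-tick recording starts at 500 ms and stops at 3500 ms, so the recorded file is about three seconds long.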

process: retrieve images from a camera

This simple patch allows you to grab (still) images from the camera.

File:2021-04-23_retrieve_images_from_camera_JS.maxpat

Objects used:

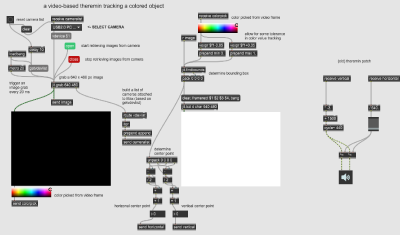

project: color based movement tracker

This movement tracker uses color as a marker for an object to follow. Hold the object in front of the camera and click on it to select an RGB value to track. The object's color needs to be distinctly different from the background for this simple approach to work. The patch yields both a bounding box and its center point for further processing.

The main trick is to overlay the video display area (jit.pwindow) with a color picker (suckah) to get the color. A drop-down menu is added for convenience to select the input camera (e.g. an external one vs. the built-in device). The rest is math.

File:2021-04-23_color_based_movement_tracker_JS.maxpat

Objects used:

- metro - steady pulse to grab images from camera

- jit.grab - actually grab an image from the camera upon a bang

- prepend - put a stored message in front of any input message

- umenu - a drop-down menu with selection

- jit.pwindow - a canvas to display the image from the camera

- suckah - pick a colour from the underlying part of the screen (yields RGBA as 4-tuple of floats (0.0 .. 1.0))

- swatch - display a colour (picked)

- vexpr - for (C-style) math operations with elements in lists

- jit.findbounds - find the bounds of all values within a given range in the video matrix

- jit.lcd - a canvas to draw on

- send & receive - avoid cable mess
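The math step (turn the picked color into a per-channel range, find all pixels inside it, then take their bounding box and its center) can be sketched in plain Python. This is a minimal sketch, not the patch: the `tolerance` parameter and the function names are hypothetical, and the frame is assumed to be a list of rows of RGB float triples in 0.0 .. 1.0, matching the range suckah reports (a Jitter char matrix would need scaling first).

```python
def color_bounds(rgb, tolerance):
    """Per-channel [min, max] range around a picked color (the vexpr step)."""
    lo = [max(c - tolerance, 0.0) for c in rgb]
    hi = [min(c + tolerance, 1.0) for c in rgb]
    return lo, hi


def track(frame, rgb, tolerance=0.1):
    """Bounding box and center of all pixels whose color lies in the range.

    frame: list of rows, each row a list of (r, g, b) float tuples.
    Returns ((x_min, y_min, x_max, y_max), (cx, cy)), or None if nothing matches.
    """
    lo, hi = color_bounds(rgb, tolerance)
    xs, ys = [], []
    for y, row in enumerate(frame):
        for x, pixel in enumerate(row):
            # A pixel matches only if every channel is inside its range.
            if all(l <= p <= h for p, l, h in zip(pixel, lo, hi)):
                xs.append(x)
                ys.append(y)
    if not xs:
        return None
    box = (min(xs), min(ys), max(xs), max(ys))
    center = ((box[0] + box[2]) / 2, (box[1] + box[3]) / 2)
    return box, center


BLACK = (0.0, 0.0, 0.0)
RED = (1.0, 0.0, 0.0)
frame = [[BLACK] * 4 for _ in range(4)]
for x, y in [(1, 1), (2, 1), (1, 2), (2, 2)]:
    frame[y][x] = RED
print(track(frame, rgb=(0.95, 0.05, 0.0)))  # ((1, 1, 2, 2), (1.5, 1.5))
```

The tolerance is the trade-off mentioned above: too small and lighting changes lose the object, too large and background pixels join the bounding box.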

project: video theremin

Assignment: Combine your first patch with sound/video conversion/analysis tools

This patch combines the previously developed color-based movement tracker with the first theremin.
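Connecting the two patches mainly means turning the tracker's center point (in matrix cells) into the 0 to 1 control range a theremin mapping can use. A minimal sketch, assuming x drives pitch and y drives volume as in the mouse version, and that a lost object (no matching pixels) should leave the sound unchanged; `center_to_control` is a hypothetical name.

```python
def center_to_control(center, frame_width, frame_height):
    """Normalize a tracked center point to (pitch_ctrl, volume_ctrl) in 0.0 .. 1.0.

    Assumptions: x drives pitch, y drives volume with row 0 (top) loudest,
    and center is None when the tracker found nothing.
    """
    if center is None:
        return None  # object lost: the caller keeps the previous sound settings
    cx, cy = center
    # Divide by the last valid index so the frame edges map exactly to 0 and 1.
    pitch_ctrl = min(max(cx / (frame_width - 1), 0.0), 1.0)
    volume_ctrl = 1.0 - min(max(cy / (frame_height - 1), 0.0), 1.0)
    return pitch_ctrl, volume_ctrl


print(center_to_control((319.5, 0), 640, 480))  # (0.5, 1.0)
```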

project: i see you and you see me

The project did not grow legs upon closer examination and was therefore not pursued further.

- in collaboration with Luise Krumbein

Video conference calls replaced in-person meetings on a large scale in a short amount of time. Most software tries to mimic a conversation but actually either forces people into the spotlight and into monologues, or pushes them off to the side into silence. One-to-one communication allows a user to concentrate on the (one) other person, but group meetings blur personalities and pigeonhole expression into a cookie-cutter shape of what is technically efficient and socially barely tolerable. The final project in this course seeks to explore different representations in synchronous online group communication.

To shift this perception, data will be gathered from multiple (ambient) sources. This means tapping into public data sets for broad context. It also means gathering hyper-local/personal data via an Arduino or similar microcontroller (probably an ESP8266) to sense the individual. These data streams will be combined to produce a visual output using Jitter. The result aims to represent the other participants in a way that emphasizes their personality rather than emulating the technicalities of face-to-face exchanges.

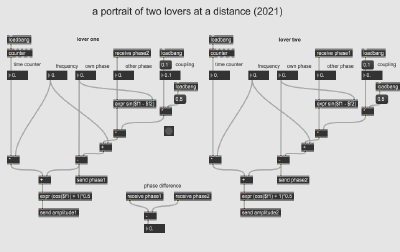

project: distance communication

Different implementations of the algorithm were tried in Max. None yielded a convergence of the phases of the oscillators. Therefore the project was cancelled.

The installation "distance communication" explores and reflects on (romantic) relationships at a time when partners are apart. Their lives no longer take place in the same environment and are open to different influences. It takes effort to stay in sync, even though the distance may be bridged by modern means of communication. Two blinking LEDs represent the partners, and the blinking rhythms represent their respective lives.

The technical setup consists of two LEDs driven by a microcontroller and sensed via a light-dependent resistor. A small computer (laptop or small-form-factor PC) runs both Max and video conferencing software to display "the other". The Max patch and "the other" are displayed on a screen or video projector. The Max patch tries to synchronize the two blinking patterns by leveraging the well-studied behaviour of fireflies. A microphone on the floor picks up ambient sound. If this audio level exceeds a given threshold, the blinking of one LED is triggered/reset to introduce a disturbance into the process. The installation may be presented with both parts in the same room or with one part off-site.

implementation notes

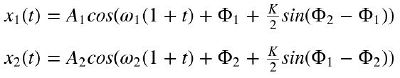

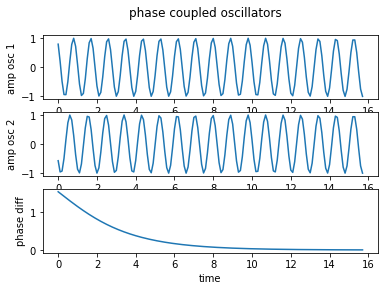

The firefly model turned out to be insufficient for the current setup due to the small number of agents. The interaction has been remodeled from first principles based on two harmonic oscillators and the Kuramoto–Daido model for phase locking.
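For two oscillators the model can be reduced by hand. The Kuramoto–Daido form couples each phase $\theta_i$ to the others through a periodic coupling function $\Gamma$; the reduction below assumes the classic Kuramoto choice $\Gamma = \sin$ and a coupling strength $K > 0$, which may differ from the coupling used in the installation.

$$\frac{d\theta_i}{dt} = \omega_i + \frac{K}{N}\sum_{j=1}^{N}\Gamma(\theta_j - \theta_i)$$

With $N = 2$ and $\Gamma = \sin$ (the $j = i$ term vanishes because $\sin 0 = 0$):

$$\frac{d\theta_1}{dt} = \omega_1 + \frac{K}{2}\sin(\theta_2 - \theta_1), \qquad \frac{d\theta_2}{dt} = \omega_2 + \frac{K}{2}\sin(\theta_1 - \theta_2)$$

Subtracting, with $\varphi = \theta_2 - \theta_1$ and $\Delta\omega = \omega_2 - \omega_1$:

$$\frac{d\varphi}{dt} = \Delta\omega - K\sin\varphi$$

Phase locking means $d\varphi/dt = 0$, i.e. $\sin\varphi^* = \Delta\omega / K$, which only has a solution if $|\Delta\omega| \le K$. The stable solution is the one with $\cos\varphi^* > 0$, i.e. $\varphi^* = \arcsin(\Delta\omega / K)$. If the natural frequencies differ by more than the coupling strength, the phase difference keeps drifting.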

Observing the equation system over time shows the expected behavior (when implemented in Python).
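A minimal Python sketch of such a simulation, integrating two sine-coupled phase oscillators with a plain Euler step. The frequencies, coupling strength, step size and function names are illustrative assumptions, not values from the installation. `locked_phase` returns the phase difference predicted analytically for two sine-coupled oscillators, arcsin(Δω / K) with Δω = ω2 − ω1, or `None` when |Δω| > K and no locking is possible, so the simulated result can be checked against it.

```python
import math


def simulate(omega1, omega2, coupling, dt=0.001, steps=20000, theta1=0.0, theta2=2.0):
    """Euler-integrate two sine-coupled phase oscillators (Kuramoto model, N = 2).

    Each oscillator is pulled toward the other with strength coupling / 2
    (the usual K / N normalization). Returns the final phase difference
    theta2 - theta1, wrapped to [-pi, pi].
    """
    for _ in range(steps):
        # Compute both derivatives before updating, so the step is simultaneous.
        d1 = omega1 + coupling / 2 * math.sin(theta2 - theta1)
        d2 = omega2 + coupling / 2 * math.sin(theta1 - theta2)
        theta1 += d1 * dt
        theta2 += d2 * dt
    diff = theta2 - theta1
    return math.atan2(math.sin(diff), math.cos(diff))


def locked_phase(omega1, omega2, coupling):
    """Stable locked phase difference arcsin(delta / K), or None if |delta| > K."""
    delta = omega2 - omega1
    if abs(delta) > coupling:
        return None  # frequencies too far apart: the phases drift forever
    return math.asin(delta / coupling)


w1 = 2 * math.pi * 1.0  # LED A: 1.0 blinks per second
w2 = 2 * math.pi * 1.1  # LED B: 1.1 blinks per second
print(simulate(w1, w2, coupling=2.0))      # approx. 0.32
print(locked_phase(w1, w2, coupling=2.0))  # approx. 0.32
print(locked_phase(w1, w2, coupling=0.5))  # None
```

With `coupling=0.5` the frequency gap (about 0.63 rad/s) exceeds the coupling strength, so no phase lock is predicted.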

Max implementation of the Kuramoto-Daido model

references

- Kuramoto, Yoshiki (1975). In H. Araki (ed.), International Symposium on Mathematical Problems in Theoretical Physics. Lecture Notes in Physics, vol. 39. Springer-Verlag, New York, p. 420.

- Buck, John (1988). Synchronous Rhythmic Flashing of Fireflies. The Quarterly Review of Biology, September 1988, 265–286.

- Carlson, A. D. & Copeland, J. (1985). Flash Communication in Fireflies. The Quarterly Review of Biology, December 1985, 415–433.

- Lloyd, J. E. (1966). Studies on the Flash Communication System in Photinus Fireflies.

- Ohba, Nobuyoshi. Flash Communication Systems of Japanese Fireflies.