My project this semester is to write a Max patch for an interactive sound installation prototype that I want to finish in the next few weeks (so time is short).

The main idea of the installation is that visitors don't need to know about it when they enter, but should realise that they can interact with the sound (and later maybe even lights and visual content) in the installation space.

This means I need a control device that doesn't require the visitor to touch or carry anything, such as a game controller or a head-tracking device. The installation should be "playable" hands-free.

A logical but kind of complicated idea would be to use an infrared camera that can track the movement of the visitor in the space. I'd like to use an infrared camera instead of a normal one so that tracking works even in total darkness, because I plan to add interactive visual content to the installation later (maybe next semester). Simply put, if the lights are off, a normal camera would be blind... A nice solution (as suggested by Leon) would be an Xbox 360 Kinect sensor, which can do just what I need.

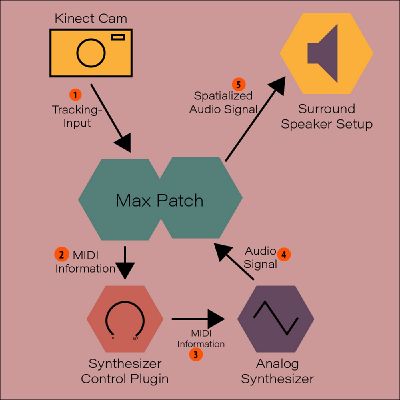

The tracking data would be fed into Max and converted (scaled) to MIDI information. I found a VST plugin that lets me send MIDI information to my analog synthesizer (Korg Minilogue), so the Max patch would send the MIDI data through the VST plugin to my synthesizer.
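To make the scaling step a bit more concrete, here is a minimal sketch in Python (not the actual Max patch, where a scaling object would do the same job). It assumes the tracking delivers a position in metres along one axis of the room; the 0 to 4 m range, the function names and the controller number 74 are placeholders I made up for illustration, not values taken from the Kinect or the Minilogue.

```python
def scale_to_midi(value, in_min, in_max):
    """Linearly map value from [in_min, in_max] onto the MIDI range 0..127.

    Values outside the input range are clamped, so a visitor stepping
    out of the tracked area never produces an invalid MIDI value.
    """
    if in_max == in_min:
        raise ValueError("input range must not be empty")
    normalized = (value - in_min) / (in_max - in_min)
    normalized = min(1.0, max(0.0, normalized))  # clamp to 0..1
    return round(normalized * 127)


def control_change(controller, value, channel=0):
    """Build the three raw bytes of a MIDI Control Change message."""
    status = 0xB0 | (channel & 0x0F)  # 0xB0 = Control Change, low nibble = channel
    return bytes([status, controller & 0x7F, value & 0x7F])


# Assumed example: visitor stands 1 m into a 4 m wide tracked area.
x_position = 1.0
cc_value = scale_to_midi(x_position, 0.0, 4.0)
message = control_change(74, cc_value)  # 74 is an arbitrary placeholder controller
```

In the real patch the resulting value would be passed on to the VST plugin instead of being packed into bytes by hand; the byte layout is only shown to make clear what kind of data ends up at the synthesizer.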

The audio signal generated by the synth would then be sent back to Max to be spatialised and output to the surround speaker setup.

To make this process a bit easier to follow, I've made this little sketch to show the order in which things happen and what each step is meant to do.

I plan to compose the music on the synth in the next few weeks, so that I can set up the prototype in my room by the end of the semester and document it by filming the process.