
== Motion ==

[[File:Keep Moving.jpg|800px]]

File: | |||

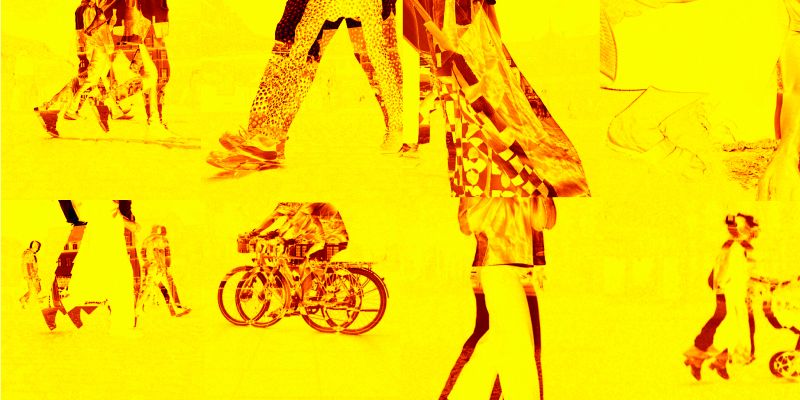

Real-time video manipulation, 3 min

Things that move trigger our attention and curiosity. We immediately perceive them as living matter. Anything that does not move at least now and then is quickly perceived as dead. How is our environment shaped? Is it alive, livable, boring? Are cars living organisms?

Video recordings of public places are made at various locations in Weimar using a laptop and its webcam. The video is fed into Max/MSP, which manipulates the recordings in real time. The setup filters out everything that does not move, so only things that do move become visible. The top-right section calculates the difference between consecutive frames of the video. Static objects cancel out in this difference, while changes in the video feed are highlighted. The bottom-left section takes snapshots, triggered by loud noises. The resulting video is displayed at the bottom right: a sequence of images assembled from the individual sound-triggered snapshots.
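The two mechanisms described above, frame differencing and a sound-triggered snapshot, can be sketched outside Max/MSP in a few lines of Python. This is only an illustration of the idea, not a transcription of the patch: the function names, the pixel threshold of 30 and the loudness level of 0.1 are assumptions chosen for the example.

```python
import math


def frame_difference(prev, curr, threshold=30):
    """Return a binary motion mask for two grayscale frames (0-255).

    A pixel is marked 1 when its brightness changed by more than
    `threshold` between the frames, otherwise 0.
    The threshold value is an assumption for this sketch.
    """
    return [
        [1 if abs(c - p) > threshold else 0 for p, c in zip(prev_row, curr_row)]
        for prev_row, curr_row in zip(prev, curr)
    ]


def rms(samples):
    """Root-mean-square level of a block of audio samples in [-1.0, 1.0]."""
    return math.sqrt(sum(s * s for s in samples) / len(samples))


def should_snapshot(samples, level=0.1):
    """Decide whether a block of audio is loud enough to take a snapshot.

    `level` is a hypothetical trigger level, not the value used in the patch.
    """
    return rms(samples) > level


# Two tiny 3x3 frames: only the centre pixel changes noticeably.
prev_frame = [[10, 10, 10], [10, 10, 10], [10, 10, 10]]
curr_frame = [[10, 12, 10], [10, 200, 10], [10, 10, 10]]
mask = frame_difference(prev_frame, curr_frame)

# A quiet and a loud block of audio samples.
quiet = [0.01, -0.02, 0.01, 0.0]
loud = [0.5, -0.6, 0.4, -0.5]
```

Static pixels produce a difference near zero and drop out of the mask, which is why only moving things remain visible. Lowering `level` makes the snapshot trigger more sensitive.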

{{#ev:vimeo|572240468}}

<gallery>
File:Theater.png
File:Ilmpark.png
File:Koriat.png
File:Wieland.png
</gallery>

----

'''Technical Solution'''

The patch is available here: [[:File:210616_Keep Moving.maxpat]]

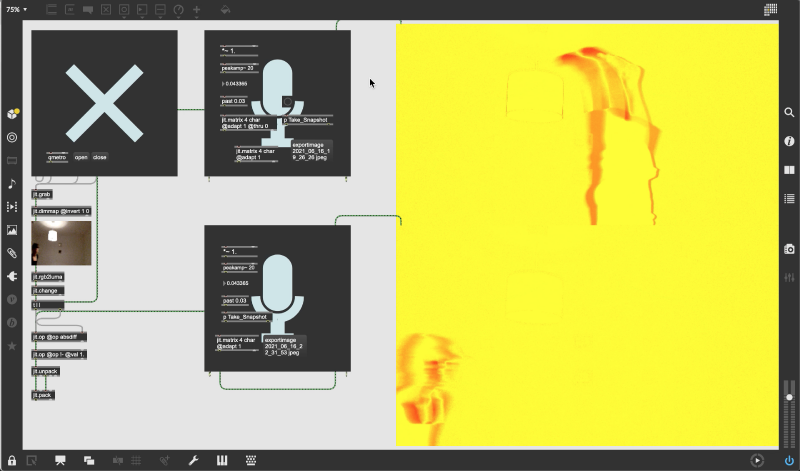

[[File:Screenshot 2021-06-17 at 00.05.59.png|800px]]

The lower display window shows the entire webcam video. The upper window shows the current snapshot until the next one is taken. For motion tracking, ''jit.rgb2luma'' is often used to identify a moving object. This object caught my attention. With ''jit.unpack'' and ''jit.pack'', the colors are changed to bright yellow for the background and bright red for the moving object. The microphone threshold for taking a snapshot is set very low. Even a big bite into an apple can set it off.
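The color treatment can be illustrated with a rough Python sketch: each pixel is reduced to a brightness value, compared with the previous frame, and then painted either yellow (unchanged) or red (changed). The Rec. 601 luma weights, the threshold of 30 and all names below are assumptions made for the example. The patch does this with Jitter objects, and its exact settings are not reproduced here.

```python
YELLOW = (255, 255, 0)  # background: pixels that did not change
RED = (255, 0, 0)       # moving object: pixels that changed


def luma(rgb):
    """Brightness of an (r, g, b) pixel using Rec. 601 weights (assumed)."""
    r, g, b = rgb
    return 0.299 * r + 0.587 * g + 0.114 * b


def colorize(prev, curr, threshold=30):
    """Paint changed pixels red and unchanged pixels yellow.

    `prev` and `curr` are frames given as rows of (r, g, b) tuples.
    The threshold is a hypothetical value for this sketch.
    """
    out = []
    for prev_row, curr_row in zip(prev, curr):
        out.append([
            RED if abs(luma(c) - luma(p)) > threshold else YELLOW
            for p, c in zip(prev_row, curr_row)
        ])
    return out


# One row, two pixels: the second pixel becomes much brighter.
prev_frame = [[(20, 20, 20), (20, 20, 20)]]
curr_frame = [[(20, 20, 20), (220, 220, 220)]]
result = colorize(prev_frame, curr_frame)
```

Working on a single brightness value instead of three color channels keeps the comparison simple. The original colors are discarded anyway once the image is remapped to two colors.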

----

'''Documentation'''

[[/Process/]]

[[/References/]]

[[/Tutorials/]]

Latest revision as of 20:24, 13 July 2021
