No edit summary |

No edit summary |

||

| Line 10: | Line 10: | ||

For this project I want to visualize some of that “hidden data”, creating a generative image in real time using today’s web technologies. This so generated image should simulate the phones camera view in a lo fi way. | For this project I want to visualize some of that “hidden data”, creating a generative image in real time using today’s web technologies. This so generated image should simulate the phones camera view in a lo fi way. | ||

[[File:awaresense_concept.jpg]] | |||

=input= | =input= | ||

| Line 18: | Line 20: | ||

* weather data | * weather data | ||

[[File:awaresense_input.jpg | [[File:awaresense_input.jpg] | ||

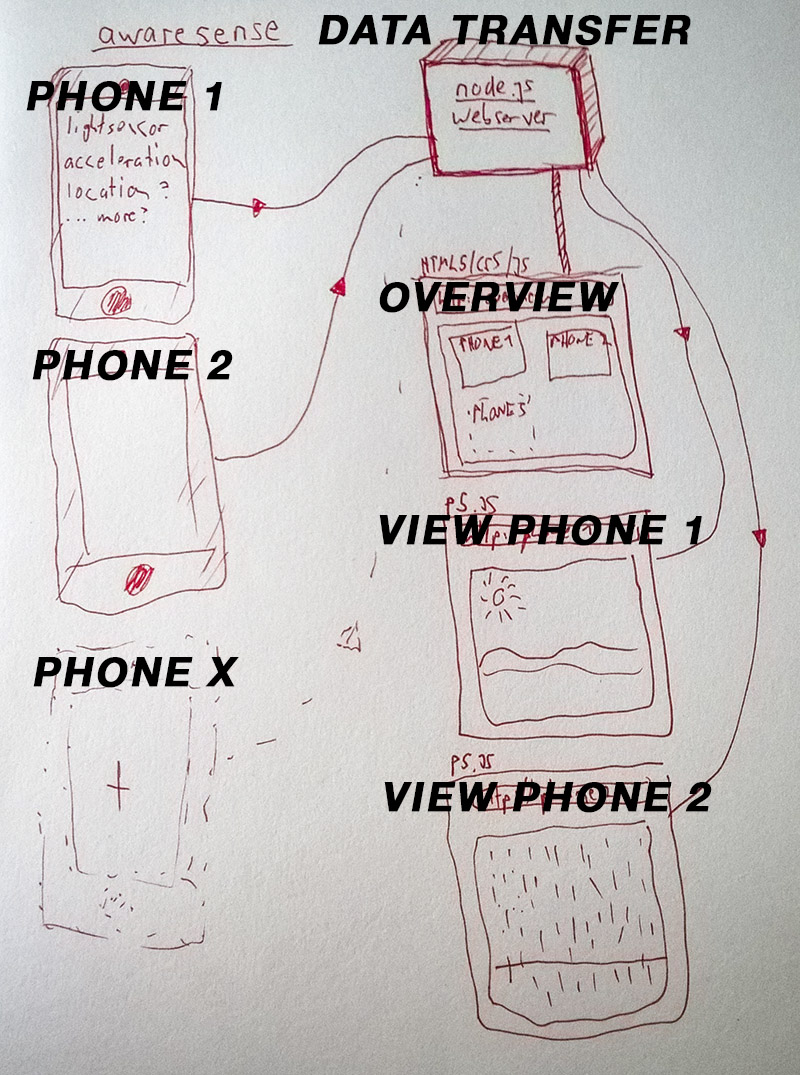

=data transfer= | =data transfer= | ||

Latest revision as of 09:32, 12 February 2018

awaresense

Jonas Obertüfer

Space is the Place: From Simulation to Hyperreality

Networked Interaction of Things

about

Our phones collect information about us and our surroundings: all the time, sometimes, or on request. To access some of that data, an app or website needs our explicit permission (e.g. GPS, microphone, camera), but other data can be fetched by the browser or by apps without us noticing.

For this project I want to visualize some of that “hidden data” by creating a generative image in real time using today’s web technologies. The generated image should simulate the phone’s camera view in a lo-fi way.

input

One or more mobile devices serve as input. On a simple one-page site, users can see what data their device sends. The following data is collected:

- acceleration

- light

- weather data

[[File:awaresense_input.jpg]]
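As a rough illustration of how one reading of the data listed above could be bundled on the phone, here is a minimal TypeScript sketch. The names `SensorSample`, `MotionLike`, `readAcceleration` and `buildSample`, as well as all field names, are assumptions made for this example and are not taken from the project. How the light value and the weather data are actually obtained is not described here, so they are simply passed in.

```typescript
// Hypothetical shape of one sample sent by a phone (field names are assumptions).
interface SensorSample {
  deviceId: string;
  timestamp: number; // milliseconds since the Unix epoch
  acceleration: { x: number; y: number; z: number } | null;
  light: number | null; // illuminance in lux, null if the device exposes none
  weather: { temperatureC: number; condition: string } | null;
}

// Structural view of the one DeviceMotionEvent property used below,
// so the function can also be called outside a browser.
interface MotionLike {
  accelerationIncludingGravity:
    | { x: number | null; y: number | null; z: number | null }
    | null;
}

// Returns the acceleration vector, or null if any axis is unavailable.
function readAcceleration(event: MotionLike): SensorSample["acceleration"] {
  const a = event.accelerationIncludingGravity;
  if (!a || a.x === null || a.y === null || a.z === null) {
    return null;
  }
  return { x: a.x, y: a.y, z: a.z };
}

// Bundles the current readings into one sample object.
function buildSample(
  deviceId: string,
  event: MotionLike,
  light: number | null,
  weather: SensorSample["weather"],
  now: number = Date.now()
): SensorSample {
  return {
    deviceId,
    timestamp: now,
    acceleration: readAcceleration(event),
    light,
    weather,
  };
}

// In a browser this could be wired to the standard "devicemotion" event:
// window.addEventListener("devicemotion", (e) => {
//   const sample = buildSample("phone-1", e, null, null);
// });
```

Keeping the browser wiring separate from `buildSample` means the same function can be exercised with hand-made readings, which is convenient when no phone is at hand.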

data transfer

Via the WebSocket protocol, the data from the mobile devices is transferred to the Node.js server in near real time.
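The transfer step might look roughly like the following TypeScript sketch. The wire format is not specified in this text, so the JSON envelope `WireMessage` and the helpers `encodeMessage`, `decodeMessage` and `sendMessage` are assumptions for illustration only. `SocketLike` covers just the two members of the standard WebSocket interface that the sketch relies on (`readyState` and `send`).

```typescript
// Assumed message envelope; the actual format used by the project is unknown.
interface WireMessage {
  deviceId: string;
  kind: "acceleration" | "light" | "weather";
  value: unknown;
  sentAt: number; // milliseconds since the Unix epoch
}

// The part of the WebSocket interface used here.
interface SocketLike {
  readyState: number;
  send(data: string): void;
}

const SOCKET_OPEN = 1; // same value as WebSocket.OPEN

// Client side: serialise a message to a JSON string.
function encodeMessage(msg: WireMessage): string {
  return JSON.stringify(msg);
}

// Server side: parse and validate an incoming string.
// Returns null for anything that is not a well-formed message.
function decodeMessage(raw: string): WireMessage | null {
  let parsed: unknown;
  try {
    parsed = JSON.parse(raw);
  } catch (err) {
    return null;
  }
  if (typeof parsed !== "object" || parsed === null) {
    return null;
  }
  const m = parsed as Record<string, unknown>;
  const kinds = ["acceleration", "light", "weather"];
  if (
    typeof m.deviceId !== "string" ||
    typeof m.sentAt !== "number" ||
    typeof m.kind !== "string" ||
    kinds.indexOf(m.kind) === -1
  ) {
    return null;
  }
  return {
    deviceId: m.deviceId,
    kind: m.kind as WireMessage["kind"],
    value: m.value,
    sentAt: m.sentAt,
  };
}

// Sends only while the connection is open; returns whether it was sent.
function sendMessage(socket: SocketLike, msg: WireMessage): boolean {
  if (socket.readyState !== SOCKET_OPEN) {
    return false;
  }
  socket.send(encodeMessage(msg));
  return true;
}
```

In the browser, an object created with `new WebSocket(url)` satisfies `SocketLike`, so it can be passed to `sendMessage` directly. Dropping messages while the socket is not open, instead of queuing them, is a design choice that suits a live visualization where stale readings have little value.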

output

The data from the input sources is sent to a server and visualised in two ways.