GMU:Tutorials/Performance Platform/Custom People Tracking

Latest revision as of 19:04, 31 July 2016

Introduction

This tutorial shows how to use an external webcam to get the x and y positions of people on the DBL platform. I use MaxMsp and the external library cv.jit to detect blobs from the pixel differences captured by the webcam, and then read the x, y values of those blobs, which are taken as the positions of the people.

Part 1 Install cv.jit library

cv.jit is a collection of max/msp/jitter tools for computer vision applications. The goals of this project are to provide externals and abstractions to assist users in tasks such as image segmentation, shape and gesture recognition, motion tracking, etc. as well as to provide educational tools that outline the basics of computer vision techniques.

First, download cv.jit. At the time of writing, the latest version of cv.jit is 1.8, which can be used with 64-bit versions of Max.

Here is the link: http://jmpelletier.com/cv-jit-is-now-compatible-with-64-bit-max/

Installation instructions are available here: http://jmpelletier.com/cvjit/

After downloading and unpacking the zip file, copy all the files in the extras folder and the help folder into the corresponding folders inside the C74 folder. Tip: to find the C74 folder on a Mac, go to Applications, right-click Max, choose Show Package Contents, and open the Resources folder; the C74 folder is inside. Finally, restart Max.

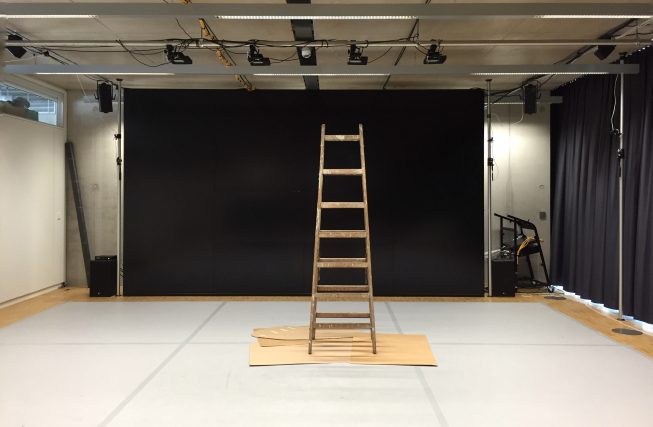

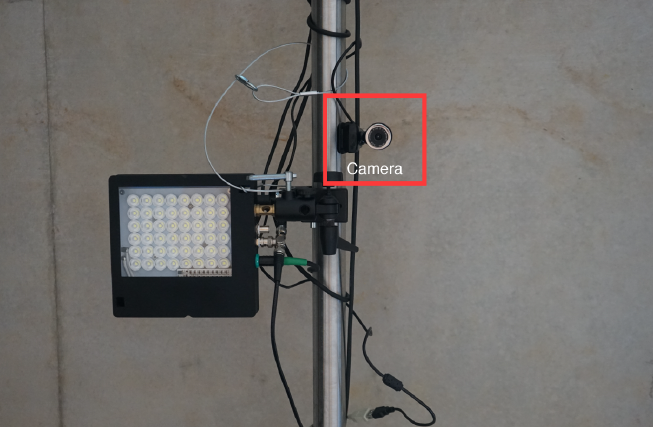

Part 2 Set up

Notice: If you use the ladder, please make sure to put several layers of cardboard below it, to prevent damage to the dance carpet. Please make sure that everything is securely fixed.

1. Set up the camera on the ceiling. You will probably need an extension cable to connect the camera to your laptop. In this tutorial, the camera is not a full-view camera and the height of the space is limited, so the field of view is fairly narrow. As an example, this tutorial shows how to get the x, y coordinates of one person on the stage.

2. The camera is mounted overhead because the platform is plain white, which makes it an ideal background for detecting pixel differences: any pixels that differ from the background can be detected as they enter the view. More importantly, compared with a view from the front or the back, a top view ensures that visitors on the platform occlude each other as little as possible.

3. Make sure to close the curtains (and windows) in the DBL, because a stable lighting environment is important for tracking.

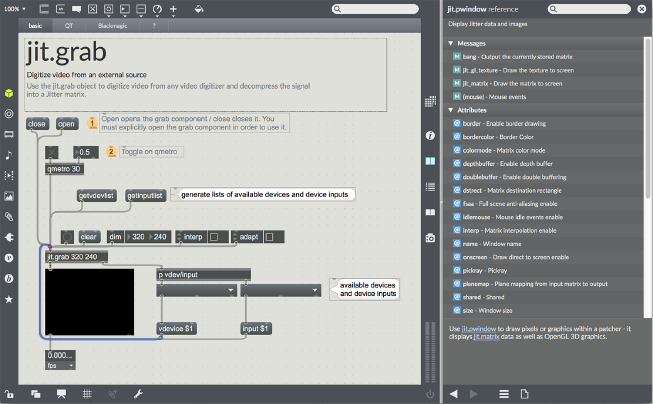

Part 3 Code testing

Use the jit.grab object to digitize video from any video digitizer and decompress the signal into a Jitter matrix. On OSX, QuickTime is used; on Windows, DirectX is used.

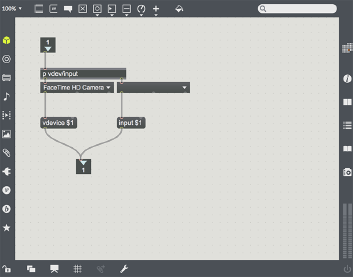

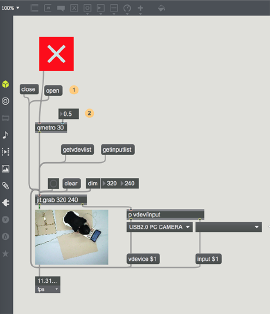

Step 1

Double-click "p vdev/input" to open the list of available webcams, and select the external webcam, "USB2.0 PC CAMERA", as the webcam input. The number after "input" on the right side indicates which webcam is selected: 0 is the default built-in webcam of your laptop, and 1, 2, 3, ... n refer to the external webcams.

Step 2

Then click the "open" button to switch on the red trigger button, which activates the webcam you are using.

Part 4 Detection

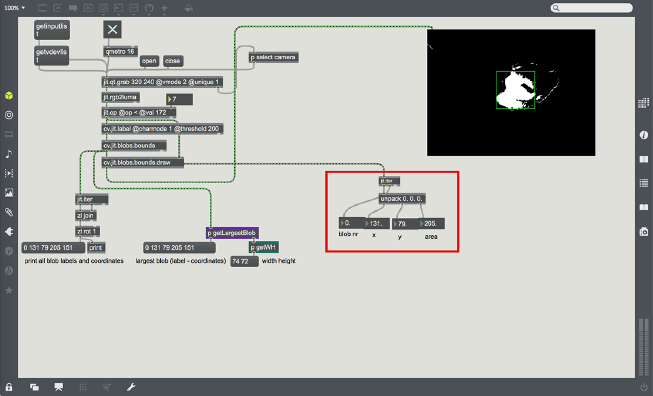

Capture: jit.rgb2luma

In the capture part, "jit.rgb2luma" reads the pixel input from the camera. This object converts the incoming colour image into a single-plane greyscale (luminance) image in which each pixel has a brightness value from 0 to 255.
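For readers who want to see the idea outside of Max, here is a minimal Python sketch of a colour-to-luminance conversion. It is not the jit.rgb2luma implementation: the Rec. 601 weights (0.299, 0.587, 0.114) are an assumption, and the function names `rgb_to_luma` and `frame_to_luma` are made up for this illustration.

```python
def rgb_to_luma(r, g, b):
    """Return an 8-bit brightness value for one RGB pixel.

    The Rec. 601 weights used here are an assumption for illustration,
    not necessarily the exact weights used by jit.rgb2luma.
    """
    luma = round(0.299 * r + 0.587 * g + 0.114 * b)
    return min(255, max(0, luma))  # clamp to the 0..255 range


def frame_to_luma(frame):
    """Convert a frame (rows of (r, g, b) tuples) to rows of brightness values."""
    return [[rgb_to_luma(*pixel) for pixel in row] for row in frame]
```

A white pixel maps to 255 and a black pixel to 0, so a dark head on the white platform ends up with a clearly lower value than the background.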

Image adjustment: jit.op @op < @val 172

You can use "jit.op @op < @val 172" to threshold the brightness of the image so that the blob positions are detected more accurately. The value 172 is a threshold that you can change to suit your own lighting environment: only the pixels whose brightness is below 172 are passed on as detected pixels. The integer box on the right side, which shows "7" in the picture, is the input for changing this value yourself.
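The thresholding step can be sketched in Python as follows. This is only an illustration of the "less than" comparison: the function name `threshold_below` is hypothetical, and writing 255 for a pixel that passes the test is an assumption about how the result is represented.

```python
def threshold_below(luma, val=172):
    """Mark every pixel darker than val.

    luma is a list of rows of brightness values (0..255). The result has
    255 where the pixel is below val and 0 elsewhere (assumed encoding).
    """
    return [[255 if pixel < val else 0 for pixel in row] for row in luma]
```

Lowering `val` keeps only darker pixels, which helps when shadows on the platform are being picked up; raising it helps when heads are not detected at all.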

Read images into pixels data

"cv.jit.label", "cv.jit.blobs.bound" and "cv.jit.blobs.bound.draw" work together as a group. These three objects process the pixel matrix produced by "jit.op @op < @val 172" in the previous step: they read connected pixels of similar brightness as blobs, classify each blob as one group, and finally mark each group with a rectangle. In this case, the blobs represent the heads of people seen from above.
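The grouping and bounding steps can be sketched in plain Python as below. This is not the cv.jit code: it is a simple flood fill that assumes 4-connectivity, and the function name `blob_bounds` is hypothetical. It returns one (x0, y0, x1, y1) rectangle per blob, in the order the blobs are found when scanning row by row.

```python
from collections import deque


def blob_bounds(mask):
    """Return a bounding box (x0, y0, x1, y1) for each blob in a binary mask.

    mask is a list of rows; any non-zero value counts as a detected pixel.
    Pixels are grouped with 4-connectivity (an assumption for this sketch).
    """
    height = len(mask)
    width = len(mask[0]) if height else 0
    seen = [[False] * width for _ in range(height)]
    boxes = []
    for y in range(height):
        for x in range(width):
            if not mask[y][x] or seen[y][x]:
                continue
            # Start a new blob and flood-fill all pixels connected to it.
            seen[y][x] = True
            queue = deque([(x, y)])
            x0 = x1 = x
            y0 = y1 = y
            while queue:
                cx, cy = queue.popleft()
                x0, x1 = min(x0, cx), max(x1, cx)
                y0, y1 = min(y0, cy), max(y1, cy)
                for nx, ny in ((cx + 1, cy), (cx - 1, cy), (cx, cy + 1), (cx, cy - 1)):
                    inside = 0 <= nx < width and 0 <= ny < height
                    if inside and mask[ny][nx] and not seen[ny][nx]:
                        seen[ny][nx] = True
                        queue.append((nx, ny))
            boxes.append((x0, y0, x1, y1))
    return boxes
```

In practice very small boxes are usually noise, so a real patch would likely ignore blobs below some minimum size.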

Read the position of blobs

The part of the patch at the bottom left outputs the blob data as position values on the x and y axes. There are five values for each blob, in this order: 1. the index of the captured blob, which is "0" in my case. 2. the x0 value of the blob. 3. the y0 value of the blob. 4. the x1 value of the blob. 5. the y1 value of the blob.

(x0, y0) and (x1, y1) are the positions of the top-left and bottom-right corners of the blob. Hence, the center point of the blob is ((x0+x1)/2, (y0+y1)/2). In my case it is ((131+205)/2, (79+151)/2) = (168, 115). Note that (x1-x0)/2 and (y1-y0)/2 give half of the blob's width and height, (37, 36) here, not its position.

The steps above explain how adjusting the image before reading it gives a more accurate value for the blob's position.
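The center of a bounding box is the midpoint of its two corners. As a small worked example in Python (the function name `blob_center` is made up for this illustration), using the corner values from this tutorial:

```python
def blob_center(x0, y0, x1, y1):
    """Return the midpoint of the box with corners (x0, y0) and (x1, y1)."""
    return ((x0 + x1) / 2, (y0 + y1) / 2)


# Corner values from this tutorial: top-left (131, 79), bottom-right (205, 151).
center = blob_center(131, 79, 205, 151)
print(center)  # (168.0, 115.0)
```

The result is in pixel coordinates of the camera image; mapping it to a position on the platform would need an extra scaling step that this tutorial does not cover.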

You can download the complete MaxMsp code here: <https://github.com/ConnorFk/MaxMspDBLPosition>

Now you can get the values of people's positions!

Thank you.