|

|

| (15 intermediate revisions by the same user not shown) |

| Line 1: |

Line 1: |

| This page documents my work and experiments done during the "Max and I, Max and Me" course. Feel free to copy, but give attribution where appropriate. | | This page documents my work and experiments done during the "Max and I, Max and Me" course. Feel free to copy, but give attribution where appropriate. |

|

| |

|

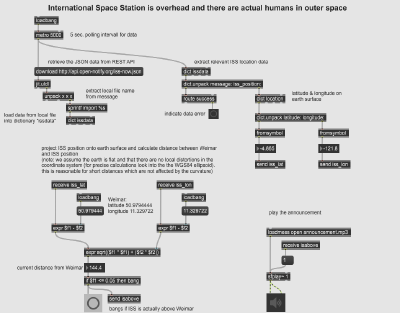

| = final project: ISS overhead = | | = ISS overhead = |

|

| |

|

| [[File:ISS_overhead.png|400px]] | | [[File:ISS_overhead.png|400px]] |

| Line 11: |

Line 11: |

| The technical setup consists of a computer running Max and some means of notification like a bell or a speaker. The current position of the International Space Station is calculated from its orbital data and related to the place of exhibition (e.g. Weimar). If the ISS is at a position that can be considered overhead, the notification is triggered. | | The technical setup consists of a computer running Max and some means of notification like a bell or a speaker. The current position of the International Space Station is calculated from its orbital data and related to the place of exhibition (e.g. Weimar). If the ISS is at a position that can be considered overhead, the notification is triggered. |

|

| |

|

| == implementation notes ==

| | = implementation notes = |

| The basic Max patch for tracking the ISS and sending a bang is done. | | The underlying Max patch for tracking the ISS and playing audio looks like this: |

| [[File:ISS_notifier_2021-05-19.png|400px]] | | [[File:ISS_overhead_patch.png|400px]] |

| | |

| | [[File:ISS_overhead_2021-06-14.maxpat|Max patch]] |

| | |

|

| |

|

| Processing TLE files and orbital data was sidestepped by relying on [http://open-notify.org/Open-Notify-API/ISS-Location-Now/ open-notify.org] and processing [https://json.org/ JSON] data. The distance (on earth) to the city center of Weimar (at 50.979444, 11.329722) is calculated using the Euclidean distance as a metric. To simplify the math it is assumed that the world is flat, and the [https://en.wikipedia.org/wiki/World_Geodetic_System#WGS84 WGS 84] reference system is ignored. This is acceptable for small distances, where the curvature of the earth does not introduce too much of an error. | | Processing TLE files and orbital data was sidestepped by relying on [http://open-notify.org/Open-Notify-API/ISS-Location-Now/ open-notify.org] and processing [https://json.org/ JSON] data. The distance (on earth) to the city center of Weimar (at 50.979444, 11.329722) is calculated using the Euclidean distance as a metric. To simplify the math it is assumed that the world is flat, and the [https://en.wikipedia.org/wiki/World_Geodetic_System#WGS84 WGS 84] reference system is ignored. This is acceptable for small distances, where the curvature of the earth does not introduce too much of an error. |
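The flat-earth distance check described above can be sketched in Python. This is only an illustration, not the Max patch itself: the function names, the sample payload values and the threshold of 5 degrees are assumptions. The JSON layout follows the open-notify "ISS Location Now" response, which delivers latitude and longitude as strings.

```python
import json
import math

# Weimar city center as given in the text (latitude, longitude in degrees).
WEIMAR = (50.979444, 11.329722)

# Hypothetical threshold in degrees; the value used in the patch is not documented here.
OVERHEAD_THRESHOLD_DEG = 5.0


def parse_iss_position(payload):
    """Extract (latitude, longitude) from an open-notify style JSON string."""
    data = json.loads(payload)
    position = data["iss_position"]
    # The coordinates arrive as strings, so convert them to floats.
    return float(position["latitude"]), float(position["longitude"])


def flat_distance_deg(a, b):
    """Euclidean distance between two (lat, lon) points, treating degrees as a flat grid."""
    return math.hypot(a[0] - b[0], a[1] - b[1])


def is_overhead(iss, home=WEIMAR, threshold=OVERHEAD_THRESHOLD_DEG):
    """True if the ISS ground position is within the threshold of the home location."""
    return flat_distance_deg(iss, home) <= threshold


# Made-up sample payload in the open-notify layout.
sample = ('{"message": "success", "timestamp": 1623672000, '
          '"iss_position": {"latitude": "52.5", "longitude": "13.4"}}')
iss = parse_iss_position(sample)
print(iss, flat_distance_deg(iss, WEIMAR), is_overhead(iss))
```

Note that this sketch measures distance in degrees. At the latitude of Weimar a degree of longitude is noticeably shorter than a degree of latitude, which is part of the error accepted by the flat-earth simplification.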

|

| |

|

| The Max patch was [https://docs.cycling74.com/max8/vignettes/standalone_building exported to a standalone application]. It is now run on a small computer board to reduce the complexity of the setup. | | The Max patch was [https://docs.cycling74.com/max8/vignettes/standalone_building exported to a standalone application]. It is now run on a small computer board to reduce the complexity of the setup. The data about the ISS crew is scraped from the internet using custom-written software. |

|

| |

|

| Lessons learned: | | Lessons learned: |

| Line 24: |

Line 27: |

| * school math turned out to be useful | | * school math turned out to be useful |

|

| |

|

| == references ==

| | = references = |

| * [https://en.wikipedia.org/wiki/International_Space_Station wikipedia: International Space Station] | | * [https://en.wikipedia.org/wiki/International_Space_Station wikipedia: International Space Station] |

| * [https://en.wikipedia.org/wiki/Two-line_element_set wikipedia: two-line element set (TLE)] | | * [https://en.wikipedia.org/wiki/Two-line_element_set wikipedia: two-line element set (TLE)] |

| * [https://nssdc.gsfc.nasa.gov/nmc/SpacecraftQuery.jsp NASA Space Science Data Coordinated Archive Master Catalog Search] | | * [https://nssdc.gsfc.nasa.gov/nmc/SpacecraftQuery.jsp NASA Space Science Data Coordinated Archive Master Catalog Search] |

| * [https://www.howmanypeopleareinspacerightnow.com/ how many people are in space right now?] | | * [https://www.howmanypeopleareinspacerightnow.com/ how many people are in space right now?] |

| | | * [[Max and I, Max and Me class notebook]] |

| = process: other people's work to investigate =

| |

| * [https://www.uni-weimar.de/kunst-und-gestaltung/wiki/GMU:Max_and_the_World/F.Z._Ayg%C3%BCler F.Z._Aygüler AN EYE TRACKING EXPERIMENT] | |

| * [https://www.uni-weimar.de/kunst-und-gestaltung/wiki/GMU:Max_and_the_World/WeiRu_Tai Does social distancing change the image of connections between friends, family and strangers? (includes patch walkthrough)]

| |

| * [https://www.uni-weimar.de/kunst-und-gestaltung/wiki/GMU:Max_and_the_World/Paulina_Magdalena_Chwala Paulina Magdalena Chwala (VR, OSC, Unity)]

| |

| | |

| = project: theremin =

| |

| Assignment: Please do your own first patch and upload onto the wiki under your name

| |

| | |

| I built a simple [https://en.wikipedia.org/wiki/Theremin Theremin] to get to know the workflow with Max. Move your mouse cursor around to control the pitch and volume. | |

| | |

| [[File:simple_mouse_theremin_screenshot.png|400px]]

| |

| | |

| [[:File:simple_mouse_theremin.maxpat]]

| |

| | |

| Objects used:

| |

| * [https://docs.cycling74.com/max8/refpages/loadbang loadbang]

| |

| * [https://docs.cycling74.com/max8/refpages/mousestate mousestate]

| |

| * [https://docs.cycling74.com/max8/refpages/cycle~ cycle~]

| |

| * [https://docs.cycling74.com/max8/refpages/slider slider]

| |

| * [https://docs.cycling74.com/max8/refpages/ezdac~ ezdac~]
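The idea of the mouse theremin, mapping the cursor position to pitch and volume, can be sketched in Python. The concrete mapping is an assumption and not taken from the patch: here the horizontal position sweeps the frequency exponentially between two hypothetical limits, and the vertical position sets the amplitude.

```python
def mouse_to_tone(x, y, width, height, f_min=220.0, f_max=880.0):
    """Map a mouse position to (frequency in Hz, amplitude 0.0..1.0).

    f_min and f_max are hypothetical limits chosen for illustration.
    """
    # Normalize the position to 0.0..1.0 and clamp it to the screen.
    fx = min(max(x / width, 0.0), 1.0)
    fy = min(max(y / height, 0.0), 1.0)
    # Exponential sweep, so equal mouse distances give equal musical intervals.
    frequency = f_min * (f_max / f_min) ** fx
    # Top of the screen is loud, bottom is silent.
    amplitude = 1.0 - fy
    return frequency, amplitude


print(mouse_to_tone(960, 540, 1920, 1080))
```

In the patch itself, mousestate would supply the coordinates and cycle~ would produce the tone; the sketch only covers the arithmetic in between.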

| |

| | |

| = process: retrieve images from a camera =

| |

| This simple patch allows you to grab (still) images from the camera.

| |

| | |

| [[File:retrieve_images_from_camera.png|400px]]

| |

| [[:File:2021-04-23_retrieve_images_from_camera_JS.maxpat]]

| |

| | |

| Objects used:

| |

| * [https://docs.cycling74.com/max8/refpages/jit.grab jit.grab]

| |

| | |

| = project: screamie =

| |

| If you scream loud enough ... you will get a selfie.

| |

| | |

| [[File:screamie_photo.png|400px]]

| |

| | |

| This little piece is a first attempt. It is also a snarky comment on social media and selfie culture.

| |

| [[File:screamie.png|400px]]

| |

| | |

| [[:File:2021-04-25_screamie_JS.maxpat]]

| |

| | |

| Objects used:

| |

| * [https://docs.cycling74.com/max8/refpages/levelmeter~ levelmeter~] - to display the audio signal level

| |

| * [https://docs.cycling74.com/max8/refpages/peakamp~ peakamp~] - find peak in signal amplitude (of the last second)

| |

| * [https://docs.cycling74.com/max8/refpages/past past] - send a bang when a number is larger than a given value

| |

| * [https://docs.cycling74.com/max8/refpages/jit.grab jit.grab] - to get image from camera

| |

| * [https://docs.cycling74.com/max8/refpages/jit.matrix jit.matrix] - to buffer the image and write to disk
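The trigger logic behind the object list above (measure a peak level, fire once when it rises past a threshold) can be sketched in Python. This is loosely modelled on the roles of peakamp~ and past and is not a description of their exact behavior; the class name, the threshold of 0.8 and the sample windows are assumptions.

```python
def peak_amplitude(samples):
    """Largest absolute sample value in a window (0.0 for an empty window)."""
    return max((abs(s) for s in samples), default=0.0)


class RisingThreshold:
    """Fire once each time a value rises to or above the threshold."""

    def __init__(self, threshold):
        self.threshold = threshold
        self.armed = True  # ready to fire until the threshold is crossed

    def update(self, value):
        if value >= self.threshold:
            fired = self.armed
            self.armed = False  # stay quiet until the value drops again
            return fired
        self.armed = True
        return False


# Made-up audio windows: quiet, scream, still screaming, quiet, scream again.
windows = [[0.1, -0.2], [0.5, -0.9], [0.95, 0.1], [0.2, 0.0], [-0.85]]
trigger = RisingThreshold(0.8)
results = [trigger.update(peak_amplitude(w)) for w in windows]
print(results)
```

Each True in the result would correspond to one bang that asks the camera for a selfie. Re-arming only after the level drops avoids taking a burst of photos during one long scream.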

| |

| | |

| = process: record and save audio and video data =

| |

| This patch demonstrates how to get audio/video data and how to write it to disk.

| |

| [[File:audio_video_recorder.png|400px]]

| |

| | |

| [[:File:2021-04-23_audio_video_recorder_JS.maxpat]]

| |

| | |

| Objects used:

| |

| * [https://docs.cycling74.com/max8/refpages/ezadc~ ezadc~] - to get audio input

| |

| * [https://docs.cycling74.com/max8/refpages/meter~ meter~] - to display (audio) signal levels

| |

| * [https://docs.cycling74.com/max8/refpages/metro metro] - to get a steady pulse of bangs

| |

| * [https://docs.cycling74.com/max8/refpages/counter counter] - to count bangs/toggles

| |

| * [https://docs.cycling74.com/max8/refpages/select select] - to detect certain values in number stream

| |

| * [https://docs.cycling74.com/max8/refpages/delay delay] - to delay a bang (to give DSP processing a head start)

| |

| * [https://docs.cycling74.com/max8/refpages/jit.grab jit.grab] - to get images from camera

| |

| * [https://docs.cycling74.com/max8/refpages/jit.vcr jit.vcr] - to write audio and video data to disk

| |

| | |

| = project: color based movement tracker =

| |

| This movement tracker uses color as a marker for an object to follow. Hold the object in front of the camera and click on it to select an RGB value to track. The object color needs to be distinctly different from the background for this simple approach to work. The patch yields both a bounding box and its center point for further processing.

| |

| | |

| The main trick is to overlay the video display area (jit.pwindow) with a color picker (suckah) to get the color. A drop-down menu is added as a convenience to select the input camera (e.g. an external one vs. the built-in device).

| |

| The rest is math.

| |

| | |

| [[File:color_based_movement_tracker.png|400px]]

| |

| | |

| [[:File:2021-04-23_color_based_movement_tracker_JS.maxpat]]

| |

| | |

| The following objects are used

| |

| * [https://docs.cycling74.com/max8/refpages/metro metro] - steady pulse to grab images from camera

| |

| * [https://docs.cycling74.com/max8/refpages/jit.grab jit.grab] - actually grab an image from the camera upon a bang

| |

| * [https://docs.cycling74.com/max8/refpages/prepend prepend] - put a stored message in front of any input message

| |

| * [https://docs.cycling74.com/max8/refpages/umenu umenu] - a drop-down menu with selection

| |

| * [https://docs.cycling74.com/max8/refpages/jit.pwindow jit.pwindow] - a canvas to display the image from the camera

| |

| * [https://docs.cycling74.com/max8/refpages/suckah suckah] - pick a colour from the underlying part of the screen (yields RGBA as 4-tuple of floats (0.0 .. 1.0))

| |

| * [https://docs.cycling74.com/max8/refpages/swatch swatch] - display a colour (picked)

| |

| * [https://docs.cycling74.com/max8/refpages/vexpr vexpr] - for (C-style) math operations with elements in lists

| |

| * [https://docs.cycling74.com/max8/refpages/jit.findbounds findbounds] - search values in a given range in the video matrix

| |

| * [https://docs.cycling74.com/max8/refpages/jit.lcd jit.lcd] - a canvas to draw on

| |

| * [https://docs.cycling74.com/max8/refpages/send send] & [https://docs.cycling74.com/max8/refpages/receive receive] - avoid cable mess
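The math behind the tracker (build a color range around the picked value, find all pixels inside it, report the bounding box and its center) can be sketched in plain Python. This is an illustration under assumptions, not the patch: the image is a nested list of RGB tuples with values from 0.0 to 1.0, and the function name and the tolerance parameter are hypothetical.

```python
def find_bounds(image, target, tolerance):
    """Return ((left, top, right, bottom), (cx, cy)) of pixels near the target color.

    image: list of rows, each row a list of (r, g, b) floats in 0.0..1.0.
    Returns None if no pixel falls inside the color range.
    """
    # Lower and upper limit per channel around the picked color.
    lower = [c - tolerance for c in target]
    upper = [c + tolerance for c in target]
    xs, ys = [], []
    for y, row in enumerate(image):
        for x, pixel in enumerate(row):
            if all(lo <= p <= hi for lo, p, hi in zip(lower, pixel, upper)):
                xs.append(x)
                ys.append(y)
    if not xs:
        return None
    left, top, right, bottom = min(xs), min(ys), max(xs), max(ys)
    center = ((left + right) / 2, (top + bottom) / 2)
    return (left, top, right, bottom), center


# Tiny test image: black background with a 2x2 reddish object.
BLACK = (0.0, 0.0, 0.0)
RED = (0.95, 0.05, 0.0)
image = [[BLACK] * 5 for _ in range(4)]
for y in (1, 2):
    for x in (2, 3):
        image[y][x] = RED

print(find_bounds(image, (1.0, 0.0, 0.0), 0.1))
```

The sketch also shows why the object color must differ clearly from the background: any background pixel inside the range would stretch the bounding box.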

| |

| | |

| = project: video theremin =

| |

| Assignment: Combine your first patch with sound/video conversion/analysis tools

| |

| | |

| This patch combines the previously developed color-based movement tracker with the first theremin.

| |

| | |

| [[File:video_theremin.png|400px]]

| |

| [[:File:2021-04-23_video_theremin_JS.maxpat]]

| |

| | |

| = project: i see you and you see me =

| |

| | |

| ''The project did not grow legs upon more detailed examination. It was therefore not pursued further.''

| |

| | |

| * in collaboration with [[Luise Krumbein]]

| |

| | |

| Video conference calls replaced in-person meetings on a large scale in a short amount of time. Most software tries to mimic a conversation but actually either forces people into the spotlight and into monologues or off to the side into silence. One-to-one communication allows a user to concentrate on the (one) other, but group meetings blur the personalities and pigeonhole expression into a cookie-cutter shape of what is technically efficient and socially barely tolerable. The final project in this course seeks to explore different representations in synchronous online group communication.

| |

| | |

| To shift the perception, data will be gathered from multiple (ambient) sources. This means tapping into public data sets for broad context. It also means gathering hyper-local/personal data via an Arduino or similar microcontroller (probably ESP8266) for sensing the individual. These data streams will be combined to produce a visual output using Jitter. The result aims to represent the other humans in a manner that emphasizes personality rather than emulating the technicalities of face-to-face exchanges.

| |

| | |

| = project: distance communication =

| |

| | |

| ''Different implementations of the algorithm were tried in Max. None yielded a convergence of the phases of the oscillators. Therefore the project was cancelled.''

| |

| | |

| The installation "distance communication" explores and reflects on (romantic) relationships at a time where partners are apart. Their lives don't take place in the same environment anymore and are open to different influences. It takes effort to stay in sync even though a distance may be bridged by modern communication means. Two blinking LEDs represent the partners, the blinking rhythm their respective lives.

| |

| | |

| The technical setup consists of two LEDs driven by a microcontroller and sensed via a light-dependent resistor. A small computer (laptop or small form factor PC) runs both Max and a video conference software to display "the other". The Max patch and the "other" are displayed on a screen or video projector. The Max patch tries to synchronize the respective blinking patterns by leveraging the well-studied behaviour of fireflies. A microphone on the floor picks up ambient sound. If this audio level exceeds a given threshold, the blinking of one LED is triggered or reset to introduce a disturbance into the process. The installation may be presented with both parts in the same room or with one part off-site somewhere else.

| |

| | |

| == implementation notes ==

| |

| The firefly model turned out to be insufficient for the current setup due to the small number of agents. The interaction has been remodeled from first principles based on two harmonic oscillators and the Kuramoto–Daido model for phase locking.

| |

| | |

| [[File:lover_equations_2021-05-24.jpg|400px]]

| |

| | |

| Observing the equation system over time shows the expected behavior (when implemented in Python).
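A minimal Python sketch of two coupled phase oscillators illustrates the expected phase locking. The equations of this project are only available as an image, so the sketch assumes the textbook Kuramoto form for two oscillators; it is not the original Python code. The function name, the parameter values and the simple Euler integration are assumptions.

```python
import math


def simulate_two_oscillators(omega1, omega2, coupling,
                             theta1=0.0, theta2=2.0, dt=0.01, steps=5000):
    """Euler-integrate two Kuramoto oscillators; return the final phase difference.

    Assumed model: d(theta_i)/dt = omega_i + (K / 2) * sin(theta_j - theta_i)
    """
    for _ in range(steps):
        # Each oscillator is pulled towards the phase of the other one.
        d1 = omega1 + 0.5 * coupling * math.sin(theta2 - theta1)
        d2 = omega2 + 0.5 * coupling * math.sin(theta1 - theta2)
        theta1 += d1 * dt
        theta2 += d2 * dt
    diff = theta2 - theta1
    # Wrap the phase difference into the range -pi..pi.
    return math.atan2(math.sin(diff), math.cos(diff))


# Slightly different natural frequencies, coupling strong enough to lock.
locked = simulate_two_oscillators(omega1=1.0, omega2=1.2, coupling=1.0)
print(locked, math.asin(0.2))
```

In this form the phase difference obeys d(phi)/dt = (omega2 - omega1) - K * sin(phi), so the oscillators can lock only if the frequency difference does not exceed the coupling K. They then settle at a constant offset of asin((omega2 - omega1) / K) instead of drifting apart.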

| |

| | |

| [[File:lover_oscillators_2021-05-24.png|400px]]

| |

| | |

| Max implementation of the Kuramoto-Daido model

| |

| | |

| [[File:portrait_of_two_lovers_at_a_distance_2021-05-31.png|400px]]

| |

| | |

| == references ==

| |

| * [https://www.springer.com/gp/book/9783540071747 Kuramoto, Yoshiki (1975). H. Araki (ed.). Lecture Notes in Physics, International Symposium on Mathematical Problems in Theoretical Physics. 39. Springer-Verlag, New York. p. 420.]

| |

| * <s>[https://www.jstor.org/stable/2808377 Buck, John. (1988). Synchronous Rhythmic Flashing of Fireflies. The Quarterly Review of Biology, September 1988, 265 - 286.]</s>

| |

| * <s>[https://www.journals.uchicago.edu/doi/10.1086/414564 Carlson, A.D. & Copeland, J. (1985). Flash Communication in Fireflies. The Quarterly Review of Biology, December 1985, 415 - 433.]</s>

| |

| * <s>[https://deepblue.lib.umich.edu/handle/2027.42/56374 Lloyd, J. E. Studies on the flash communication system in Photinus fireflies 1966] </s>

| |

| * <s>[https://academic.oup.com/icb/article/44/3/225/600910 Nobuyoshi Ohba: Flash Communication Systems of Japanese Fireflies]</s>

| |