Revision as of 20:33, 31 March 2013 by Li lingling

Touch Sensor

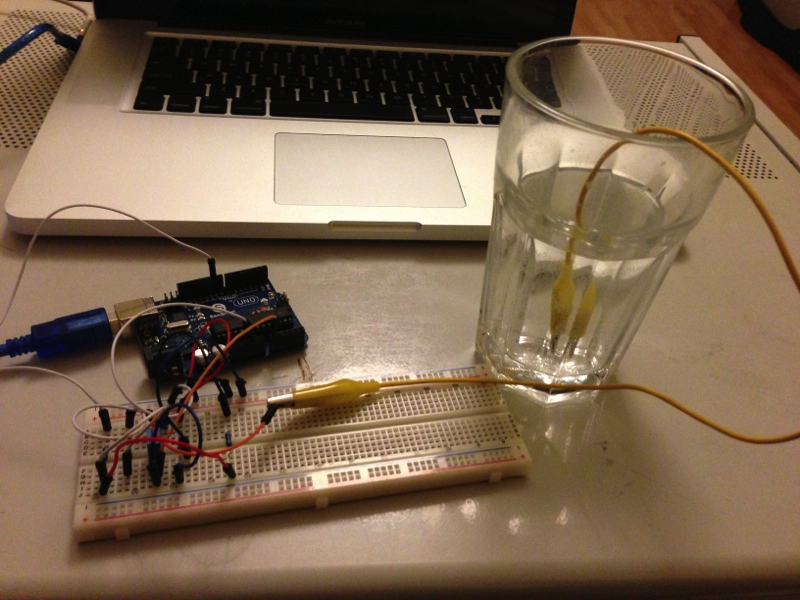

My project explores the interaction between objects and sound. I connect an object to an Arduino and turn the Arduino into a touch sensor. The sensor data is first collected in Processing and then sent to Pure Data via OSC.

Description

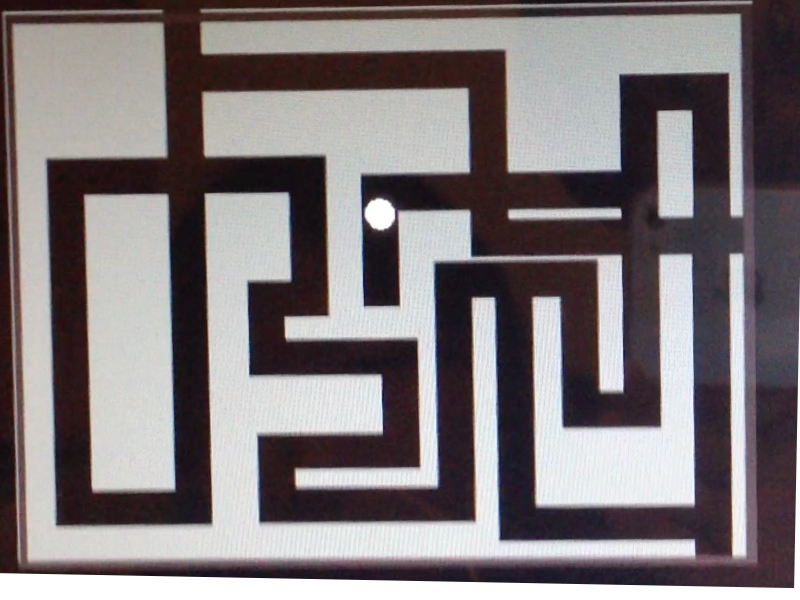

My concept comes from an online project called "Touche for Arduino: Advanced touch sensing". In my project I combine Processing and Pure Data, using Processing as the intermediary that passes data from the Arduino to Pure Data. In Processing, the data from the Arduino is classified into gestures. In Pure Data, I then use the classified data to trigger different actions: I create a Gem object and move it according to the data. In the end it works like a "maze" game.
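The Pure Data patch itself is not shown on this page, but the game logic it implies can be sketched in Python: each incoming gesture number moves the Gem one cell in a grid maze, using the number-to-direction mapping given under Working Process (0 left, 1 up, 2 right, 3 down), and a move into a wall is ignored. This is only an illustration under assumptions: the maze layout, the grid coordinates and the `step`/`reached_exit` helpers are hypothetical and are not taken from the author's patch.

```python
# Direction for each gesture number, following the Pure Data mapping on
# this page: 0 = left, 1 = up, 2 = right, 3 = down.
# Offsets are (d_col, d_row) on a grid whose row 0 is the top row.
MOVES = {0: (-1, 0), 1: (0, -1), 2: (1, 0), 3: (0, 1)}

# Hypothetical maze: '#' is a wall, '.' is free space, 'E' is the exit.
# The outer wall keeps every move inside the grid.
MAZE = [
    "#####",
    "#..E#",
    "#.###",
    "#...#",
    "#####",
]


def step(pos, gesture, maze=MAZE):
    """Return the new (col, row) after one gesture; stay put if blocked."""
    d_col, d_row = MOVES[gesture]
    col, row = pos[0] + d_col, pos[1] + d_row
    if maze[row][col] == "#":
        return pos
    return (col, row)


def reached_exit(pos, maze=MAZE):
    """True if the Gem stands on the exit cell."""
    return maze[pos[1]][pos[0]] == "E"


# Start bottom-left, then: up, up, right, right.
pos = (1, 3)
for gesture in [1, 1, 2, 2]:
    pos = step(pos, gesture)
print(pos, reached_exit(pos))  # (3, 1) True
```

In a Gem patch the resulting cell would still have to be converted into a screen position for the Gem object; that scaling is left out here.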

Working Process

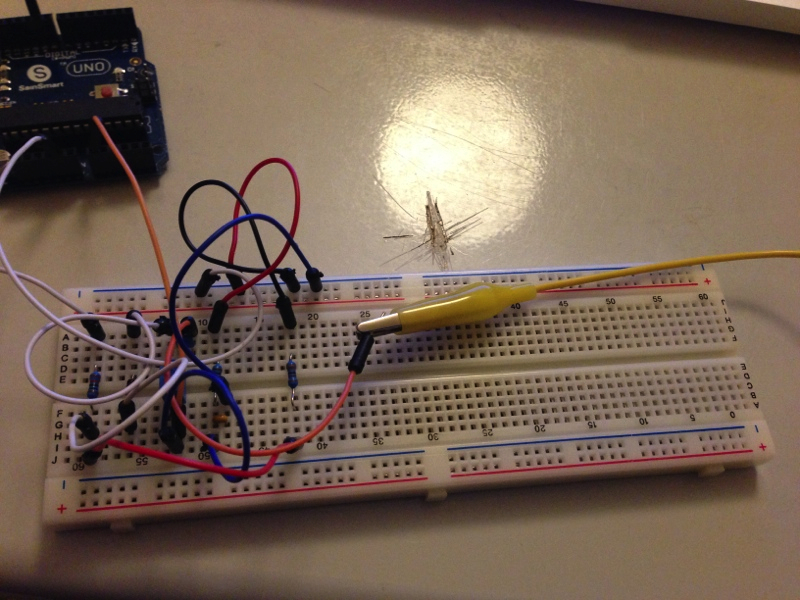

First, I built a circuit on a breadboard myself to turn the Arduino into a touch sensor.

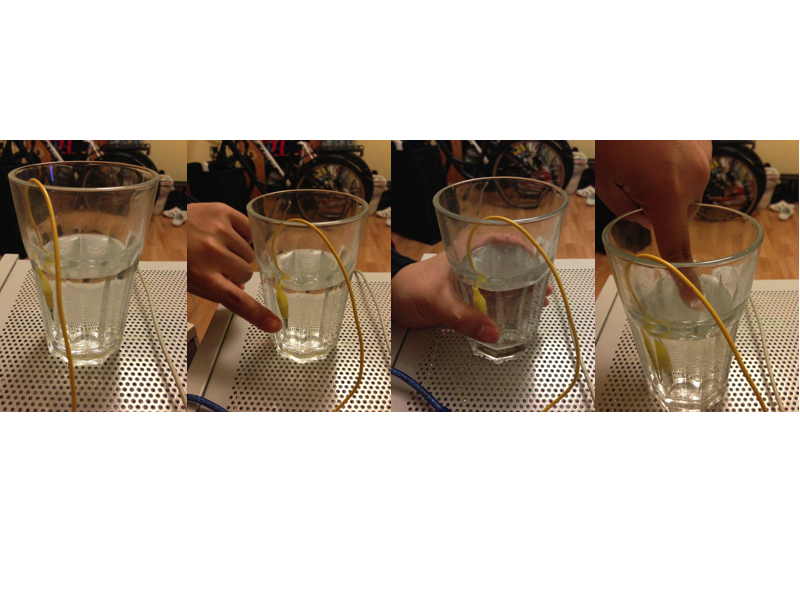

When I touch the "Touch Sensor", Processing receives different data from the Arduino, and from this data I define different gestures. In my case there are four:

Gesture 1: No touching.

Gesture 2: One finger touching.

Gesture 3: Grab.

Gesture 4: Put the finger in the water.
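The page does not say how Processing decides which of the four gestures a reading belongs to. One common approach, shown here only as a sketch under assumptions, is nearest-neighbour matching: record one example curve per gesture, then label each new reading with the gesture whose example is closest. The curve format (a list of numbers per reading), the reference values and the `classify` helper are hypothetical; the returned index follows the numbering used later on this page (Gesture 1 is 0 through Gesture 4 is 3). `math.dist` needs Python 3.8 or later.

```python
import math

# Gesture names in the order used on this page; the list index is the
# number Processing stores (Gesture 1 -> 0 ... Gesture 4 -> 3).
GESTURES = ["No touching", "One finger touching", "Grab", "Finger in the water"]


def classify(curve, references):
    """Return the index of the reference curve closest to `curve`.

    `references` holds one recorded example curve per gesture, in the same
    order as GESTURES. Distance is Euclidean (math.dist), so all curves
    must have the same length. Ties go to the lower index.
    """
    return min(range(len(references)),
               key=lambda i: math.dist(curve, references[i]))


# Hypothetical reference curves, one per gesture (made-up values).
references = [
    [0, 0, 0, 0],
    [1, 3, 2, 1],
    [2, 6, 5, 2],
    [4, 9, 9, 4],
]

reading = [1, 4, 2, 1]
g = classify(reading, references)
print(g, GESTURES[g])  # 1 One finger touching
```

In practice the reference curves would be recorded from the sensor itself, and each curve would have as many values as the Arduino reports per reading.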

Because I want to use these gestures to control the Gem object in Pure Data, I named them "Go right", "Go up", "Go left" and "Go down".

<videoflash type="vimeo">63046775</videoflash>

In Processing, the gesture data is stored as numbers:

Gesture 1 is "0".

Gesture 2 is "1".

Gesture 3 is "2".

Gesture 4 is "3".

I then send the gesture data to Pure Data via OSC. In Pure Data, each number is mapped to a different movement:

"0" is "Go left".

"1" is "Go up".

"2" is "Go right".

"3" is "Go down".
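OSC messages are small binary packets, so the sending side can be sketched without any library. The following builds an OSC 1.0 message carrying one int32 argument (the gesture number) and sends it over UDP. The address `/gesture`, the host and port 9001 are hypothetical; the page does not say which OSC library or address the author used in Processing. On the Pure Data side, recent Pd vanilla (0.46 and later) can receive such packets, for example with [netreceive -u -b 9001] followed by [oscparse].

```python
import socket
import struct


def osc_string(s):
    """Encode an OSC-string: ASCII bytes plus a null, padded to 4 bytes."""
    data = s.encode("ascii") + b"\x00"
    data += b"\x00" * (-len(data) % 4)
    return data


def osc_int_message(address, value):
    """Build an OSC 1.0 message with a single big-endian int32 argument."""
    return osc_string(address) + osc_string(",i") + struct.pack(">i", value)


def send_gesture(gesture, host="127.0.0.1", port=9001):
    """Send a gesture number (0-3) to Pure Data over UDP (hypothetical port)."""
    packet = osc_int_message("/gesture", gesture)
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(packet, (host, port))
```

For example, `send_gesture(2)` would send the number for Gesture 3 (Grab), which Pure Data maps to "Go right". Sending only when the gesture changes, rather than on every reading, would keep the message rate low.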

<videoflash type="vimeo">63048325</videoflash>