GMU:Dataflow I WS12/Li Lingling


== Touch Sensor ==

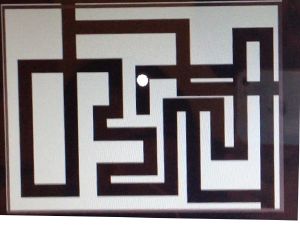

[[File:touch sensor3.jpg|thumb|Maze]]

I work on the interaction between object and sound. I connect an object to an Arduino and turn the Arduino into a touch sensor.

First, the sensor data is collected in Processing. The message is then sent to Pure Data through OSC.
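The last hop of this pipeline, Processing to Pure Data over OSC, can be illustrated with a minimal sketch of how one gesture number might be packed into an OSC 1.0 message and sent over UDP. It is written in Python for brevity rather than in Processing, and the address `/gesture`, the host and the port `9001` are assumptions for illustration, not values taken from the project files.

```python
import socket
import struct


def osc_string(text: str) -> bytes:
    """Encode an OSC string: ASCII bytes, NUL-terminated, padded to a multiple of 4 bytes."""
    data = text.encode("ascii") + b"\x00"
    return data + b"\x00" * (-len(data) % 4)


def osc_int_message(address: str, value: int) -> bytes:
    """Build an OSC message with one big-endian int32 argument (type tag string ",i")."""
    return osc_string(address) + osc_string(",i") + struct.pack(">i", value)


def send_gesture(gesture: int, host: str = "127.0.0.1", port: int = 9001) -> None:
    """Send one gesture number as a UDP datagram; the address, host and port are assumed."""
    packet = osc_int_message("/gesture", gesture)
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(packet, (host, port))
```

On the Pd side, a packet like this could, for example, be received in Pd vanilla 0.46 or later with `[netreceive -u -b 9001]` followed by `[oscparse]`; the project itself may use a different OSC receiver.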

== Description ==

My concept comes from an online project called "Touche for Arduino: Advanced touch sensing". My project combines Processing and Pure Data, with Processing acting as the medium that passes data from the Arduino to Pure Data. In Processing, the information from the Arduino is classified, and I then use the classified information in [[Pure Data]] to define different actions. In Pd I create a Gem object and use the data to move it, so that the end result works like a maze game.

== Implementation ==

First I built a circuit on a breadboard myself to turn the Arduino into a touch sensor.

<gallery>
File:touch sensor2.jpg|Breadboard
File:touch sensor1.jpg|Glass with water
File:touch sensor4.jpg|Different gestures
</gallery>

When I touch the "Touch Sensor", Processing receives different data from the Arduino, and from these readings I define different gestures. In my case there are four:
# No touching.
# One finger touching.
# Grab.
# Finger in the water.
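One way the classification step could work is nearest-template matching: record a reference response curve for each of the four gestures, then label every new reading with the gesture whose template is closest. The sketch below assumes the Arduino sends one comma-separated line of readings per measurement and uses Euclidean distance; the line format, the gesture constants and the helper names are hypothetical, and the project's Processing code may classify differently.

```python
import math
from typing import Dict, List, Sequence

# Hypothetical gesture codes, numbered in the order of the gesture list.
NO_TOUCH, ONE_FINGER, GRAB, IN_WATER = 0, 1, 2, 3


def parse_curve(line: str) -> List[float]:
    """Parse one line of comma-separated readings (assumed serial format)."""
    return [float(v) for v in line.strip().split(",") if v]


def distance(a: Sequence[float], b: Sequence[float]) -> float:
    """Euclidean distance between two response curves of equal length."""
    if len(a) != len(b):
        raise ValueError("curves must have the same length")
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def classify(curve: Sequence[float], templates: Dict[int, Sequence[float]]) -> int:
    """Return the gesture code whose recorded template is closest to `curve`."""
    return min(templates, key=lambda code: distance(curve, templates[code]))
```

In use, one template would be recorded per gesture while holding it, and `classify` would run on every new line from the serial port; its result is the gesture number that is then forwarded over OSC.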

Because I want to use these gestures to control the Gem object in Pure Data, I name them "Go right", "Go up", "Go left" and "Go down".

<videoflash type="vimeo">63046775|450|260</videoflash>

In Processing, the gesture data is stored as numbers:
# Gesture is "0".
# Gesture is "1".
# Gesture is "2".
# Gesture is "3".

Then I send the gesture data to Pure Data through OSC, and in Pure Data I map each number to a different movement:
"0" is "Go left".<br>
"1" is "Go up".<br>
"2" is "Go right".<br>
"3" is "Go down".<br>

<videoflash type="vimeo">63048325|450|330</videoflash>
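To illustrate the number-to-movement mapping, here is a minimal grid model of the maze: each received number moves the object one cell in the listed direction unless a wall blocks it. The maze layout, the wall rule and the function names are assumptions for illustration; in the actual project the Gem object is moved inside Pure Data, not in Python.

```python
from typing import Dict, List, Sequence, Tuple

# Gesture number -> (row step, column step), following the mapping 0=left, 1=up, 2=right, 3=down.
# Row 0 is the top of the maze, so "up" decreases the row index.
MOVES: Dict[int, Tuple[int, int]] = {
    0: (0, -1),  # "Go left"
    1: (-1, 0),  # "Go up"
    2: (0, 1),   # "Go right"
    3: (1, 0),   # "Go down"
}

# Hypothetical maze layout: '#' is a wall, '.' is a free cell.
MAZE: List[str] = [
    "#####",
    "#..##",
    "##..#",
    "#####",
]


def step(maze: Sequence[str], pos: Tuple[int, int], gesture: int) -> Tuple[int, int]:
    """Move one cell in the gesture's direction unless a wall or the edge blocks it."""
    if gesture not in MOVES:
        return pos  # unknown numbers leave the object where it is
    dr, dc = MOVES[gesture]
    r, c = pos[0] + dr, pos[1] + dc
    if 0 <= r < len(maze) and 0 <= c < len(maze[r]) and maze[r][c] != "#":
        return (r, c)
    return pos


def play(maze: Sequence[str], start: Tuple[int, int], gestures: Sequence[int]) -> Tuple[int, int]:
    """Apply a sequence of gesture numbers and return the final position."""
    pos = start
    for gesture in gestures:
        pos = step(maze, pos, gesture)
    return pos
```

For example, starting at (1, 1), the sequence 2, 3, 2, 1 (right, down, right, up) ends at (2, 3), because the final "up" runs into a wall.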

[[Media:processing+puredata.zip]]

Latest revision as of 05:59, 14 October 2013
