The Internet is calm and soothing Place

Context:

Concept:

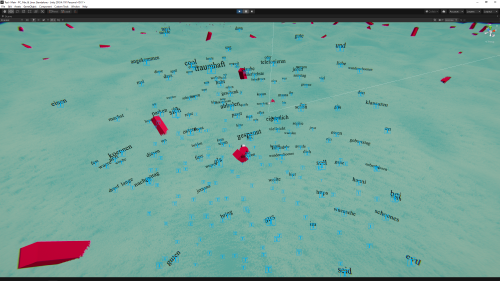

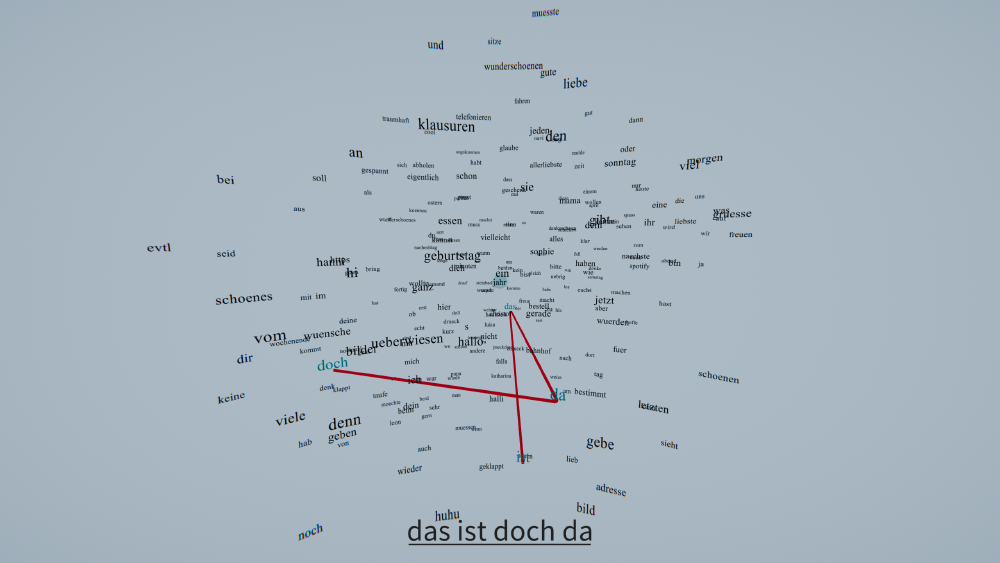

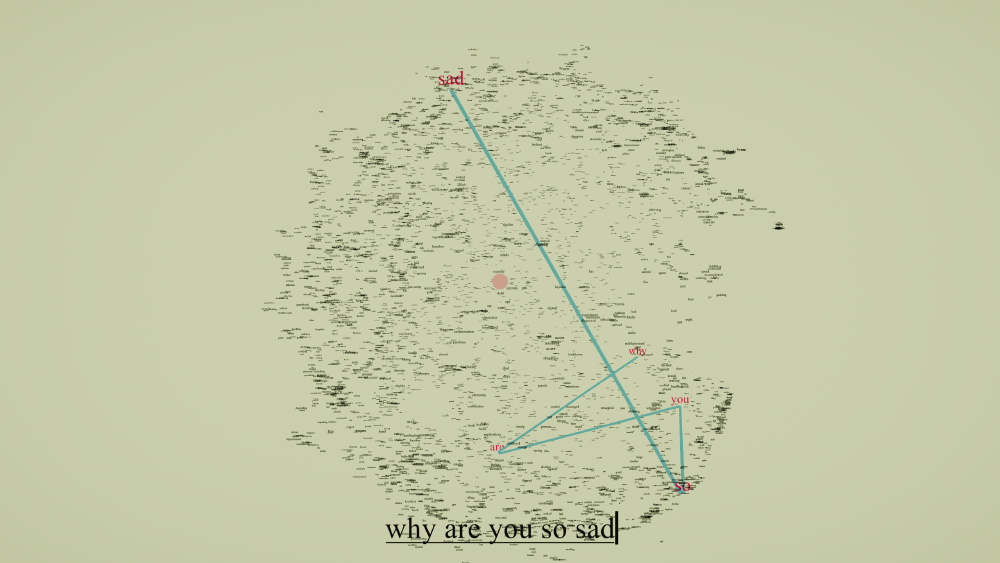

The viewer experiences the word embeddings by wandering through a minimalistic, procedurally generated world. To guide the viewer towards the "word-spheres", a swarm of letters follows the viewer: letters are born and stay alive while the viewer is close to a word-sphere, and they die off the further away the viewer gets. After interacting with a word-sphere, the viewer is presented with the word embedding and can explore the word relations by formulating sentences or searching for words, which are then highlighted in the word-cloud.

Approach:

To analyze the relationships between words, the content of the subreddits had to be extracted first. The Pushshift API was used to crawl all posts and comments, which were then merged into one file per subreddit.

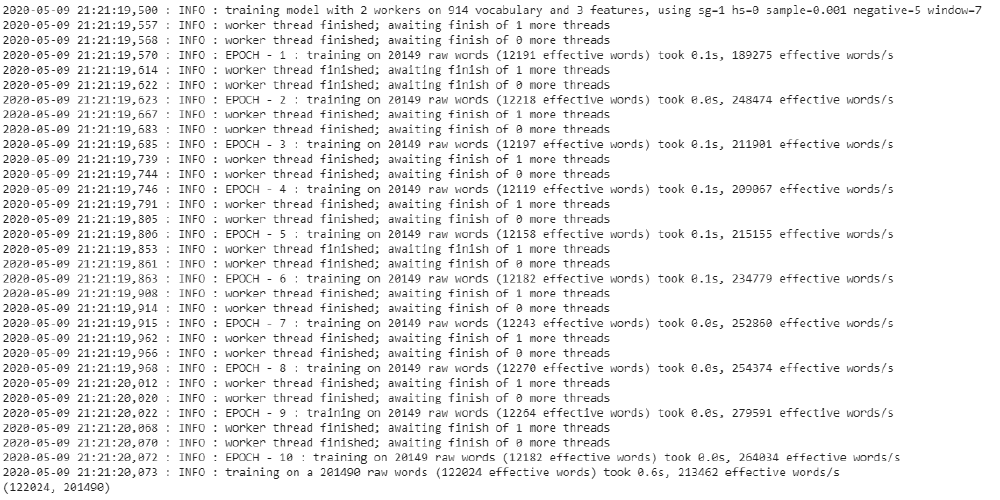

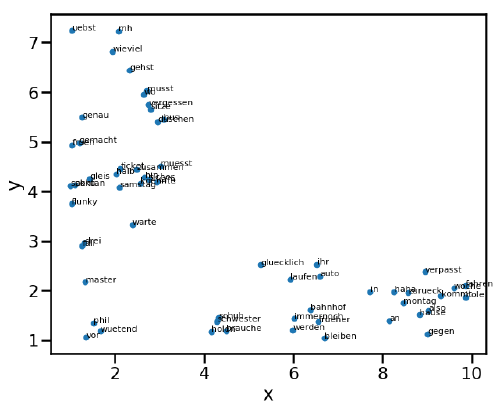

To generate the word-clouds, the text of the posts and comments is extracted from the JSON files and analyzed using the natural language processing technique word2vec.
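The extraction step can be sketched roughly as follows. This is only an illustration, not the project's actual script: the field names `title`, `selftext` and `body` are assumptions about the crawled records, and the helper names are hypothetical. The result is a list of token lists, which is the input shape word2vec implementations typically expect.

```python
import json
import re

# Assumed field names: submissions usually carry "title" and "selftext",
# comments carry "body". Adjust these to the actual crawl output.
TEXT_FIELDS = ("title", "selftext", "body")
PLACEHOLDERS = ("[deleted]", "[removed]")
TOKEN_RE = re.compile(r"[a-z0-9']+")


def record_text(record):
    """Join whatever text fields a post or comment record contains."""
    parts = [record.get(field) for field in TEXT_FIELDS]
    return " ".join(p for p in parts if p and p not in PLACEHOLDERS)


def tokenize(text):
    """Lowercase the text and split it into sentences of word tokens."""
    sentences = re.split(r"[.!?\n]+", text.lower())
    tokenized = [TOKEN_RE.findall(sentence) for sentence in sentences]
    return [tokens for tokens in tokenized if tokens]


def build_corpus(json_lines):
    """Turn an iterable of JSON strings (one record each) into token lists."""
    corpus = []
    for line in json_lines:
        line = line.strip()
        if not line:
            continue
        corpus.extend(tokenize(record_text(json.loads(line))))
    return corpus
```

Each inner list is one sentence, so a sample record with the text "This is calm. Very soothing!" contributes two entries to the corpus.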

The resulting vector space has a very high dimensionality and thus cannot be visualized directly. To reduce it to three dimensions, t-distributed stochastic neighbor embedding (t-SNE) is used, which keeps words close together that are close in the high-dimensional space.
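For reference, the standard formulation of t-SNE can be summarized as below. This is the textbook definition, not something specific to this project; the symbols $x_i$ (high-dimensional word vectors), $y_i \in \mathbb{R}^3$ (their 3D positions), $\sigma_i$ (per-point bandwidth) and $N$ (number of words) are introduced here for illustration.

$$
p_{j|i} = \frac{\exp\left(-\lVert x_i - x_j \rVert^2 / 2\sigma_i^2\right)}{\sum_{k \neq i} \exp\left(-\lVert x_i - x_k \rVert^2 / 2\sigma_i^2\right)},
\qquad
p_{ij} = \frac{p_{j|i} + p_{i|j}}{2N}
$$

$$
q_{ij} = \frac{\left(1 + \lVert y_i - y_j \rVert^2\right)^{-1}}{\sum_{k \neq l} \left(1 + \lVert y_k - y_l \rVert^2\right)^{-1}},
\qquad
C = \mathrm{KL}(P \,\Vert\, Q) = \sum_{i \neq j} p_{ij} \log \frac{p_{ij}}{q_{ij}}
$$

The 3D positions $y_i$ are found by minimizing $C$ with gradient descent, so pairs of words with a high similarity $p_{ij}$ in the original space are pulled close together in the reduced space.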

The resulting data is then imported into Unity via a CSV file, and a billboard text of the word is generated for every data point. This process is repeated for every text.
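A minimal sketch of such a CSV exchange format is shown below. The column layout `word,x,y,z` and the function names are assumptions made for illustration; the project's actual file layout is not documented here. The reader function only mirrors what an importer on the Unity side would have to do.

```python
import csv


def write_points_csv(points, out_file):
    """Write one row per word: the word followed by its 3D coordinates."""
    writer = csv.writer(out_file)
    writer.writerow(["word", "x", "y", "z"])  # assumed header, not from the project
    for word, (x, y, z) in points.items():
        writer.writerow([word, f"{x:.4f}", f"{y:.4f}", f"{z:.4f}"])


def read_points_csv(in_file):
    """Read the file back into {word: (x, y, z)}."""
    reader = csv.DictReader(in_file)
    return {
        row["word"]: (float(row["x"]), float(row["y"]), float(row["z"]))
        for row in reader
    }
```

When writing to a real file, open it with `newline=""` as the `csv` module documentation recommends, for example `open("points.csv", "w", newline="")`.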

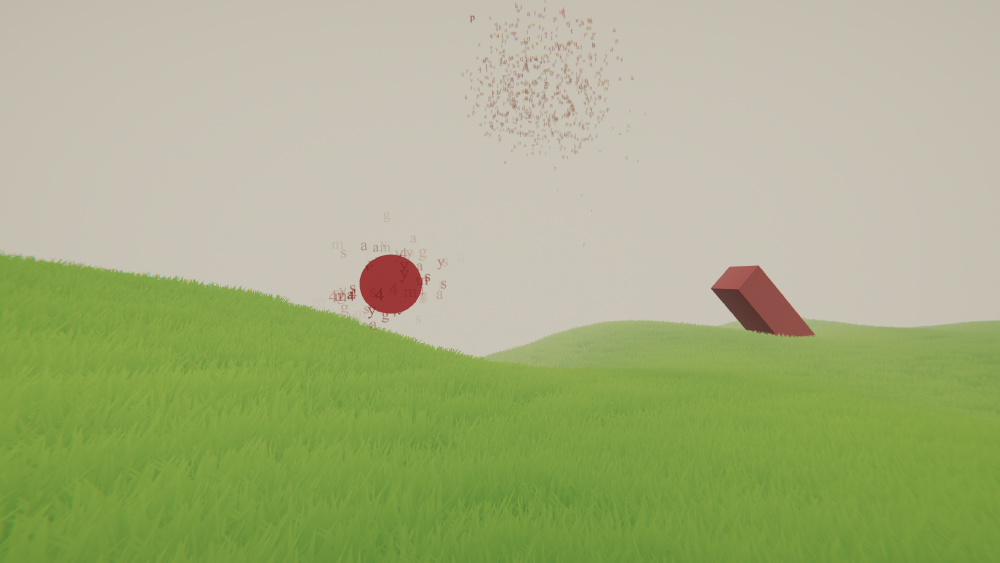

The world in Unity that the viewer walks through is generated using tileable noise as displacement for a plane. As the viewer walks through the world, new chunks are generated on the fly, giving the illusion of an infinite world.
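The idea can be sketched in a few lines. This is a simplified stand-in written in Python rather than the Unity implementation: it uses periodic value noise as one possible kind of tileable noise, and `PERIOD`, the chunk size and all function names are assumptions for illustration.

```python
import math
import random

PERIOD = 8  # the noise repeats every PERIOD units in x and y (assumed value)


def lattice_value(ix, iy, seed):
    """Deterministic pseudo-random value in [0, 1) for a wrapped lattice point."""
    rng = random.Random(f"{seed}:{ix % PERIOD}:{iy % PERIOD}")
    return rng.random()


def smoothstep(t):
    """Ease the interpolation weight so the terrain has no visible creases."""
    return t * t * (3.0 - 2.0 * t)


def tileable_noise(x, y, seed=0):
    """Interpolated value noise that is seamless across tile borders."""
    ix, iy = math.floor(x), math.floor(y)
    tx, ty = smoothstep(x - ix), smoothstep(y - iy)
    v00 = lattice_value(ix, iy, seed)
    v10 = lattice_value(ix + 1, iy, seed)
    v01 = lattice_value(ix, iy + 1, seed)
    v11 = lattice_value(ix + 1, iy + 1, seed)
    top = v00 + (v10 - v00) * tx
    bottom = v01 + (v11 - v01) * tx
    return top + (bottom - top) * ty


def chunk_of(position, chunk_size):
    """Map a world position (x, z) to the integer coordinates of its chunk."""
    return (math.floor(position[0] / chunk_size),
            math.floor(position[1] / chunk_size))


def visible_chunks(position, chunk_size, radius=1):
    """Chunks that should exist around the viewer; others can be discarded."""
    cx, cy = chunk_of(position, chunk_size)
    return [(cx + dx, cy + dy)
            for dx in range(-radius, radius + 1)
            for dy in range(-radius, radius + 1)]
```

Calling `visible_chunks` every time the viewer crosses a chunk border and creating only the chunks that do not exist yet is what produces the impression of an endless world.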

The grass, cuboids and word-spheres are generated and distributed per tile using seeded randomness. Every tile has its own noise and therefore its own distribution pattern of objects, which makes the world feel even more endless.

A swarm of letters driven by the boid algorithm guides the player through the world and towards the word-spheres. The closer the viewer's view direction is to a word-sphere, the more letters are in the swarm and the closer they fly to each other. The boid algorithm simulates the behaviour of birds by enforcing simple rules for every boid, such as separation, alignment and cohesion, with respect to all other boids. A compute shader is used to speed up the simulation.
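The three boid rules can be sketched as a single update step. This is a plain CPU version in Python for readability, not the project's compute shader; the weights, the time step and the speed limit are assumed values chosen for illustration.

```python
import math


def boid_step(positions, velocities, dt=0.1,
              w_sep=1.5, w_ali=1.0, w_coh=1.0, max_speed=2.0):
    """Advance all boids by one step using separation, alignment and cohesion."""
    new_velocities = []
    for i, (p, v) in enumerate(zip(positions, velocities)):
        sep = [0.0, 0.0, 0.0]      # push away from other boids
        avg_v = [0.0, 0.0, 0.0]    # average heading of the others
        center = [0.0, 0.0, 0.0]   # average position of the others
        count = 0
        for j, (q, u) in enumerate(zip(positions, velocities)):
            if i == j:
                continue
            offset = [p[k] - q[k] for k in range(3)]
            dist = math.sqrt(sum(c * c for c in offset))
            count += 1
            for k in range(3):
                if dist > 1e-9:
                    sep[k] += offset[k] / (dist * dist)  # stronger when closer
                avg_v[k] += u[k]
                center[k] += q[k]
        acc = [0.0, 0.0, 0.0]
        if count:
            for k in range(3):
                acc[k] = (w_sep * sep[k]
                          + w_ali * (avg_v[k] / count - v[k])
                          + w_coh * (center[k] / count - p[k]))
        nv = [v[k] + acc[k] * dt for k in range(3)]
        speed = math.sqrt(sum(c * c for c in nv))
        if speed > max_speed:  # clamp so the swarm stays controllable
            nv = [c * max_speed / speed for c in nv]
        new_velocities.append(nv)
    new_positions = [[p[k] + nv[k] * dt for k in range(3)]
                     for p, nv in zip(positions, new_velocities)]
    return new_positions, new_velocities
```

Every boid looks at every other boid, so the cost grows quadratically with the swarm size. Each boid's update is independent of the others within a step, which is why this kind of simulation maps well onto a compute shader.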

Reflection / Outlook:

The interaction with the word-cloud would not work with a real VR controller as it stands: text input would need a heads-up keyboard, and scaling and rotation could be handled with two controllers. To fully benefit from the word embeddings, it would be great to make simple arithmetic in the word-vector space available, maybe by dragging and dropping words onto each other. To further underline the idea of an immersive walk, there should be ambient and interaction sound effects. The distribution of things in the world should not be totally random, and interaction should have consequences.
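What such arithmetic in the word-vector space could look like is sketched below. This is a hypothetical illustration of the proposed feature, not existing project code, and the three-dimensional `TOY_VECTORS` are made-up values chosen only to show the mechanics; real word2vec vectors have far more dimensions.

```python
import math

# Made-up toy vectors for illustration only, not data from the project.
TOY_VECTORS = {
    "king": (0.9, 0.8, 0.1),
    "queen": (0.9, 0.1, 0.8),
    "man": (0.1, 0.9, 0.1),
    "woman": (0.1, 0.1, 0.9),
    "calm": (0.5, 0.5, 0.5),
}


def cosine(a, b):
    """Cosine similarity between two vectors; 0.0 if either has zero length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def analogy(a, b, c, vectors):
    """Return the word closest to vectors[a] - vectors[b] + vectors[c]."""
    query = [x - y + z for x, y, z in zip(vectors[a], vectors[b], vectors[c])]
    candidates = [word for word in vectors if word not in (a, b, c)]
    return max(candidates, key=lambda word: cosine(query, vectors[word]))
```

With these toy values, `analogy("king", "man", "woman", TOY_VECTORS)` returns `"queen"`. Dragging one word onto another could map to the same add or subtract operation, with the nearest word highlighted in the word-cloud.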