
POINT CLOUD EXPERIMENT

I have been interested in exploring what data visualization can do while I am still learning Unity. As an exercise for a larger project, I built a scene from point cloud data and used the walls of the scan as trigger zones that play a sound on collision. I used Pcx, a point cloud importer/renderer for Unity, to import a binary .ply point cloud file. Both the 3D-scanned room and the sound were downloaded.

[[File:Screen Shot.png|700px]]

The particles don't look right and the movement is choppy; I couldn't get a smooth video out of OBS.

https://www.youtube.com/watch?v=lURd37SiN6Y&feature=youtu.be
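For reference, a binary .ply file is an ASCII header (format, vertex count, property list) followed by packed binary vertex records. The following is a minimal reader written under stated assumptions; it is not how Pcx works internally. It assumes the `binary_little_endian` format, a single `vertex` element and only `float`/`uchar` properties, and the function name `read_ply_vertices` is hypothetical.

```python
import io
import struct

# PLY property types this sketch understands, mapped to struct codes
# (assumption: real files may also use int, double, list properties, etc.).
PLY_TYPES = {"float": "f", "float32": "f", "uchar": "B", "uint8": "B"}


def read_ply_vertices(stream):
    """Parse a binary little-endian PLY stream into a list of {property: value} dicts."""
    if stream.readline().strip() != b"ply":
        raise ValueError("not a PLY file")
    count, names, codes = 0, [], []
    while True:
        line = stream.readline()
        if not line:
            raise ValueError("header has no end_header line")
        parts = line.decode("ascii").split()
        if not parts or parts[0] in ("comment", "obj_info"):
            continue
        if parts[0] == "format":
            if parts[1] != "binary_little_endian":
                raise ValueError("only binary_little_endian is handled here")
        elif parts[0] == "element":
            if parts[1] != "vertex":
                raise ValueError("only a single vertex element is handled here")
            count = int(parts[2])
        elif parts[0] == "property":
            if parts[1] not in PLY_TYPES:
                raise ValueError("unsupported property type: " + parts[1])
            codes.append(PLY_TYPES[parts[1]])
            names.append(parts[2])
        elif parts[0] == "end_header":
            break
    if not codes:
        raise ValueError("no vertex properties declared")
    # '<' means little-endian with no padding, matching the packed PLY records.
    record = struct.Struct("<" + "".join(codes))
    data = stream.read(record.size * count)
    if len(data) != record.size * count:
        raise ValueError("vertex data is truncated")
    return [dict(zip(names, values)) for values in record.iter_unpack(data)]


# Usage: build a tiny two-point file in memory and read it back.
header = (
    b"ply\nformat binary_little_endian 1.0\n"
    b"element vertex 2\nproperty float x\nproperty float y\nproperty float z\n"
    b"end_header\n"
)
body = struct.pack("<3f", 0.0, 1.0, 2.0) + struct.pack("<3f", 0.5, 0.25, -1.0)
points = read_ply_vertices(io.BytesIO(header + body))
print(points[1])  # {'x': 0.5, 'y': 0.25, 'z': -1.0}
```

A real scan usually also carries `red`, `green`, `blue` (uchar) properties, which this sketch would return as extra dictionary keys.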

----

CRITICAL VR PROJECT

Continuing with data visualization in Unity, I tried to build a multidimensional graphic from the output of Word2Vec, a machine learning algorithm from natural language processing (NLP). Word2Vec is a group of related techniques for learning word embeddings (vector representations of words); its models are shallow, two-layer neural networks trained to reconstruct the linguistic contexts of words. It is open source and was created, published and patented by a team of researchers at Google in 2013.

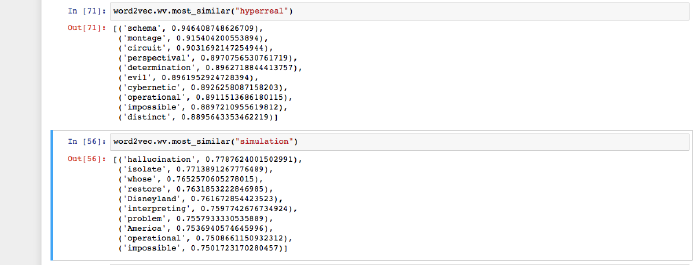
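The phrase "trained to reconstruct linguistic contexts" can be made concrete with the skip-gram variant of Word2Vec. Each word in the text is paired with its neighbors inside a fixed window, and the network learns to predict a context word from the center word. This minimal sketch only builds those (center, context) training pairs and does not train anything. The window size and the sample sentence are illustrative choices. gensim generates such pairs internally, and by default (`sg=0`) it uses the related CBOW variant, which predicts the center word from its context instead.

```python
def skipgram_pairs(tokens, window=2):
    """Return (center, context) pairs for every token within `window` positions."""
    pairs = []
    for i, center in enumerate(tokens):
        # Clamp the window to the start and end of the token list.
        start, stop = max(0, i - window), min(len(tokens), i + window + 1)
        for j in range(start, stop):
            if j != i:  # a word is not its own context
                pairs.append((center, tokens[j]))
    return pairs


tokens = "the map precedes the territory".split()
for center, context in skipgram_pairs(tokens, window=1):
    print(center, "->", context)
```

A larger window gives each word more context pairs, so the learned vectors reflect broader topical similarity rather than only immediate neighbors.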

I chose a literary work by Jean Baudrillard, Simulacra and Simulation. I used the gensim library’s Word2Vec model to get a word-embedding vector for each word. Word2Vec can then be used to compute the similarity between words across a large corpus of text. The algorithm is very good at finding the most similar words (nearest neighbors), and I also tried adding and subtracting words. The examples below show how the program works.

[[File:simularca.png|700px]]
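In Word2Vec, "most similar" is usually measured with cosine similarity between embedding vectors. Adding or subtracting words means doing that arithmetic on the normalized vectors before the nearest-neighbor search. gensim exposes this as `model.wv.most_similar(positive=[...], negative=[...])`. The plain-Python sketch below roughly mirrors that idea. The tiny 3-dimensional vectors are made up for illustration (real embeddings usually have around 100 or more dimensions) and are not results from the Baudrillard text.

```python
import math


def unit(v):
    """Scale a vector to length 1."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def cosine(a, b):
    """Cosine similarity: dot product of the two normalized vectors."""
    return sum(x * y for x, y in zip(unit(a), unit(b)))


def most_similar(vectors, positive, negative=(), topn=3):
    """Rank words by cosine similarity to sum(positive) - sum(negative)."""
    dims = len(next(iter(vectors.values())))
    query = [0.0] * dims
    for word in positive:
        query = [q + x for q, x in zip(query, unit(vectors[word]))]
    for word in negative:
        query = [q - x for q, x in zip(query, unit(vectors[word]))]
    exclude = set(positive) | set(negative)  # never return the input words
    scored = [(w, cosine(query, v)) for w, v in vectors.items() if w not in exclude]
    scored.sort(key=lambda item: item[1], reverse=True)
    return scored[:topn]


# Made-up toy vectors, chosen only so the example is easy to follow.
vectors = {
    "original": [1.0, 0.0, 0.2],
    "copy": [0.0, 1.0, 0.2],
    "reality": [1.0, 0.0, 1.0],
    "simulation": [0.0, 1.0, 1.0],
    "territory": [1.0, 0.1, 0.6],
    "map": [0.2, 1.0, 0.0],
}
print(most_similar(vectors, ["simulation"], topn=1)[0][0])  # copy
print(most_similar(vectors, ["copy", "reality"], ["original"], topn=1)[0][0])  # simulation
```

The second call is the "copy - original + reality" kind of word arithmetic. With real embeddings the answer depends entirely on the training corpus.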

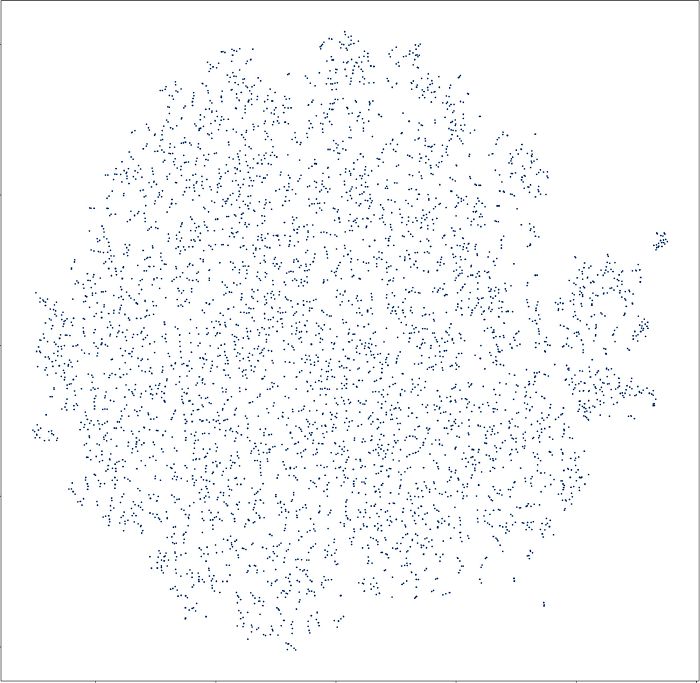

This graphic shows the Word2Vec space with its dimensions reduced to x and y coordinates. Every dot represents a word, and dots that are closer together represent words that are more similar.

[[File:indexsimularca4.jpg|700px]]
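The page does not say which reduction method produced this graphic; PCA and t-SNE are both common choices for Word2Vec plots. As one hedged illustration, here is principal component analysis (PCA) in plain Python. It uses power iteration on the covariance matrix to find the top `k` directions of variance. With `k=2` it yields x, y coordinates like the plot above, and `k=3` yields x, y, z. The function name `pca` and its parameters are hypothetical. For a real vocabulary, a library implementation such as scikit-learn's `PCA` or `TSNE` would be the practical choice.

```python
import math
import random


def pca(vectors, k, iters=100, seed=0):
    """Project `vectors` (equal-length lists) onto their top-k principal components."""
    n, d = len(vectors), len(vectors[0])
    mean = [sum(v[j] for v in vectors) / n for j in range(d)]
    centered = [[v[j] - mean[j] for j in range(d)] for v in vectors]
    # Covariance matrix (d x d) of the centered data.
    cov = [[sum(row[a] * row[b] for row in centered) / (n - 1) for b in range(d)]
           for a in range(d)]
    rng = random.Random(seed)
    components = []
    for _ in range(k):
        vec = [rng.uniform(-1.0, 1.0) for _ in range(d)]
        for _ in range(iters):
            # Power iteration step: multiply by the covariance matrix...
            vec = [sum(cov[a][b] * vec[b] for b in range(d)) for a in range(d)]
            # ...then remove directions already found (Gram-Schmidt deflation).
            for comp in components:
                dot = sum(x * c for x, c in zip(vec, comp))
                vec = [x - dot * c for x, c in zip(vec, comp)]
            norm = math.sqrt(sum(x * x for x in vec))
            if norm < 1e-12:
                vec = [0.0] * d  # no variance left in the remaining directions
                break
            vec = [x / norm for x in vec]
        components.append(vec)
    # Coordinates of each point along each component.
    return [[sum(x * c for x, c in zip(row, comp)) for comp in components]
            for row in centered]


# Usage with hypothetical 4-dimensional vectors for three words, reduced to x, y, z.
words = ["simulation", "reality", "map"]
embeddings = [[0.9, 0.1, 0.3, 0.0], [0.1, 0.8, 0.2, 0.4], [0.7, 0.2, 0.9, 0.1]]
for word, xyz in zip(words, pca(embeddings, k=3)):
    print(word, [round(c, 3) for c in xyz])
```

PCA keeps the directions with the most variance, so large-scale distances survive the projection. t-SNE instead focuses on keeping close neighbors together, which often gives tighter visual clusters.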

To get three-dimensional data from Word2Vec, I first reduced the dimensions to 3D and then exported the data as a CSV table containing the x, y, z coordinates and the corresponding word. In principle, I need to know what input Unity expects and work out how to generate the missing information. My first solution was to convert the .csv file into a point cloud and open it in Unity with the point cloud importer/renderer. Here is the result:

[[File:simularcamoviemovie.mp4|800px]]
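This is a minimal sketch of the two file conversions described above. It writes the reduced coordinates to a CSV table (x, y, z, word), then converts that table into a binary little-endian PLY point cloud, the kind of .ply file used in the point cloud experiment. The column layout and the function names `write_word_csv` and `csv_to_ply` are hypothetical. Each PLY vertex here carries only coordinates, so the words are dropped in the conversion. This is consistent with the result appearing as one unlabeled point cloud.

```python
import csv
import io
import struct


def write_word_csv(stream, words, coords):
    """Write one row per word: x, y, z, word (hypothetical column layout)."""
    writer = csv.writer(stream)
    writer.writerow(["x", "y", "z", "word"])
    for word, (x, y, z) in zip(words, coords):
        writer.writerow([x, y, z, word])


def csv_to_ply(csv_stream):
    """Convert an x,y,z,word CSV into binary little-endian PLY bytes (words are dropped)."""
    rows = list(csv.DictReader(csv_stream))
    header = (
        "ply\n"
        "format binary_little_endian 1.0\n"
        f"element vertex {len(rows)}\n"
        "property float x\n"
        "property float y\n"
        "property float z\n"
        "end_header\n"
    ).encode("ascii")
    # Each vertex is three packed 32-bit floats, little-endian, no padding.
    body = b"".join(
        struct.pack("<3f", float(r["x"]), float(r["y"]), float(r["z"])) for r in rows
    )
    return header + body


# Usage with in-memory streams; with real files, open the CSV with newline="".
text = io.StringIO()
write_word_csv(text, ["simulation", "reality"], [(0.5, -1.0, 2.0), (0.25, 0.0, 1.5)])
text.seek(0)
ply_bytes = csv_to_ply(text)
with_header_len = len(ply_bytes)
```

The resulting bytes could be written to a `.ply` file with `open(path, "wb")`.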

NEXT STEP(S)

-The particle system is one single object. To get a separate object for every word, I first need to understand the concept of serialization in Unity and use it to read the CSV file and create the objects automatically.

-Once I have an array of objects, one per word, I need to attach the corresponding word to each object using the TextMeshPro asset.

-Finally, I need to create an orbit camera and make the text face the camera from every angle.
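The three steps above are Unity work in C#, but their data side can be outlined independently. This is a hedged Python sketch, not Unity code. Each CSV row becomes one record holding a word and its position, standing in for the per-word object a TextMeshPro label would later show. `orbit_position` places a camera on a sphere around a target from yaw, pitch and distance; it assumes a Y-up axis convention like Unity's, with pitch as elevation, chosen for this sketch. `facing_direction` gives the unit vector from a label toward the camera, i.e. the direction its text should face. All names are hypothetical. Inside Unity, one common approach is to copy the camera's rotation onto each label every frame.

```python
import csv
import io
import math
from dataclasses import dataclass


@dataclass
class WordPoint:
    """One word and its 3D position (hypothetical stand-in for a Unity object)."""
    word: str
    x: float
    y: float
    z: float


def load_word_points(stream):
    """Turn each x,y,z,word CSV row into one WordPoint."""
    return [WordPoint(r["word"], float(r["x"]), float(r["y"]), float(r["z"]))
            for r in csv.DictReader(stream)]


def orbit_position(target, yaw_deg, pitch_deg, distance):
    """Camera position on a sphere around `target` (Y-up; pitch = elevation angle)."""
    yaw, pitch = math.radians(yaw_deg), math.radians(pitch_deg)
    tx, ty, tz = target
    return (tx + distance * math.cos(pitch) * math.sin(yaw),
            ty + distance * math.sin(pitch),
            tz + distance * math.cos(pitch) * math.cos(yaw))


def facing_direction(label, camera):
    """Unit vector from a label toward the camera: the way its text should face."""
    dx, dy, dz = (c - l for c, l in zip(camera, label))
    norm = math.sqrt(dx * dx + dy * dy + dz * dz)
    return (dx / norm, dy / norm, dz / norm)


# Usage: two words, a camera orbiting their center, and each label's facing direction.
points = load_word_points(io.StringIO("x,y,z,word\n1.0,0.0,0.0,simulation\n0.0,2.0,0.0,reality\n"))
center = tuple(sum(getattr(p, axis) for p in points) / len(points) for axis in "xyz")
cam = orbit_position(center, yaw_deg=0, pitch_deg=0, distance=5)
for p in points:
    print(p.word, facing_direction((p.x, p.y, p.z), cam))
```

Changing `yaw_deg` and `pitch_deg` with mouse input and recomputing each label's facing direction every frame is the basic orbit-and-billboard loop.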

Latest revision as of 15:29, 18 June 2020
