Project Description

Full Artistic Statement in German as PDF download

Video Documentation (with screen recording)

Audio Samples

The complete patch, including download, purchase or information links to:
- all externals used in the patch
- all the hardware used in the patch and where to buy it (including the audio output situation)

Patch walkthrough and tutorial

Conclusion and future works

All the items listed above will be added here as soon as the project is finished.

Introduction

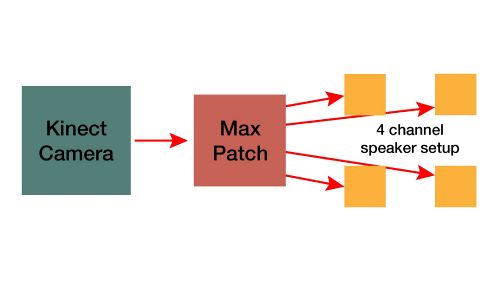

The goal of the semester project was to write a Max patch for an interactive sound installation. The installation connects the movement of an individual in a defined space or room to the acoustic environment of that area.

The main idea was to give the person a certain amount of control over the parameters of sounds that are played and positioned in the room, without the person necessarily being aware of the interaction. The goal of the installation is not to create a new environment or to make the installation speak for itself. It is meant to enhance and augment the given surroundings, giving the recipient the ability to interact with their environment.

This required an interface that was neither visible nor touchable, so the installation uses video tracking to scan the movement of the person in the room.

The data gained from the video tracking is used to influence the parameters of the sound as well as its spatialisation, meaning its position in the room. This already offers a lot of potential to give the recipient a feeling of influence over their environment.
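The mapping from tracking data to sound can be illustrated outside of Max with a minimal Python sketch. It is not the actual patch: the frame size of 640 x 480 pixels, the parameter names (`pan_x`, `pan_y`, `cutoff_hz`) and the cutoff range are assumptions chosen purely for illustration.

```python
def normalise(value, lo, hi):
    """Scale value from the range [lo, hi] to [0.0, 1.0], clamping at both ends."""
    if hi == lo:
        raise ValueError("lo and hi must differ")
    t = (value - lo) / (hi - lo)
    return min(1.0, max(0.0, t))


def scale(t, out_lo, out_hi):
    """Map a normalised value t in [0.0, 1.0] to the range [out_lo, out_hi]."""
    return out_lo + t * (out_hi - out_lo)


def position_to_parameters(px, py, width=640, height=480):
    """Turn a tracked pixel position into hypothetical sound parameters.

    width and height are an assumed camera frame size. The returned
    parameter names and ranges are illustrative, not taken from the patch.
    """
    x = normalise(px, 0, width - 1)
    y = normalise(py, 0, height - 1)
    return {
        "pan_x": scale(x, -1.0, 1.0),          # left/right position of the sound
        "pan_y": scale(y, -1.0, 1.0),          # rear/front position of the sound
        "cutoff_hz": scale(y, 200.0, 4000.0),  # an example sound parameter
    }
```

Calling `position_to_parameters(0, 0)` gives the values for one corner of the frame, and positions outside the frame are clamped to the nearest edge. In Max, the same normalise-then-scale step is typically done with a `scale` object.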

Artistic Statement

The whole process of planning, building and testing the installation took place during the first online semester and the Covid-19 pandemic. Most of the life of an ordinary student like me happened in a small room. Whether it’s working, sleeping, resting, meeting a few friends or just spending free time, everything that normally happened in quite different spaces was now compressed into one small room. That naturally created different areas within the room, each connected with very different meanings, actions and emotions. They often clashed, and it wasn’t easy to separate them from each other. These smaller areas are the basis for the sound composition.

This connection between the sound installation and the room during the lockdown wasn’t planned from the beginning, but evolved during the semester. The reason was that working on the project clearly exposed the problem of these different areas and activities colliding due to the lack of space. For example, when I set up a four-speaker surround system in my room to try connecting the tracking data to the spatialisation of the sound material, one speaker had to stand on my bed to be in the right place, so I had to sleep elsewhere for a few nights. This slowly led me to think that the installation had to be about this problem, as it fits the original idea of the installation so well. As a result, you could say that the installation deals with the struggles and problems of its own creation.

This also meant that the installation was to be placed in a normal bedroom of a student's flat instead of a studio room. On the one hand, this meant that a speaker setup had to be placed in this room; on the other hand, it meant that the sound quality wouldn't be as good as in a professional surround studio. Yet an "artificial" setting like a surround studio wouldn't fit the context and idea of the installation.

The Setup

To be independent of the lighting conditions in the room, an infrared camera was used. The most practical and, above all, cheapest way to do this was to use a Kinect 360 camera.

In order to position the sounds in the room and change these positions, a setup of multiple speakers was necessary. In this case, a four-speaker surround setup was used.
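How a position in the room can translate into signal levels for four speakers can be sketched with simple equal-power panning. This is a swapped-in illustration, not the method used in the patch, which relies on the ICST Ambisonics plugins listed under Resources. The speaker layout (one speaker in each corner of a square) and the name `quad_gains` are assumptions.

```python
import math


def quad_gains(x, y):
    """Equal-power gains for four speakers in the corners of a square.

    x runs from -1.0 (left) to 1.0 (right), y from -1.0 (rear) to 1.0 (front).
    The corner layout is an assumption for this sketch.
    """
    x = max(-1.0, min(1.0, x))
    y = max(-1.0, min(1.0, y))
    # Map each axis to an angle between 0 and pi/2, then use cos/sin as a
    # pair of gains whose squares always sum to 1 (constant power).
    angle_x = (x + 1.0) * math.pi / 4.0
    angle_y = (y + 1.0) * math.pi / 4.0
    left, right = math.cos(angle_x), math.sin(angle_x)
    rear, front = math.cos(angle_y), math.sin(angle_y)
    return {
        "front_left": front * left,
        "front_right": front * right,
        "rear_left": rear * left,
        "rear_right": rear * right,
    }
```

A sound in the centre of the room, `quad_gains(0.0, 0.0)`, is distributed equally to all four speakers, while a sound in a corner is sent almost entirely to the speaker in that corner. Ambisonics handles the same task more flexibly, since the encoded sound field is independent of the speaker layout.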

As the pictures above show, the Kinect camera was mounted on the ceiling, facing downwards.
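With the camera looking straight down, one possible way to locate a person is to look for everything that is clearly closer to the camera than the floor and take the centre of those pixels. The following Python sketch shows this idea only; it is not necessarily how the patch tracks the person. The depth values in millimetres, the value 0 for "no reading", the 300 mm margin and the name `find_person` are all assumptions.

```python
def find_person(depth_mm, floor_mm, margin_mm=300):
    """Return the (x, y) centroid of all pixels well above the floor, or None.

    depth_mm is a list of rows of distances from the camera, assumed to be
    in millimetres, with 0 assumed to mean "no reading". floor_mm is the
    measured distance from the camera to the empty floor.
    """
    sum_x = 0
    sum_y = 0
    count = 0
    for row_index, row in enumerate(depth_mm):
        for col_index, distance in enumerate(row):
            # Keep valid readings that are closer than the floor by a margin.
            if 0 < distance < floor_mm - margin_mm:
                sum_x += col_index
                sum_y += row_index
                count += 1
    if count == 0:
        return None  # nobody is in view
    return (sum_x / count, sum_y / count)
```

The resulting pixel position can then be normalised and mapped to sound parameters and to the position of the sound in the room.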

Video Documentation

Here you can find a video documentation of the final installation prototype. The audio signal is explained, recorded and converted to stereo. This means the audio isn't spatialised in the video, but at least you are able to hear it.

The video is divided into three parts: Introduction, Demonstration and Explanation.

- 00:17 - 10:00 Introduction
- 10:00 - 18:40 Demonstration
- 18:40 - End Explanation

I have also made a patch walkthrough in video format, which you can watch using the link below.

Patch Walkthrough and Setup Tutorial

Resources

HARDWARE

Kinect 360 sensor https://www.amazon.de/Microsoft-LPF-00057-Xbox-Kinect-Sensor/dp/B009SJAIP6 (pretty expensive... but you can easily get used ones for less than 40€ on ebay-kleinanzeigen.de)

Kinect 360 Adapter https://www.amazon.de/gp/product/B008OAVS3Q/ref=ppx_yo_dt_b_asin_title_o04_s00?ie=UTF8&psc=1

To output audio to multiple external speakers, you'll most likely need a

SOFTWARE

ICST Ambisonics Plugins free download https://www.zhdk.ch/forschung/icst/icst-ambisonics-plugins-7870

TUTORIALS

Color Tracking in Max MSP https://www.youtube.com/watch?v=t0OncCG4hMw&list=PLG-tSxIO2Jkjj0BthZ_y0GRkWRjAvL1Uo&index=4&t=310s (Part 1 of 3)

Kinect Input and normalisation https://www.youtube.com/watch?v=ro3OwWnjfDk&list=PLG-tSxIO2Jkjj0BthZ_y0GRkWRjAvL1Uo&index=5

Conclusion and future works

The project in its current form is finished. Still, the patch and the installation context will be developed further, as there is huge potential in this instrument.

So far, the control works only by changing the position values. It's planned to add, for example, blob tracking to the patch in order to control parameters through a certain movement, no matter where in the room it happens. This would make the whole sound sculpture more diverse and flexible.

A big and very important addition that may happen during the next semester is a visual dimension. This means the sensor data will also be used to control, for example, the lighting in the room. That's why it's important to use a Kinect 360 with an infrared camera rather than a simple camera: the tracking has to keep working when the visible light in the room changes.

Also, the patch so far only scratches the surface of what the Kinect camera is capable of. For example, it could become possible for more than one person at a time to visit the installation, to explore the given sound situation together or even to compose the sounds as a group.