==Motion to sound== | |||

===idea=== | |||

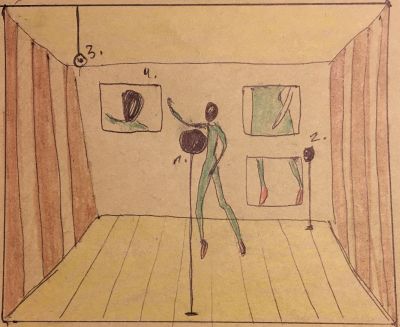

The aim of the project is to play sounds by moving the body. I would like to use 3 webcams that run under different tracking systems.The movement is recorded in max msp and converted into numbers. This numbers are converted into the sounds or they activate the sounds. | |||

Here is a little insight from outside. | |||

[[ | [[File:71F3113C-0DE0-4032-985D-5BAC0C799F2D.jpeg|400px]] | ||

= | *1: cam:face trackin | ||

== | *2: cam:motion tracking legs | ||

== | *3: cam:motion tracking arms/body | ||

=== | *4: screens | ||

===process=== | |||

First step is to look how to get three webcams in max to run in the same time. There for I used the the jit.qt.grab or jit.dx.grab (Windows) and see whether I can have three of these objects running simultaneously for the three different inputs. | |||

So now we come to the point where I start to play around with different opurtunities for tracking. | |||

Face tracking : run with Face OSC | |||

There for I need to install Face Osc and connect it to max with the object: udprecive 8338 the number is the ip of face osc you can change if like. Then you need the object OSC-route/… after you can say that you wonna route the mouth or the left eyebrow. If something in the route area will change it gets a bang wich activate the sound. | |||

==Patch== | |||

===Motion tracking=== | |||

at first i used the jit.change object wich is connected to jit.rgb2luma this both objects converts RGB values to luminosity which is then sent to the ‘jit.matrix’ object. | |||

Latest revision as of 15:06, 27 April 2020

Motion to sound

idea

The aim of the project is to play sounds by moving the body. I would like to use three webcams, each running a different tracking system. The movement is captured in Max/MSP and converted into numbers. These numbers are either converted into sounds or used to trigger them.

Here is a quick look at the setup from the outside:

- 1: cam: face tracking

- 2: cam: motion tracking legs

- 3: cam: motion tracking arms/body

- 4: screens

process

The first step is to work out how to get three webcams running in Max at the same time. For this I used jit.qt.grab (or jit.dx.grab on Windows) to see whether three of these objects can run simultaneously, one for each of the three inputs.

Next I started experimenting with the different options for tracking.

Face tracking: runs with FaceOSC

For this I need to install FaceOSC and connect it to Max with the object udpreceive 8338. The number is the port that FaceOSC sends on; you can change it if you like. Then you need the object OSC-route/…, where you say which part you want to route, for example the mouth or the left eyebrow. Whenever a value on the routed address changes, a bang is sent, which triggers the sound.
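Outside of Max, the same receive, route and bang-on-change chain can be sketched in a few lines of Python. This is only an illustration, not the patch itself: the names `parse_osc_message`, `ChangeRouter` and `listen` are made up for this example, the address `/gesture/mouth` is an assumption about what FaceOSC sends, and the parser only handles plain OSC messages with float and int arguments. Bundles are skipped, so if the sender wraps its messages in bundles they would have to be unpacked first.

```python
import socket
import struct


def _read_padded_string(data, offset):
    """Read a null-terminated OSC string that is padded to a multiple of 4 bytes."""
    end = data.index(b"\x00", offset)
    text = data[offset:end].decode("ascii")
    # Skip the terminating null and any padding up to the next 4-byte boundary.
    next_offset = (end + 4) & ~3
    return text, next_offset


def parse_osc_message(data):
    """Return (address, args) for a plain OSC message with 'f' and 'i' arguments."""
    address, offset = _read_padded_string(data, 0)
    type_tags, offset = _read_padded_string(data, offset)
    args = []
    for tag in type_tags.lstrip(","):
        if tag == "f":
            (value,) = struct.unpack_from(">f", data, offset)
        elif tag == "i":
            (value,) = struct.unpack_from(">i", data, offset)
        else:
            raise ValueError("unsupported OSC type tag: " + tag)
        offset += 4
        args.append(value)
    return address, args


class ChangeRouter:
    """Call on_change only when a value under the given address prefix changes."""

    def __init__(self, prefix, on_change):
        self.prefix = prefix
        self.on_change = on_change
        self.last = {}  # last seen arguments per address

    def handle(self, address, args):
        if not address.startswith(self.prefix):
            return False  # not the routed part of the face
        if self.last.get(address) == args:
            return False  # nothing changed, so no "bang"
        self.last[address] = args
        self.on_change(address, args)
        return True


def listen(router, host="0.0.0.0", port=8338):
    """Receive UDP packets forever and pass plain OSC messages to the router."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.bind((host, port))
        while True:
            data, _ = sock.recvfrom(4096)
            if data.startswith(b"#bundle"):
                continue  # bundles are not handled in this sketch
            try:
                address, args = parse_osc_message(data)
            except ValueError:
                continue  # ignore packets this simple parser cannot read
            router.handle(address, args)
```

To try it, create a router such as `ChangeRouter("/gesture/mouth", lambda address, values: print("bang", address, values))` and pass it to `listen(router)`. The callback plays the role of the bang that starts the sound in the patch.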

Patch

Motion tracking

At first I used the jit.change object, which is connected to jit.rgb2luma. jit.change only passes on frames that differ from the previous one, and jit.rgb2luma converts the RGB values to luminance, which is then sent to the ‘jit.matrix’ object.
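To make the idea of turning a camera image into a number more concrete, here is a small Python sketch of the luminance step followed by a simple frame comparison. It is not the Max patch: the weights 0.299, 0.587 and 0.114 are the common luma weights and are assumed here, the frame-differencing step is one common way to measure motion rather than something the patch is known to do, and the function names `rgb_to_luma` and `motion_amount` are made up for this example. A frame is a list of rows, and each row is a list of (r, g, b) tuples.

```python
def rgb_to_luma(frame):
    """Convert a frame of (r, g, b) pixels to a frame of luminance values."""
    return [
        [0.299 * r + 0.587 * g + 0.114 * b for (r, g, b) in row]
        for row in frame
    ]


def motion_amount(prev_luma, curr_luma):
    """Mean absolute luminance difference between two frames of the same size."""
    total = 0.0
    count = 0
    for prev_row, curr_row in zip(prev_luma, curr_luma):
        for p, c in zip(prev_row, curr_row):
            total += abs(c - p)
            count += 1
    return total / count if count else 0.0


def is_moving(prev_luma, curr_luma, threshold=10.0):
    """True when the average change between the frames exceeds the threshold."""
    return motion_amount(prev_luma, curr_luma) > threshold
```

The single number returned by `motion_amount` could then be scaled to a volume or pitch, and `is_moving` could be used to trigger a sound. The threshold of 10.0 is an arbitrary starting value that would need tuning for the actual camera and lighting.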