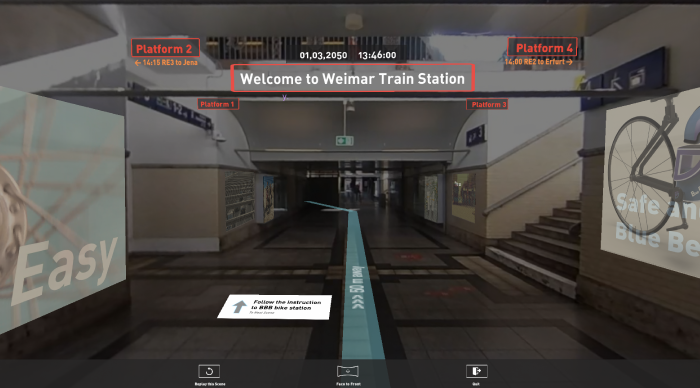

Blue Belly Bike Sharing - Imaginary future bike sharing service in Weimar

Introduction:

The project focuses on the relationship between technology and mobility. The objective is to discuss how XR technology could be applied to the future cycling experience. The outcome is an imaginary future bike sharing system called “Blue Belly Bike”, set in Weimar in 2035. This documentation mainly covers how I experimented with Unity to simulate the experience visually.

Background:

Technology plays an important role in the development of modern transportation. Topics such as electric vehicles (EV), autonomous driving and underground tunnels, which build on new technologies such as artificial intelligence (AI) and cross reality (XR), have become more and more popular. People have tried to solve current traffic problems by applying these new technologies. In recent years, people’s awareness of sustainability has risen steadily, and the call for sustainable mobility is getting louder. Especially in 2020, the impact of COVID-19 pushed people to re-evaluate the relationship between humans and the environment. Bicycle use also boomed in 2020: 32% of people stated that they were cycling more due to the crisis (Haubold, 2020). Meanwhile, the importance of technology and digital infrastructure has become so obvious that people depend on it in their everyday lives. What will future mobility look like? How can we make transportation more sustainable?

Experiment with camera:

1. Dynamic scene

It is especially hard to shoot a dynamic 360 scene without capturing the equipment in the shot. Even with the support of a stabilizer (gimbal), it remains a question how to hide the stabilizer's axis from the camera. I planned to build a scene that feels like riding a bike, but I could not find proper equipment for stabilization. I tried several filming methods, including filming while riding a bike. In the end, filming while walking, combined with software stabilization, 2D video editing and a simulation of speed, created the effect I wanted: the footage is more stable, and the speed is simulated with the camera's “field of view”.

Shooting/ Instead of using a stabilizer and shooting while riding a bike, which could block a large portion of the bottom of the image, I used a simple tripod to hold the 360 camera while walking. As a result, only the top of my head and some black spots from the tripod had to be erased.

Stabilization and editing/ After Effects is the main software I used to edit and prepare the 360 video for Unity. I used camera analysis for stabilization and 2D editing to erase the top of my head and the shadow.

Speed simulation/ Since the video was filmed while walking, the speed is too slow for a cycling simulation. In this case, increasing the “field of view” of the camera in Unity creates a good visual effect of speed. Even though the image is stretched at the sides, the front view looks more spacious, which creates the illusion of moving forward faster.
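The field-of-view effect described above can be put into rough numbers. The following is a minimal Python sketch, not the Unity script used in the project: it assumes a simple perspective camera and compares how large an object at the centre of the image appears at the two settings shown in the figures (60 and 133). The helper names `center_scale` and `lerp_fov` are hypothetical, and the gradual transition between the two values is only an assumption about how such an effect might be animated.

```python
import math


def center_scale(fov_deg: float) -> float:
    """Relative on-screen size of an object at the image centre
    for a perspective camera with the given field of view (degrees)."""
    return 1.0 / math.tan(math.radians(fov_deg) / 2.0)


def lerp_fov(start: float, end: float, t: float) -> float:
    """Linearly blend between two field-of-view values, with t clamped to [0, 1].
    (Assumption: a smooth transition; the project may simply set a fixed value.)"""
    t = max(0.0, min(1.0, t))
    return start + (end - start) * t


narrow = center_scale(60.0)    # original camera setting
wide = center_scale(133.0)     # widened setting used for the speed effect

# How many times smaller the centre of the view appears at the wide setting.
shrink_factor = narrow / wide

if __name__ == "__main__":
    print(f"centre of the image appears about {shrink_factor:.2f}x smaller")
    print("halfway field of view:", lerp_fov(60.0, 133.0, 0.5))
```

Under these assumptions the centre of the view shrinks to roughly a quarter of its size, which matches the impression that the front view becomes more spacious while the sides are stretched.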

Fig.1 Field of view "60" (original setting)

Fig.2 Field of view "133"

(video demo in the link at the bottom)

Fig.3 The entry scene also uses the field of view to increase the apparent speed

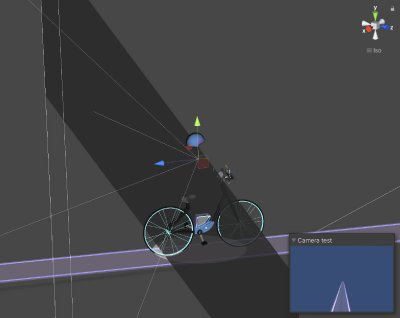

2. Render texture

I wanted to simulate a mirror on the bike using AR technology. I therefore created a second camera looking backwards and rendered its output to a render texture, which is applied to the material of an object. This creates the effect of a rear-view mirror in front of the bike.
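The render-texture idea above can be illustrated with a small Python sketch. It is a toy model rather than Unity code: `render_rear_view` is a hypothetical stand-in for the rear camera writing into a texture, and `sample` stands in for the mirror object's material reading from it. The horizontal flip is an assumption added here to show how a camera image can be made to read like a real mirror; the text does not say whether the project flipped the image.

```python
from typing import List

Texture = List[List[str]]


def render_rear_view() -> Texture:
    """Hypothetical stand-in for the rear camera rendering into a texture.
    Each entry is a 'pixel' described by what the camera sees there."""
    return [
        ["car", "road", "tree"],
        ["kerb", "road", "grass"],
    ]


def sample(texture: Texture, u: float, v: float, mirrored: bool = True) -> str:
    """Read the texture at normalised coordinates (u, v) in [0, 1].
    With mirrored=True the horizontal coordinate is flipped, so left and
    right are swapped as they would be in a physical mirror."""
    rows, cols = len(texture), len(texture[0])
    if mirrored:
        u = 1.0 - u
    col = min(int(u * cols), cols - 1)
    row = min(int(v * rows), rows - 1)
    return texture[row][col]


rear_texture = render_rear_view()

if __name__ == "__main__":
    print("raw camera, left edge:", sample(rear_texture, 0.0, 0.0, mirrored=False))
    print("mirror, left edge:    ", sample(rear_texture, 0.0, 0.0))
```

The separation matters more than the details: one component produces the image, and the surface that displays it only decides how to read it.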

Fig.4 Simulated mirror

Fig.5 Camera setting in Unity

Project File and Video Demo: https://drive.google.com/drive/folders/1vXwTOpUzUeeVzuxktVndXnd_EaUgPhsR?usp=sharing

- - - - - - - - - - - - - - - - - - - - - - - - - - - - - -

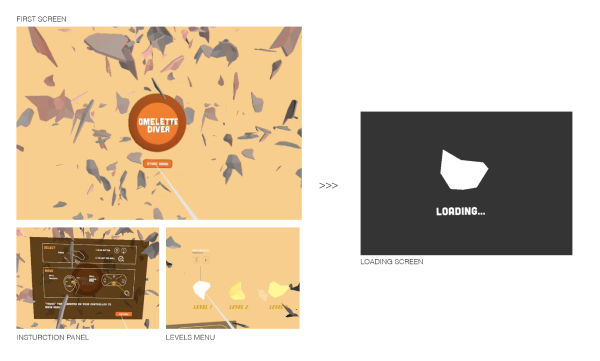

Omelette Diver - VR Game for Oculus Go

This is an extension of last semester's project, converting a dome game into a VR game for Oculus Go. The main tool is the XR Interaction Toolkit, which handles the players' input. In terms of interaction, one current difficulty for VR games is that the interactive elements of games in other formats cannot be transferred directly into VR. Therefore, it is hard to share the same VR environment with others. Omelette Diver hence became a single-player game that offers a new solo game experience.

The other limitation of Oculus Go is that only one controller is available. However, the game needs 8 movement actions: move forward, left, right, back, up and down, and rotate right and left. In addition to the trackpad on the controller, a VR panel was made to let the player control the full range of movement. To avoid confusion, every player goes through an instruction panel before starting the game.
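The eight movement actions listed above can be modelled as a small lookup table. The Python sketch below is an illustration under stated assumptions, not the game's actual input code: the action names, the `Pose` class, the step size and the turn angle are all hypothetical, and the axis convention (x to the right, y up, z forward) is chosen to resemble Unity's.

```python
import math
from dataclasses import dataclass


@dataclass
class Pose:
    x: float = 0.0
    y: float = 0.0
    z: float = 0.0
    yaw_deg: float = 0.0  # rotation around the vertical axis


# Six translations in the player's local space: (right, up, forward).
TRANSLATIONS = {
    "forward": (0, 0, 1), "back": (0, 0, -1),
    "left": (-1, 0, 0), "right": (1, 0, 0),
    "up": (0, 1, 0), "down": (0, -1, 0),
}
# Two rotations: sign of the yaw change.
ROTATIONS = {"rotate_left": -1.0, "rotate_right": 1.0}


def apply_action(pose: Pose, action: str,
                 step: float = 1.0, turn_deg: float = 15.0) -> Pose:
    """Return the new pose after one action (step and turn size are assumed)."""
    if action in ROTATIONS:
        yaw = (pose.yaw_deg + ROTATIONS[action] * turn_deg) % 360.0
        return Pose(pose.x, pose.y, pose.z, yaw)
    if action in TRANSLATIONS:
        lx, ly, lz = TRANSLATIONS[action]
        yaw = math.radians(pose.yaw_deg)
        # Rotate the local offset by the current yaw so that "forward"
        # always means the direction the player is facing.
        wx = lx * math.cos(yaw) + lz * math.sin(yaw)
        wz = -lx * math.sin(yaw) + lz * math.cos(yaw)
        return Pose(pose.x + wx * step, pose.y + ly * step,
                    pose.z + wz * step, pose.yaw_deg)
    raise ValueError(f"unknown action: {action}")


if __name__ == "__main__":
    pose = apply_action(Pose(), "rotate_right", turn_deg=90.0)
    pose = apply_action(pose, "forward", step=2.0)
    print(pose)
```

With a table like this, the trackpad and the additional VR panel can each trigger a subset of the same actions, so both inputs share one movement routine.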

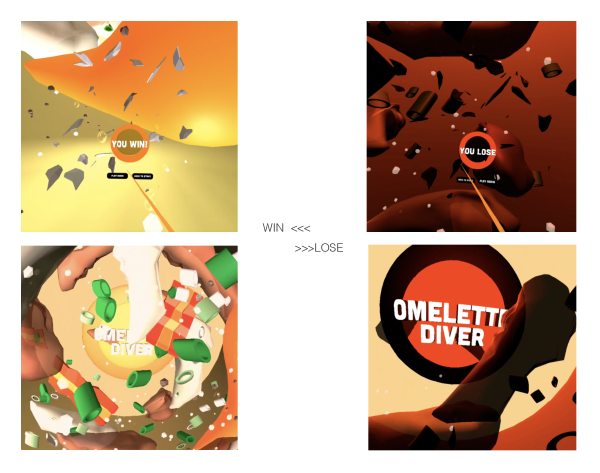

The difference in lighting and items amplifies the emotional contrast between winning and losing. In the win scene, a bright light shines from the egg shell and a particle system (from the Unity Asset Store) releases bubbles, which makes the scene look cheerful. The lose scene, on the other hand, has almost no light apart from the glow of the emissive material.

What I have learned this semester:

In general, I have become more capable of working with movement and interaction in Unity. In the Omelette Diver game, I worked with the input system to enable players to move around the environment and select items. The second project, the BBB Bike Sharing demo, is not made for a VR device, but it implements a static skybox that simulates a dynamic scene.

Discussion: