==[[Encapsulated//Encrypted]]==

''SoSe22 - Observe Experiment Connect''

''an interpretation of the digital privacy concept through auto-biographical photography practices''

==[[InterFace: how you see me]]==

''WiSe23 - Designing Utopias''

InterFace is an interactive tool that uses facial expressions to detect emotions and creates an additional layer of communication between the viewer and the wearer.

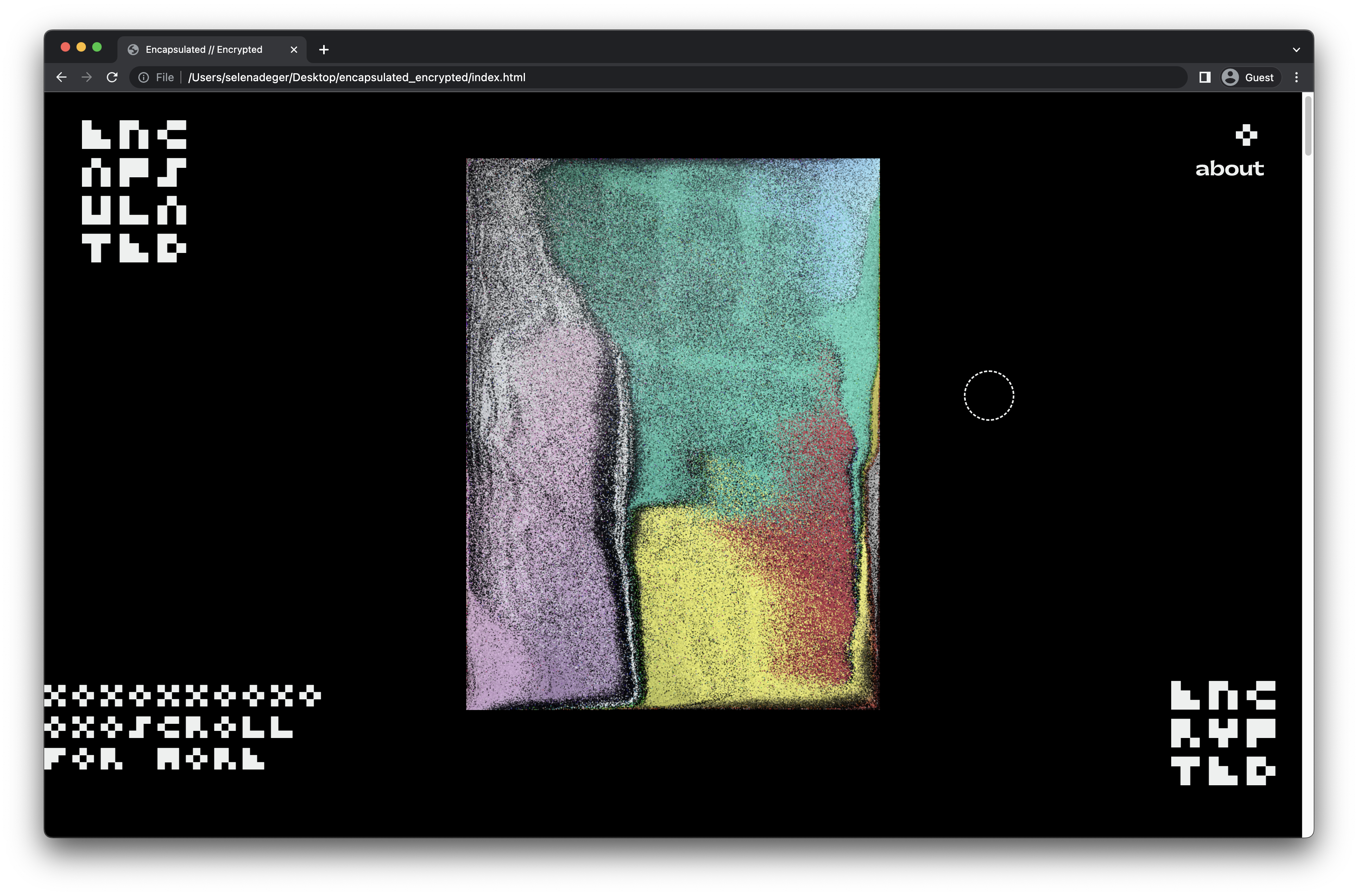

| =='''Encapsulated//Encrypted'''==

| | [[GMU:Designing_Utopias:_Theory_and_Practice/Selena_Deger]] |

| ''an interpretation of the digital privacy concept through auto-biographical photography practices''

| |

| | |

| [[File:selenade1.png]]

| |

| | |

| https://flux999.github.io/encapsulated/

| |

| | |

=='''abstract'''==

Photography is a relatively new medium of visual expression, and it has many contemporary interpretations, ranging from archiving to constructing a new identity for the self. Autobiographical photos in particular have become part of people’s identities, driven by newly emerging online platforms and the continuously changing worldviews of younger people. The purpose of photography has shifted from preserving memories of the past to documenting daily life. Unlike in the past, there is no need for a special occasion to remember: every day is to be recorded and sometimes shared with others. These archives of insignificant moments mirror people’s lives and enable them to curate and communicate their (digital) identities. With this paradigm shift in the use of the medium, photography has become even more democratized and has started to significantly affect social interactions, but this has also raised many concerns about privacy in online environments. Digital privacy is a pressing subject in everyone’s life, as we are living through a highly digitalized era in which most experiences are moving online and even social interactions are increasingly framed by screens.

=='''about the project'''==

The project, "Encapsulated//Encrypted", is built on my photo archive, which contains photos I took and photos of me taken by other people. The first part, "Encapsulated", refers to the archive in which I document many aspects of my private life: the place I live, the people I know, the places I have been, my face, my works, my cat… These photos encapsulate the essential moments of my life, and I don't share most of them online. However, I was curious about how my decision process works when I do share a photo online with people I barely know, while acknowledging that the photo will probably stay on the internet forever.

This is where the second part of the project, "Encrypted", comes in. Even though I have many concerns about the privacy of my life and am skeptical about sharing photos online, I gladly take part in online communities, and I am usually more disturbed by passively consuming content than by creating or sharing my own. The dilemma is that I don't want to share my life online, yet I want to share the photos I take, which are essentially depictions of my life and carry a lot of information about my identity and surroundings. Therefore, to share my photos, I have to encrypt them, so that they are based on authentic images but are no longer the photos in my gallery.

=='''process'''==

'''Machine Learning Model for Public/Private Assessment'''

''in collaboration with Tarkan Uskudar, Data Scientist''

To decide whether a photo can be shared online or should stay entirely private, I used a deep learning model trained on my past sharing decisions. The training data had two classes: photos I had already shared online (public) and a selection of photos from my archive that I explicitly labeled as private. Both classes were fed to the algorithm so that it could find the patterns that separate them.

The TensorFlow library is used to implement a convolutional neural network (CNN). The process has three steps. First, during preprocessing, the images are resized to 540x540 px to keep computation manageable. After resizing, data augmentation creates different representations of each image, namely flipped, zoomed, and rescaled versions of the photos, to improve the model's ability to generalize. Second, the model is trained: it uses convolutional layers with max pooling, the idea being to carry the meaning of the image through the layers and get information back from the model.
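Here is a minimal plain-JavaScript sketch of two of these operations, a horizontal flip (one of the augmentations) and 2x2 max pooling. It is an illustration under stated assumptions and not the project's TensorFlow code. The function names are hypothetical. The pixel buffer is assumed to use the same flat RGBA layout as p5.js's img.pixels. The pooling uses stride 2 and drops odd trailing rows and columns.

<source lang="javascript">
// Hypothetical helpers, not the project's TensorFlow code.

// Mirror an RGBA pixel buffer (4 values per pixel, row-major, like p5's
// img.pixels) from left to right: one of the "flipped" augmentations.
function flipHorizontal(pixels, width, height) {
  const out = new Uint8ClampedArray(pixels.length);
  for (let y = 0; y < height; y++) {
    for (let x = 0; x < width; x++) {
      const src = 4 * (y * width + x);
      const dst = 4 * (y * width + (width - 1 - x));
      for (let c = 0; c < 4; c++) out[dst + c] = pixels[src + c];
    }
  }
  return out;
}

// 2x2 max pooling with stride 2 on a single-channel feature map (array of rows).
// Each output value keeps only the strongest response of its 2x2 window.
function maxPool2x2(map) {
  const outH = Math.floor(map.length / 2);
  const outW = Math.floor(map[0].length / 2);
  const out = [];
  for (let y = 0; y < outH; y++) {
    const row = [];
    for (let x = 0; x < outW; x++) {
      row.push(Math.max(
        map[2 * y][2 * x], map[2 * y][2 * x + 1],
        map[2 * y + 1][2 * x], map[2 * y + 1][2 * x + 1]
      ));
    }
    out.push(row);
  }
  return out;
}

// Usage: one row holding a red pixel and a blue pixel.
const px = new Uint8ClampedArray([255, 0, 0, 255, 0, 0, 255, 255]);
console.log(JSON.stringify(Array.from(flipHorizontal(px, 2, 1)))); // [0,0,255,255,255,0,0,255]
console.log(JSON.stringify(maxPool2x2([[1, 3, 2, 0], [4, 2, 1, 5], [0, 1, 7, 6], [2, 8, 3, 4]]))); // [[4,5],[8,7]]
</source>

In the real model, TensorFlow's own augmentation and pooling layers do this work on tensors. The sketch only shows what each operation computes.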

After training, the results are evaluated and minor adjustments are made, reaching a final accuracy of 89%. Finally, a batch of 45 photos taken between September 2021 and 2022 is fed to the model, and the resulting privacy values are exported for use in the later image-processing steps.
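The following hypothetical sketch shows the evaluation and export step. It assumes the model outputs, for each photo, a probability that the photo is private (label 1) rather than public (label 0), and that a 0.5 threshold turns probabilities into labels. The function names, the threshold, and the JSON format are illustrative assumptions. The numbers in the usage lines are made up.

<source lang="javascript">
// Hypothetical sketch: the project's actual evaluation and export code is not shown.

// Share of photos whose thresholded prediction matches the true label.
// probs: assumed model outputs in [0, 1] (probability of "private")
// labels: 1 = private, 0 = public
function accuracy(probs, labels, threshold = 0.5) {
  let correct = 0;
  for (let i = 0; i < probs.length; i++) {
    const predicted = probs[i] >= threshold ? 1 : 0;
    if (predicted === labels[i]) correct++;
  }
  return correct / probs.length;
}

// Pair each file name with its privacy value and serialize the list as JSON,
// so that a later image-processing step can read it.
function exportPrivacyValues(fileNames, probs) {
  return JSON.stringify(fileNames.map((file, i) => ({ file, privacy: probs[i] })));
}

// Usage with made-up numbers
console.log(accuracy([0.9, 0.2, 0.7, 0.4], [1, 0, 0, 0])); // 0.75
console.log(exportPrivacyValues(["a.jpg", "b.jpg"], [0.9, 0.2]));
// [{"file":"a.jpg","privacy":0.9},{"file":"b.jpg","privacy":0.2}]
</source>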

'''Pixel Sorting Algorithm for Image Processing'''

I tried many methods to alter the photos, such as style transfer or mapping them onto other forms like Lissajous figures and randomized geometric shapes. Processing them through the colors they already carry turned out to be the most appealing and fruitful approach.

''trials with different algorithms:''

<gallery>
File:selenade3.png
File:selenade4.png
File:selenade5.png
</gallery>

A bubble-sort algorithm and features of the p5.js library are used to alter the images so that they show an "encrypted" version instead of the actual photo. First, the image's pixel values are read. Then, in a bubble-sort step, each pixel is compared with its right or lower neighbor, with the direction chosen at random. Horizontal comparisons use hue, and vertical comparisons use either hue or brightness. If the pixel's value is lower than its neighbor's, the two pixels swap places, so over many frames pixels with similar values gather together. Additionally, a random-color function recolors a few randomly chosen pixels, so each run of the program gives a slightly different result. The final images show flowing pixel paths and varied color gradings.
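A minimal sketch of the horizontal step follows, written without p5.js. It works on the same flat RGBA layout as img.pixels and uses the standard RGB-to-HSB hue formula as a stand-in for p5's hue(). Like hmovePixels in the code further down, it swaps a pixel with its right neighbor when its hue is lower. The names rgbToHue and sortRowPass are hypothetical. Repeating the pass until nothing moves turns it into a complete bubble sort. The project's draw() runs only one pass over the image per frame, which presumably is why the sorting unfolds gradually on screen.

<source lang="javascript">
// Hypothetical, p5-free sketch of one horizontal bubble-sort pass.

// Hue in degrees [0, 360) from 8-bit RGB (standard HSB/HSV hue formula).
function rgbToHue(r, g, b) {
  const max = Math.max(r, g, b);
  const min = Math.min(r, g, b);
  const d = max - min;
  if (d === 0) return 0; // greys have no hue; treat them as 0
  let h;
  if (max === r) h = ((g - b) / d) % 6;
  else if (max === g) h = (b - r) / d + 2;
  else h = (r - g) / d + 4;
  h *= 60;
  return h < 0 ? h + 360 : h;
}

// One left-to-right pass over row y of an RGBA buffer: swap a pixel with its
// right neighbour when its hue is lower, so higher hues drift to the left.
// Only r, g, b are swapped; alpha stays in place, as in the original sketch.
function sortRowPass(pixels, width, y) {
  let swaps = 0;
  for (let x = 0; x < width - 1; x++) {
    const a = 4 * (y * width + x);
    const b = a + 4;
    if (rgbToHue(pixels[a], pixels[a + 1], pixels[a + 2]) <
        rgbToHue(pixels[b], pixels[b + 1], pixels[b + 2])) {
      for (let c = 0; c < 3; c++) {
        const t = pixels[a + c];
        pixels[a + c] = pixels[b + c];
        pixels[b + c] = t;
      }
      swaps++;
    }
  }
  return swaps;
}

// Usage: red (hue 0), green (120) and blue (240) in a single row.
const row = new Uint8ClampedArray([255, 0, 0, 255, 0, 255, 0, 255, 0, 0, 255, 255]);
while (sortRowPass(row, 3, 0) > 0) {} // repeat passes until no swaps remain
console.log(JSON.stringify(Array.from(row))); // [0,0,255,255,0,255,0,255,255,0,0,255] (blue, green, red)
</source>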

''pixel sorting initial experiments:''

<gallery>
File:selenade6.jpg
File:selenade7.jpg
File:selenade8.png
File:selenade9.png
</gallery>

''code:''

<source style="border:none; height:650px; overflow:scroll;" lang="javascript" line start="55" highlight="4">
let img;
let imgCornerX;
let imgCornerY;
let counter = 0; // counts pixel visits; limits how long random recoloring runs

function preload() {
  img = loadImage("cover/cover11.jpeg");
}

function setup() {
  img.resize(600, 0); // 600 px wide, height scaled to keep the aspect ratio
  createCanvas(windowWidth, windowHeight);
  pixelDensity(1);

  // center the image on the canvas
  imgCornerX = (windowWidth - img.width) / 2;
  imgCornerY = (windowHeight - img.height) / 2;

  frameRate(10);
}

function draw() {
  img.loadPixels();

  // one sorting pass per frame; the loops stop one pixel short of the right and
  // bottom edges because each pixel is compared with its right or lower neighbour
  for (let i = 0; i < img.width - 1; i++) {
    for (let j = 0; j < img.height - 1; j++) {
      // pick a vertical or a horizontal comparison at random
      if (random(2) < 1) {
        vmovePixels(i, j);
      } else {
        hmovePixels(i, j);
      }

      // not to change every pixel during the process: random recoloring only
      // runs while the counter is below width * height * 50 (roughly 50 frames)
      counter++;
      if (counter < img.width * img.height * 50) {
        randomColor(floor(random(img.width)), floor(random(img.height)));
      }
    }
  }

  img.updatePixels();

  background(0);
  image(img, imgCornerX, imgCornerY);
  //filter(INVERT);
}

// occasionally assign a random color to pixel (i, j)
function randomColor(i, j, density = "low") {
  // "high" draws from a smaller range, so the 0.05 threshold is hit twice as often
  const randomValue = density === "high" ? random(50) : random(100);

  if (randomValue < 0.05) {
    const index = 4 * (j * img.width + i);
    img.pixels[index] = random(200);
    img.pixels[index + 1] = random(200);
    img.pixels[index + 2] = random(200);
  }
}

// read the r, g, b values of the pixel starting at array offset `index`
function rgbAt(index) {
  return [img.pixels[index], img.pixels[index + 1], img.pixels[index + 2]];
}

// exchange the r, g, b values of two pixels (alpha is left untouched)
function swapPixels(index, index3) {
  for (let c = 0; c < 3; c++) {
    const temp = img.pixels[index + c];
    img.pixels[index + c] = img.pixels[index3 + c];
    img.pixels[index3 + c] = temp;
  }
}

// bubble-sort step with the right-hand neighbour, compared by hue
function hmovePixels(x, y) {
  // locate the pixel and its neighbour in the flat RGBA array
  const index = 4 * (y * img.width + x);
  const index3 = 4 * (y * img.width + (x + 1));

  // swap when the pixel's hue is lower, so higher hues travel left
  if (hue(rgbAt(index)) < hue(rgbAt(index3))) {
    swapPixels(index, index3);
  }
}

// bubble-sort step with the pixel below, compared by hue or brightness
function vmovePixels(x, y) {
  const index = 4 * (y * img.width + x);
  const index3 = 4 * ((y + 1) * img.width + x);

  const a = random([hue, brightness]); // vertical move works with either hue or brightness
  if (a(rgbAt(index)) < a(rgbAt(index3))) {
    swapPixels(index, index3);
  }
}
</source>
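The following back-of-the-envelope calculation estimates how much random recoloring the sketch above performs; it is not part of the project code. It makes three assumptions. The image is a hypothetical 600x400 image, since img.resize(600, 0) fixes only the width. The density is the default "low", so a pixel is recolored when random(100) < 0.05, a probability of 0.0005. The frame rate is the 10 fps target set by frameRate(10). The function name recolorBudget is hypothetical.

<source lang="javascript">
// Estimate for the p5.js sketch above (not part of the project code).
// Each draw() call visits (w - 1) * (h - 1) pixels and calls randomColor once per
// visit while counter < w * h * 50; each call recolors a pixel with probability
// threshold / range (0.05 / 100 for the default "low" density).
function recolorBudget(w, h, fps = 10, threshold = 0.05, range = 100) {
  const callsPerFrame = (w - 1) * (h - 1);
  const limit = w * h * 50;
  const p = threshold / range;
  const framesWithRecoloring = Math.ceil(limit / callsPerFrame);
  return {
    callsPerFrame,
    expectedRecolorsPerFrame: callsPerFrame * p,
    framesWithRecoloring,
    seconds: framesWithRecoloring / fps,
  };
}

console.log(recolorBudget(600, 400)); // hypothetical 600x400 image
</source>

For that hypothetical size, each frame makes 239,001 randomColor calls. These recolor about 120 pixels per frame on average. The counter reaches its limit during the 51st frame, which is about 5 seconds at 10 fps. Because of the per-pixel hue() calls, real frames are probably slower than the target, so the actual duration is likely longer.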

'''Exhibition of the Final Images'''

The final visuals generated by the algorithm are exhibited on a website. The first thing visitors see when the page opens is an image processed on-site; these images work as covers and change whenever the page is refreshed.
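The following hypothetical sketch shows one way to choose a different cover on every refresh. The code above loads a single fixed file, cover/cover11.jpeg, so the numbering scheme cover/cover1.jpeg ... cover/coverN.jpeg and the function name pickCoverPath are assumptions and not the site's actual code. The random source is a parameter so that the choice can be tested deterministically.

<source lang="javascript">
// Hypothetical: assumes covers are numbered cover/cover1.jpeg ... cover/coverN.jpeg,
// extrapolated from the single path "cover/cover11.jpeg" in the p5.js sketch.
function pickCoverPath(count, rand = Math.random) {
  const n = Math.floor(rand() * count) + 1; // uniform integer in 1..count
  return `cover/cover${n}.jpeg`;
}

console.log(pickCoverPath(45)); // a different path on most page loads
</source>

In p5.js, preload() could then call loadImage(pickCoverPath(n)), with n the real number of covers, instead of using the fixed path.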

<gallery>
File:selenade2.png
File:selenade13.png
</gallery>

The collection is shown in a grid format, both because it resembles how contemporary social media presents visual media and because it shifts the focus from a single image to the collection as a whole.

<gallery>
File:selenade10.png
File:selenade11.png
File:selenade12.png
</gallery>

Computing the images on-site requires a lot of processing power and reduces the website's responsiveness. For exhibition purposes, a lite version was therefore made, in which a static video replaces the cover image and shows the process from the end to the beginning.

lite version:

[[File:selenade15.mp4]]

on-site computed version:

[[File:selenade16.mp4]]

=='''references'''==

''image processing inspirations''

- Coding Train - Pixel Arrays (https://www.youtube.com/watch?v=nMUMZ5YRxHI)

- Sortraits (https://wtracy.gitlab.io/sortraits/)

- Pixel sorting article on satyarth.me (http://satyarth.me/articles/pixel-sorting/)

- Kim Asendorf - Pixel Sorting with Threshold Control (https://github.com/kimasendorf/ASDFPixelSort)

''literature''

Barthes, R. (1981). ''Camera lucida: Reflections on photography'' (R. Howard, Trans.). New York: Hill and Wang.

Lehtonen, T.-K., Koskinen, I., & Kurvinen, E. (n.d.). ''Mobile digital pictures: The future of the postcard''. Retrieved September 28, 2022, from http://www2.uiah.fi/~ikoskine/recentpapers/mobile_multimedia/Mobiles_Vienna.pdf

Lury, C. (2007). ''Prosthetic culture: Photography, memory and identity''. Routledge.

van Dijck, J. (2008). Digital photography: Communication, identity, memory. ''Visual Communication'', 7(1), 57–76. https://doi.org/10.1177/1470357207084865

{{#ev:youtube|e1CXrPU11XQ}}