
Revision as of 10:59, 6 August 2014

Documentation of Gestalt Choir on 12th September

<videoflash type=vimeo>101410956|610|340</videoflash>

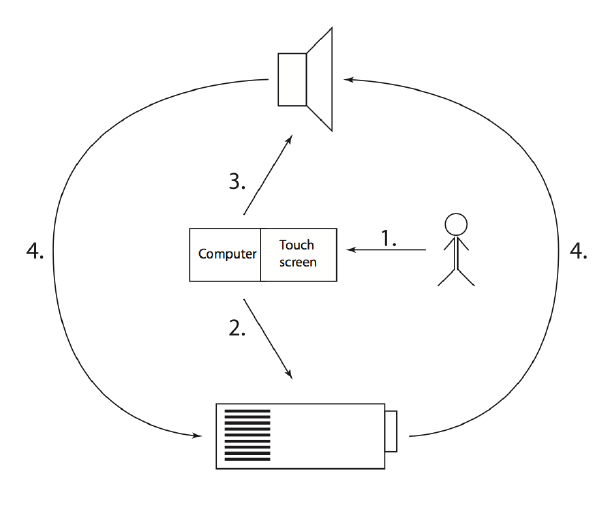

Final project sketch

1. Touch-screen input triggers generative visualizations. The performer can interact with them during the performance, and a MIDI controller or sensors change processes in the audio and/or the visuals.

2. The visuals are projected behind the performer.

3. At the same time, sound is generated from the data produced by the visualization.

4. The sound is analysed, and the resulting data triggers changes in the visualization. The new visualization data in turn changes the sound. The performer remains in the middle of this loop and can manually intervene in both processes.
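The loop in steps 1 to 4 can be sketched in a few lines. This is a minimal, hypothetical illustration, not the piece's actual implementation: it assumes the visuals are a handful of points whose heights are mapped to sine-oscillator frequencies (sonification), that the audio is "analysed" only by RMS loudness and a zero-crossing pitch estimate, and that a single controller value (standing in for a MIDI CC, range 0 to 127) scales both loudness and visual jitter. All function names, the 110 to 880 Hz range and the 8 kHz sample rate are assumptions chosen for illustration.

```python
import math
import random

SAMPLE_RATE = 8000         # assumed low rate so the sketch runs quickly
LOW, HIGH = 110.0, 880.0   # assumed frequency range for the sonification

def visuals_to_frequencies(points):
    """Sonification: map each point's height (0..1) to an oscillator frequency in Hz."""
    return [LOW + y * (HIGH - LOW) for _, y in points]

def synthesize(freqs, amp, duration=0.05):
    """Sum one sine oscillator per point into a mono buffer, scaled by amp."""
    n = int(SAMPLE_RATE * duration)
    scale = amp / max(len(freqs), 1)
    return [scale * sum(math.sin(2 * math.pi * f * i / SAMPLE_RATE) for f in freqs)
            for i in range(n)]

def analyse(buffer):
    """Analysis: RMS loudness and a rough pitch estimate from zero crossings."""
    n = len(buffer)
    rms = math.sqrt(sum(s * s for s in buffer) / n)
    crossings = sum(1 for a, b in zip(buffer, buffer[1:]) if (a < 0) != (b < 0))
    est_freq = crossings * SAMPLE_RATE / (2 * n)  # a sine crosses zero twice per cycle
    return rms, est_freq

def update_visuals(points, rms, est_freq, human):
    """Feedback: louder sound jitters points sideways; heard pitch pulls their heights."""
    target_y = min(max((est_freq - LOW) / (HIGH - LOW), 0.0), 1.0)
    new = []
    for x, y in points:
        x += random.uniform(-1.0, 1.0) * rms * human
        y += 0.2 * (target_y - y)
        new.append((min(max(x, 0.0), 1.0), min(max(y, 0.0), 1.0)))
    return new

def run(steps, controller=64, seed=0):
    """Run the visual -> sound -> analysis -> visual loop; controller is the human input."""
    random.seed(seed)
    # Random points stand in for the shapes created by touch-screen input.
    points = [(random.random(), random.random()) for _ in range(4)]
    history = []
    for _ in range(steps):
        human = controller / 127.0          # MIDI CC values run from 0 to 127
        buf = synthesize(visuals_to_frequencies(points), amp=human)
        rms, est_freq = analyse(buf)
        points = update_visuals(points, rms, est_freq, human)
        history.append((rms, est_freq))
    return points, history

if __name__ == "__main__":
    final_points, log = run(10, controller=100)
    for rms, pitch in log:
        print(f"rms={rms:.3f}  pitch~{pitch:.0f} Hz")
```

Turning the controller to 0 silences the audio and freezes the horizontal motion, which is one simple way the performer could "intervene in both processes"; a real setup would replace the zero-crossing estimate with proper spectral analysis.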

The piece can also be exhibited as an installation. This requires an empty room, 8 loudspeakers and 4 projectors.