
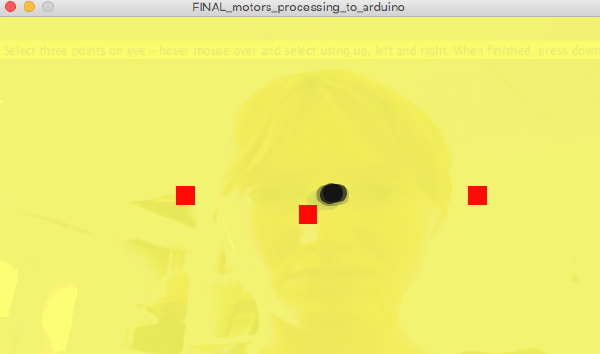




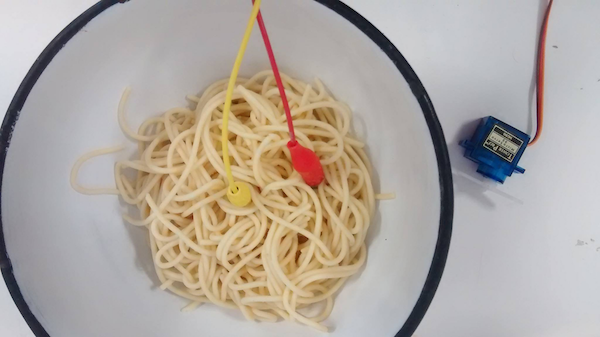


Revision as of 12:58, 10 June 2016

Project Overview

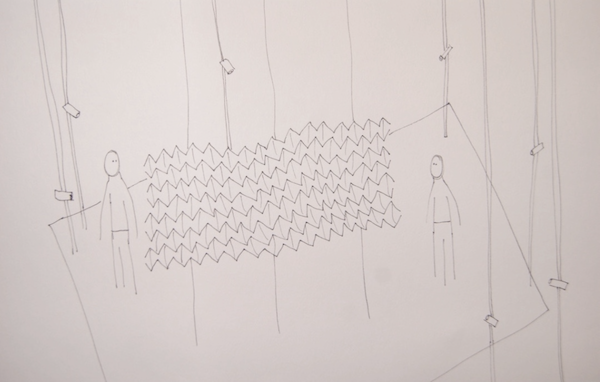

In this course I wanted to develop an interactive object for use with the performance platform. The idea is that the tracking system senses human movement and sends this data to the object, which responds in turn. Eventually, I want to build a wall or curtain structure that hangs between two people and acts as a medium of communication. I am particularly interested in the small, subconscious movements people make while interacting, and will concentrate on eye gaze as my sensory input.

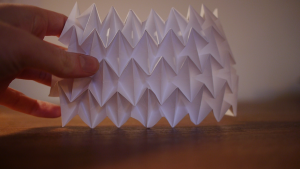

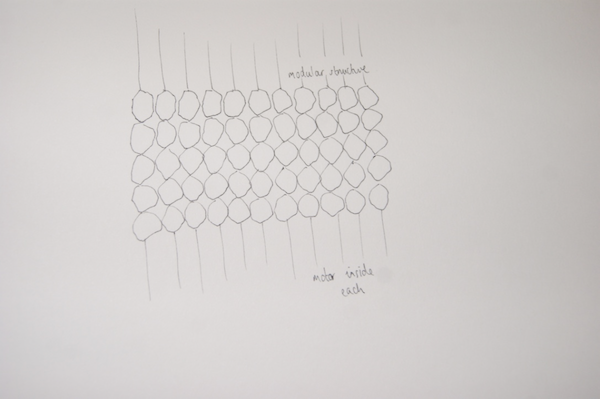

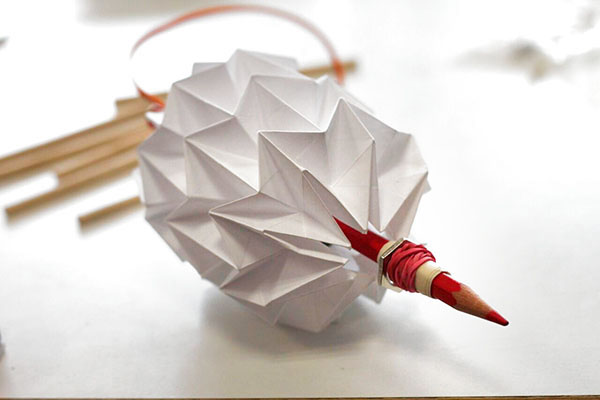

The wall will be made up of units, each an origami structure with the ability to move individually in response to the eye movements of the interactors. In this course I have experimented with various ways of achieving this movement and also with various sensory inputs.

Outline of installation in performance platform (12 cameras surrounding for tracking)

One continuous origami structure

Units of origami

Technical Implementation

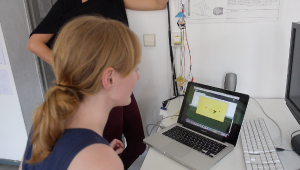

For the sensor I used an eye tracking Processing sketch that I developed in a previous course, and tweaked it to send messages to the Arduino when your eyes hit certain targets on the screen. Each target sets off a different motor. The two programs communicate over the serial port.

Link to video of eye tracking programme: https://vimeo.com/163158806

*Link to Processing code

*Link to Arduino code
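
The target-to-motor messaging described above could look roughly like the sketch below. It is not the linked project code: the `Target` struct, the three-target screen layout, the function names and the one-letter message format ('A' for motor 0, 'B' for motor 1, and so on) are all assumptions made for illustration. The first two functions stand in for the eye tracking side, the last one for the Arduino side.

```cpp
#include <array>
#include <cstdint>

// Hypothetical screen target: a rectangle tied to one motor.
struct Target {
    int x, y, w, h;      // top-left corner and size in pixels
    std::uint8_t motor;  // index of the motor this target sets off
};

// Assumed layout: three targets side by side on a 600x200 pixel screen.
constexpr std::array<Target, 3> kTargets{{
    {0,   0, 200, 200, 0},
    {200, 0, 200, 200, 1},
    {400, 0, 200, 200, 2},
}};

// Sender side: return the motor index for the target under the gaze
// point, or -1 if the gaze is not on any target.
int motorForGaze(int gazeX, int gazeY) {
    for (const Target& t : kTargets) {
        const bool insideX = gazeX >= t.x && gazeX < t.x + t.w;
        const bool insideY = gazeY >= t.y && gazeY < t.y + t.h;
        if (insideX && insideY) return t.motor;
    }
    return -1;
}

// Sender side: encode a motor index as a single byte to write to the
// serial port. Returns '\0' when there is nothing to send.
char serialMessageFor(int motor) {
    return motor < 0 ? '\0' : static_cast<char>('A' + motor);
}

// Receiver side: decode a received byte back into a motor index,
// or -1 if the byte is not a known message.
int motorFromMessage(char message) {
    const int motor = message - 'A';
    return (motor >= 0 && motor < static_cast<int>(kTargets.size())) ? motor : -1;
}
```

Sending one byte per event keeps the receiving side simple: the Arduino only has to read a single character and look up which motor to move, with no parsing of longer messages.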

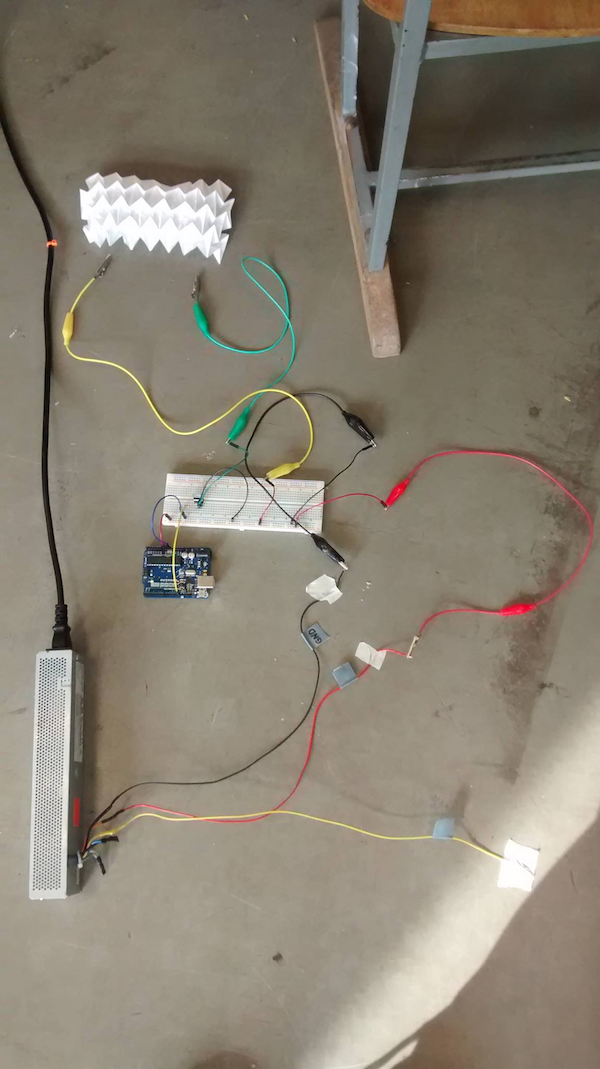

Prototyping Experiments

An experiment using spaghetti as a sensor: its variable resistance sits in a voltage divider circuit, which sends values to the Arduino analogue input pin.

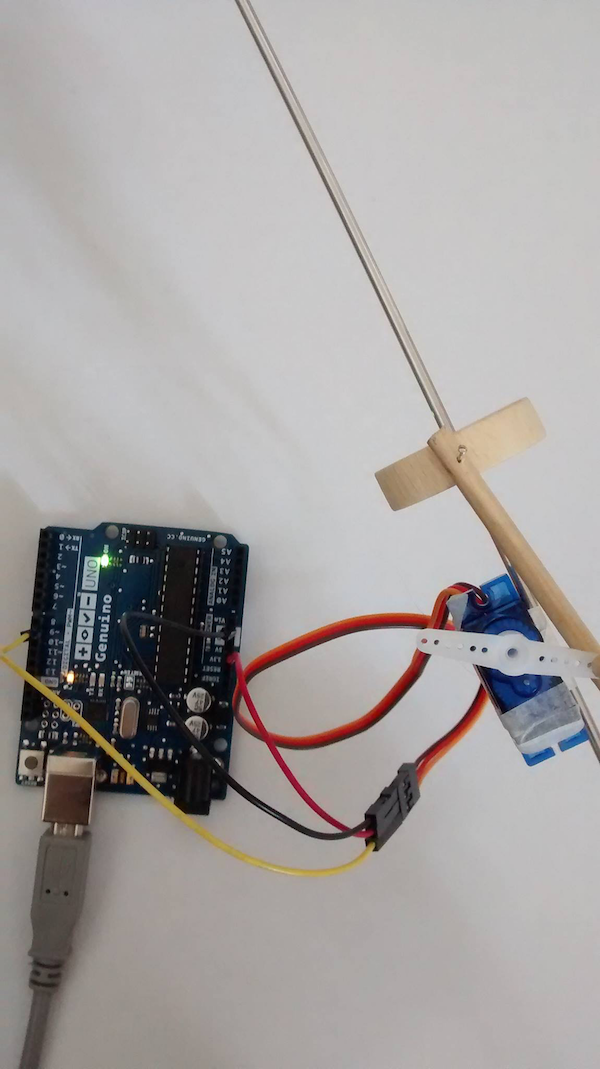

Here is an experiment with 'Muscle Wire', which shrinks when certain currents are applied. I tried sewing it into the paper to see what kinds of movement it could produce. The result was subtle, slow movement that was too slight for this project, so I looked again at servo motors.