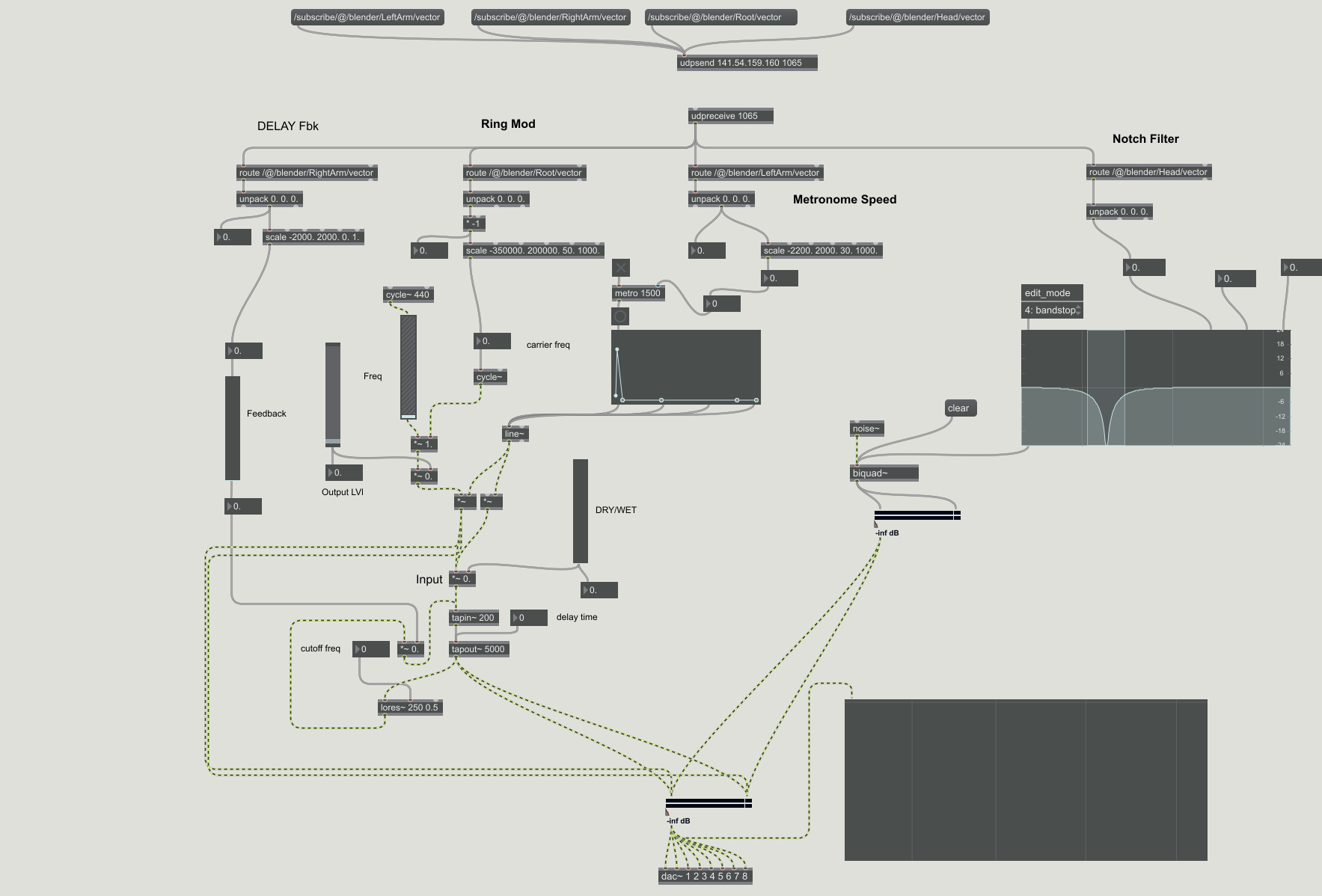

[[File:subscriber_EColin_1.png]] | |||

Revision as of 10:28, 14 February 2019

My name is Etienne COLIN. I am currently pursuing a Master's degree in Image and Sound Sciences, Arts and Techniques, with a specialisation in sound engineering, at Aix-Marseille University (France).

The Performance Platform of the Bauhaus University was a great opportunity for me to discover new working methods in immersive and interactive media. I had the opportunity to be introduced to Max, Pure Data and OSC.

I was eager to familiarize myself with the state-of-the-art equipment of the Performance Platform in order to experiment with interactions between a performer and a video, and especially with their potential regarding sound. The aim of my experiment was to generate and control sounds through body movements using the Captury Live tracking system.

The experiment was a real challenge because I had to quickly familiarize myself with how Max and the Captury Live system work, and with OSC data, which I had never dealt with before.

Most of the difficulties I encountered were due to my limited knowledge of how Max works, and especially of how to generate sound with it. I did not want to use recorded sounds but rather to generate them directly in the Max patch, so I could only work with basic oscillators and noise generators. The possible controls on these sources were therefore very limited, and I decided that the body's movements would instead act on the effects applied to these basic signals.

I thus decided that the Root position (x coordinate) in the space would control the carrier frequency of a ring modulation, and that the Head position (x coordinate) would control the center frequency of a notch filter. In that way, when the listener moves across the platform, the modulation frequency changes and the filter produces a sweep effect.
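The position-to-frequency mapping described above can be sketched outside Max as follows. This is only an illustrative Python sketch, not the actual patch: the coordinate range (-2 m to 2 m), the frequency ranges and the helper names `scale` and `ring_modulate` are all assumptions made for the example, and the notch filter itself is left out (only its center frequency is computed).

```python
import math

SAMPLE_RATE = 44100  # assumed sample rate in Hz


def scale(value, in_lo, in_hi, out_lo, out_hi):
    """Map value linearly from [in_lo, in_hi] to [out_lo, out_hi].

    Loosely modelled on the idea of a range-mapping object in a patch;
    unlike a plain linear map, the input is clamped to its range here.
    """
    value = min(max(value, in_lo), in_hi)
    t = (value - in_lo) / (in_hi - in_lo)
    return out_lo + t * (out_hi - out_lo)


def ring_modulate(samples, carrier_hz, sample_rate=SAMPLE_RATE):
    """Ring modulation: multiply each input sample by a sine carrier."""
    return [
        s * math.sin(2.0 * math.pi * carrier_hz * n / sample_rate)
        for n, s in enumerate(samples)
    ]


# Hypothetical tracking values (x coordinates in metres, assumed range -2..2).
root_x = 0.0
head_x = 1.0

# Root x drives the ring-modulation carrier (assumed range 50..1000 Hz).
carrier_hz = scale(root_x, -2.0, 2.0, 50.0, 1000.0)

# Head x drives the notch center frequency (assumed range 200..5000 Hz).
notch_hz = scale(head_x, -2.0, 2.0, 200.0, 5000.0)

# Apply the ring modulation to a short constant test signal.
modulated = ring_modulate([1.0] * 8, carrier_hz)
```

Clamping the input keeps the frequencies within a safe range if the tracked joint leaves the assumed area of the platform.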

The elevation of the left arm (y coordinate) controls the speed of a metronome that triggers a fixed-frequency tone shaped by an envelope generator. The elevation of the right arm (y coordinate) controls the feedback of a delay applied to the same source. When the arm is down, there is no delay (feedback = 0); when the arm is raised to the maximum, the feedback goes up to 0.7. The combination of interactions with both arms was meant to have a really playful effect on the listener, who literally controls the "instrument" like a conductor.
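As an illustration of the right-arm control, the sketch below maps an arm height to a feedback amount between 0 and 0.7 and applies a simple feedback delay. It is a Python approximation under stated assumptions, not the Max patch itself: the arm heights `y_down` and `y_up`, the delay length and the function names are hypothetical.

```python
def arm_to_feedback(arm_y, y_down=0.8, y_up=2.0, max_feedback=0.7):
    """Map arm elevation to delay feedback.

    y_down and y_up (metres) are assumed heights for a lowered and a fully
    raised arm; the result is clamped to the range 0..max_feedback.
    """
    t = (arm_y - y_down) / (y_up - y_down)
    t = min(max(t, 0.0), 1.0)
    return t * max_feedback


def feedback_delay(samples, delay_samples, feedback):
    """Feedback delay: y[n] = x[n] + feedback * y[n - delay_samples]."""
    buffer = [0.0] * delay_samples  # circular buffer of past outputs
    out = []
    idx = 0
    for x in samples:
        y = x + feedback * buffer[idx]
        buffer[idx] = y
        idx = (idx + 1) % delay_samples
        out.append(y)
    return out


# A single impulse makes the echoes easy to see.
impulse = [1.0, 0.0, 0.0, 0.0, 0.0]

# Arm down: feedback is 0, so the signal passes through without echoes.
dry = feedback_delay(impulse, 2, arm_to_feedback(0.8))

# Arm fully raised: feedback is 0.7, each echo is 0.7 times the previous one.
wet = feedback_delay(impulse, 2, arm_to_feedback(2.0))
```

Keeping the maximum feedback below 1 guarantees that the echoes decay instead of building up.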

I must admit that I was frankly disappointed with the final result, but I still want to go further in this direction: the possibilities offered by Max are really impressive and have made me want to devote myself seriously to it.