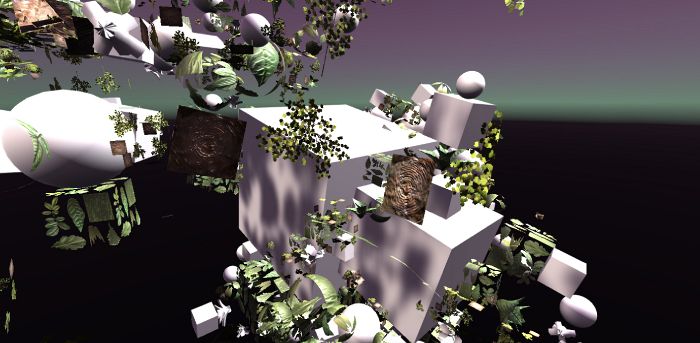

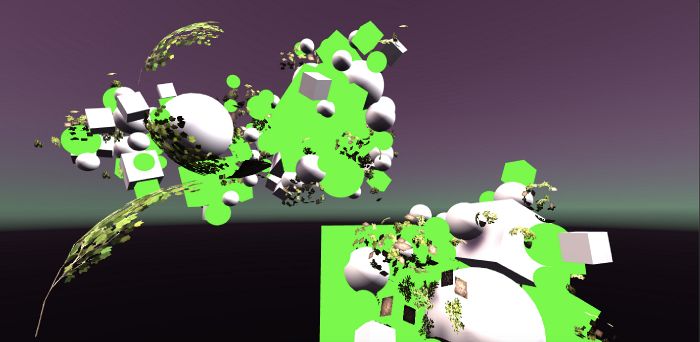

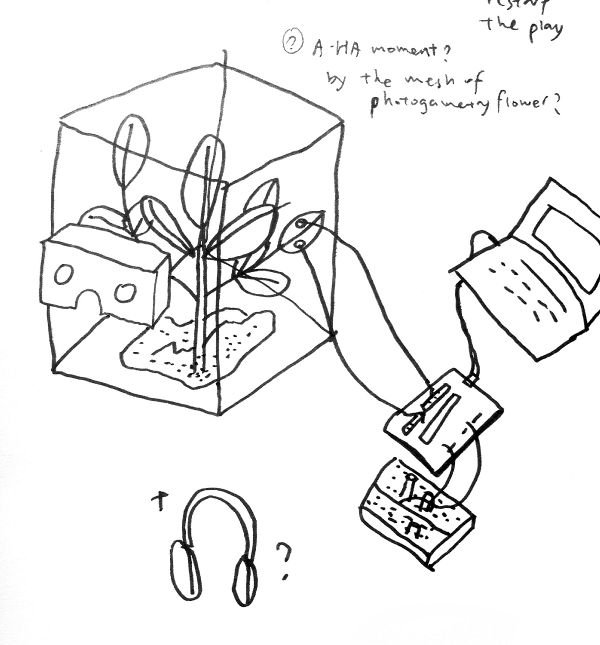

Latest revision as of 08:46, 20 June 2019

I am interested in self-generated graphics and in the similarities between the characteristics of algorithms and of biology. To explore this, I have created a self-growing fractal in Unity in which selected materials are applied randomly to selected elements. Each run creates a different fractal. I would like to build an installation in which viewers can start a run on their own and view the resulting graphics through Google Cardboard glasses.
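The growth idea can be illustrated with a minimal sketch. It is written in Python rather than as a Unity script, and it is not the project's actual code: the material names, the branching factor, and the `material_chance` parameter are all assumptions made for the example. Each element either inherits its parent's material or picks a new one at random, so every run with a different seed gives a different fractal.

```python
import random
from dataclasses import dataclass, field

# Hypothetical material names; the real project uses Unity materials.
MATERIALS = ["moss", "bark", "glass"]


@dataclass
class Branch:
    depth: int
    material: str
    children: list = field(default_factory=list)


def grow(depth, max_depth, rng, branching=3, material_chance=0.5,
         inherited=MATERIALS[0]):
    """Recursively grow a tree-shaped fractal.

    With probability `material_chance` an element gets a randomly chosen
    material; otherwise it keeps the material of its parent.
    """
    if rng.random() < material_chance:
        material = rng.choice(MATERIALS)
    else:
        material = inherited
    node = Branch(depth, material)
    if depth < max_depth:
        node.children = [
            grow(depth + 1, max_depth, rng, branching, material_chance, material)
            for _ in range(branching)
        ]
    return node


def count(node):
    """Count all elements in the fractal."""
    return 1 + sum(count(child) for child in node.children)


def materials_used(node):
    """Collect the set of materials that appear anywhere in the fractal."""
    used = {node.material}
    for child in node.children:
        used |= materials_used(child)
    return used


if __name__ == "__main__":
    fractal = grow(0, 3, random.Random())  # unseeded: differs on every run
    print(count(fractal), "elements, materials:", sorted(materials_used(fractal)))
```

Passing a seeded `random.Random` makes a particular fractal reproducible, which may be useful when testing the installation.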

The installation will consist of a Google Cardboard, a game controller, an organism (a small greenhouse or simply a plant), and possibly headphones.

Max/MSP will do more than act as the switch that starts each run in Unity: it will also pass data from the organism to Unity to change the rotation speed of the fractal, and it may generate sound from the plant's signal.
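One possible way to turn a plant signal into a rotation speed is sketched below, again in Python and only as an illustration. The input range (0 to 1023, as from a 10-bit sensor), the output range in degrees per second, and the smoothing factor are assumptions, not values from the project. In the installation this mapping would sit in Max/MSP or in a Unity script.

```python
def map_range(value, in_min, in_max, out_min, out_max):
    """Linearly map a sensor reading to an output range, clamping at the ends."""
    if in_max == in_min:
        raise ValueError("input range must not be empty")
    t = (value - in_min) / (in_max - in_min)
    t = min(1.0, max(0.0, t))  # keep out-of-range readings inside the limits
    return out_min + t * (out_max - out_min)


class Smoother:
    """Exponential moving average, so a noisy signal does not make the fractal jitter."""

    def __init__(self, alpha=0.2):
        self.alpha = alpha  # 0 < alpha <= 1; smaller means smoother
        self.value = None

    def update(self, sample):
        if self.value is None:
            self.value = float(sample)
        else:
            self.value += self.alpha * (sample - self.value)
        return self.value


if __name__ == "__main__":
    smoother = Smoother(alpha=0.2)
    # Hypothetical raw readings from the plant sensor.
    for reading in [300, 320, 900, 310, 305]:
        speed = map_range(smoother.update(reading), 0, 1023, 10.0, 90.0)
        print(f"reading={reading:4d}  rotation speed={speed:5.1f} deg/s")
```

Smoothing before mapping keeps a single spike in the reading from abruptly changing the rotation speed.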