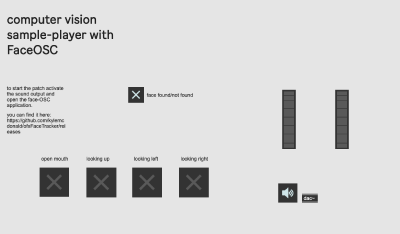

I built a patch that uses computer vision to control sound playback with head gestures.

Here is the patch, along with a video that explains how to use it and what you can do with it.

[[:File:facetracking_sampler_leon_g.maxpat]]

{{#ev:youtube|mef2j1WzQoI}}

The patch was developed while building an interactive installation that experiments with extending language through the combination of sound and language.

I built the prototype, compo, which serves as an instrument for creating future interactive compositions between words and music.

It is documented here: https://wwws.uni-weimar.de/kunst-und-gestaltung/wiki/GMU:Artists_Lab_IV/Leon_Goltermann

Latest revision as of 15:12, 14 May 2021
