I am interested in tracking small, subconscious movements made by humans. Last semester I developed an eye tracking programme, made in Processing, which uses colour selection and face recognition to draw the paths of movements made by eyes.

With an earlier version of the programme, I made some tests with recordings of my eyes whilst using a computer, starting after ten minutes of surfing the internet.

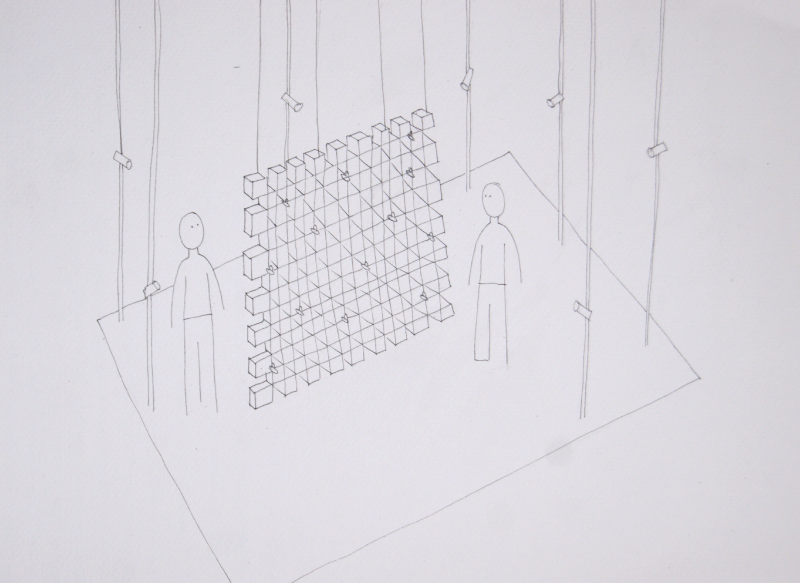

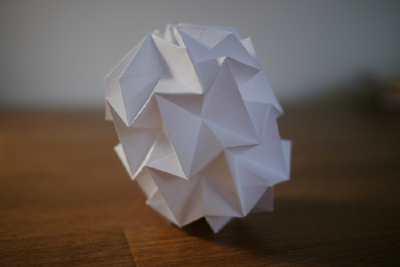

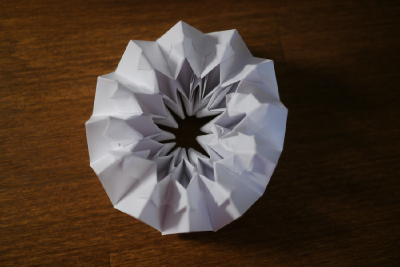

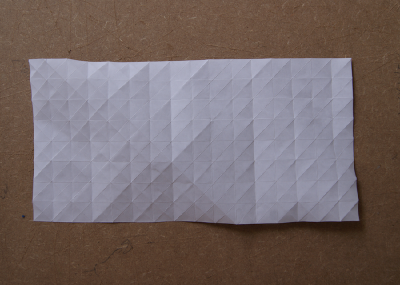

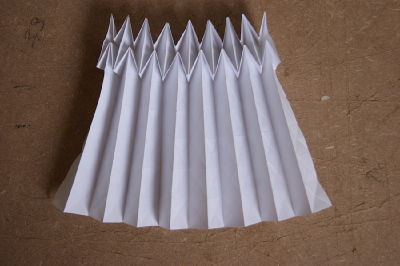

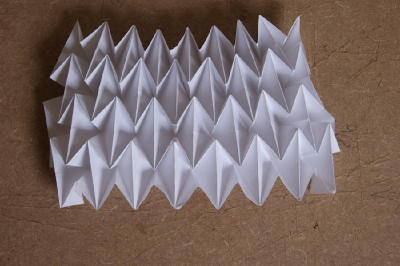

Now I want to bring this into the space of the performance platform and map tiny eye movements onto a moving 3D object. This object will be a hanging paper 'wall' that blocks two people's view of one another. Standing opposite each other yet unable to see one another, the two participants can communicate or interact only by moving the wall, both intentionally and unintentionally. Upon entering the space, each person will find it obvious that someone else is present, but it will not be immediately clear what is causing the structure to move. The eye movements of each person will compete or combine to create the overall motion. I want to track the paths made by the combined interactions of humans, eye movements and the object in order to determine whether we tend to fall into the same patterns of motion, individually or as a group.

How it works

The Processing sketch picks up eye movement data via the cameras and activates servo motors in the structure, causing the wall to move at the locations 'hit' by the gazes. The paper wall is constructed so that each motor activation sends a ripple through the entire structure.
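
The mapping from gaze to motor could look roughly like the sketch below. It is written in plain Java (the language underneath Processing) and is only an illustration: the class name `GazeServoMap`, the 4 x 3 grid of servos, and the rest and hit angles are all assumptions of mine, not details of the actual build. It divides the camera frame into a grid, finds the cell each gaze point falls in, and sets the servo for that cell to a 'hit' angle while the others stay at rest.

```java
import java.util.Arrays;

// Hypothetical sketch: grid size, angles and all names are assumptions.
class GazeServoMap {
    static final int COLS = 4;          // assumed number of servo columns in the wall
    static final int ROWS = 3;          // assumed number of servo rows in the wall
    static final int REST_ANGLE = 90;   // assumed resting servo angle in degrees
    static final int HIT_ANGLE = 120;   // assumed angle for a servo 'hit' by a gaze

    // Maps a gaze point in camera pixels to a servo index (row-major),
    // or returns -1 if the point lies outside the camera frame.
    static int servoIndex(float gazeX, float gazeY, int frameW, int frameH) {
        if (gazeX < 0 || gazeY < 0 || gazeX >= frameW || gazeY >= frameH) {
            return -1;
        }
        int col = Math.min(COLS - 1, (int) (gazeX * COLS / frameW));
        int row = Math.min(ROWS - 1, (int) (gazeY * ROWS / frameH));
        return row * COLS + col;
    }

    // Builds the target angle for every servo: servos hit by any gaze
    // go to HIT_ANGLE, all others stay at REST_ANGLE.
    static int[] servoAngles(int... hits) {
        int[] angles = new int[COLS * ROWS];
        Arrays.fill(angles, REST_ANGLE);
        for (int hit : hits) {
            if (hit >= 0 && hit < angles.length) {
                angles[hit] = HIT_ANGLE;
            }
        }
        return angles;
    }
}
```

With two people, each gaze would be passed through `servoIndex` separately and both results handed to `servoAngles`, so two gazes landing on the same cell simply combine into one hit. Sending the resulting angles to the motors is left out here, since it depends on the hardware used.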

Video of eye tracking programme