Alone

— Connection and Communication

Idea

We’re born alone, we live alone, we die alone. Only through our love and friendship can we create the illusion for the moment that we’re not alone.

— Orson Welles

Humans are complex and carry many kinds of emotions inside, only a little of which can be observed from the outside. From appearance alone, one can never understand much about another person. The better way is to get close by putting yourself in the other's position, be friendly, and make an effort to create a channel of communication for mutual understanding. After the connection is formed, it becomes unstable once we stop talking or sharing feelings with each other, and it needs care from both sides to be maintained. Distance is another important factor that affects the link. ‘Distance’ here means not only geographical distance, but also the distance between hearts, our own inner environment. When we are parted from others for a while, we always need some time and some action to get close again and rebuild the link. As human beings, we cannot live alone without somehow losing the meaning of being alive. So, when we have no connection with others for a long time, our value or existence is eroded and, little by little, washed out of the world...

Concept

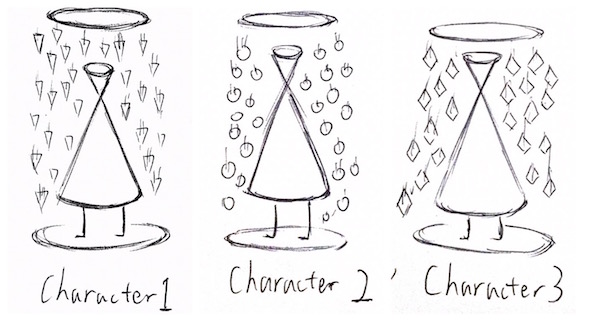

I want to create a virtual scene where participants wearing VR devices can walk around with the purpose of creating connections with others. Each participant in the motion tracking system has a corresponding virtual character surrounded by a fluid shield or wall of falling shapes (triangles, circles, squares, etc.). Each character has its own characteristic shape, like the picture below.

From the outside, however, the characters just look as if they are covered by a rain of triangles, circles, or squares. This image conveys the unclear, vague, unknown, mysterious feel of a stranger. It is only possible to see what is behind the ‘rain’ when participants create a connection with each other.

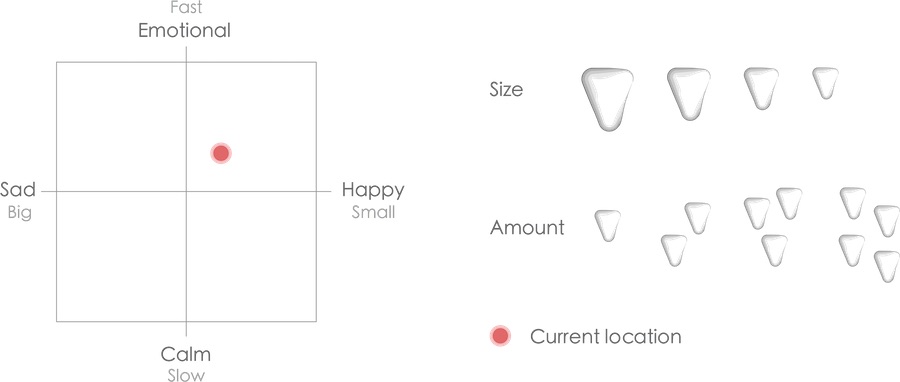

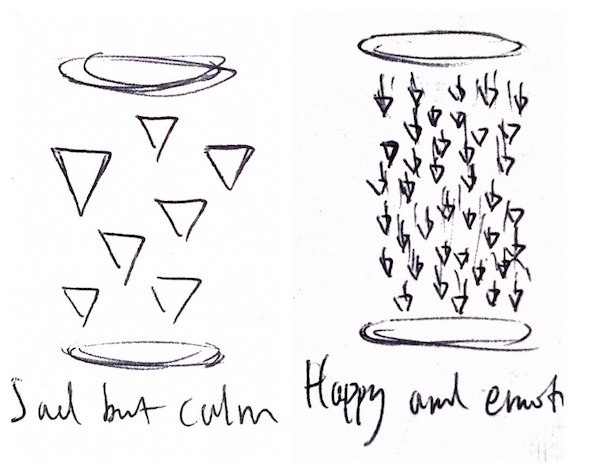

The size and speed of the ‘rain’ depend on which zone the participant is standing in. As in the picture below, the platform is divided into four quadrants according to the diagram.

The horizontal axis represents your mood, and the vertical axis shows how emotional you are. That is to say, the happier you are, the smaller the shapes will be; the more emotional you are, the faster the shapes will drop, and vice versa. Thus, the inner feeling is partly shown by the ‘rain’. A participant can get a general idea of others’ emotions, so participants will try to move closer and match a similar emotional state for further connection.
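The mapping from platform position to ‘rain’ could be sketched roughly as follows. This is only a minimal illustration, not the project's actual code: the function name `rain_parameters`, the normalized range of -1 to 1 for both axes, and the size and speed limits are all assumptions made for the example.

```python
def rain_parameters(x, y, min_size=4.0, max_size=40.0,
                    min_speed=1.0, max_speed=10.0):
    """Map a platform position to (shape size, fall speed).

    x: mood axis, from -1.0 (unhappy) to 1.0 (happy)          -- assumed range
    y: emotion axis, from -1.0 (calm) to 1.0 (very emotional) -- assumed range
    The size and speed limits are hypothetical values.
    """
    # Clamp the position so it always stays on the platform.
    x = max(-1.0, min(1.0, x))
    y = max(-1.0, min(1.0, y))

    happiness = (x + 1.0) / 2.0   # 0.0 = unhappy, 1.0 = happy
    intensity = (y + 1.0) / 2.0   # 0.0 = calm,    1.0 = very emotional

    # Happier -> smaller shapes; more emotional -> faster fall.
    size = max_size - happiness * (max_size - min_size)
    speed = min_speed + intensity * (max_speed - min_speed)
    return size, speed


if __name__ == "__main__":
    for position in [(1.0, 1.0), (-1.0, -1.0), (0.0, 0.0)]:
        print(position, rain_parameters(*position))
```

A linear mapping is the simplest choice here; an eased curve would work equally well if the change should feel softer near the center of the platform.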

Besides, participants will hear their own melody, matching their ‘inner feeling’, as they walk around the four quadrants. This makes participants aware of how they ‘feel’ in this virtual simulation.

To create a channel of communication with another participant, one just needs to wave a hand and spread part of the ‘rain’ to the other. The other participant is notified by the sound of that ‘rain’ hitting their own. As in the picture here, A waves and B waves back, and then they are able to connect to each other. You can see elements of each flowing in both directions. Once the connection is built, they can see each other a bit more, though not entirely, and they can talk to each other directly or use body language.

When there is no communication of any kind, or the distance between the participants is too great, the channel slowly closes. After the characters part, they carry a few shapes from the other characters, symbolizing the influence others have had on them. But as time goes on, those shapes slowly disappear, indicating that the character is forgetting the others. Maybe then it is time for a reunion.

Additionally, when a participant goes too long without making connections with others, the character in the virtual scene slowly fades away… As mentioned above, human existence becomes meaningless without connection and communication with others.

In a word, by letting participants play a role in the interactive scene, the concept emphasizes the necessity and significance of connection and communication with others.
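One possible way to model the slowly closing channel is a strength value that grows while two participants communicate within range and decays otherwise. The sketch below is purely illustrative: the `Channel` class, the distance threshold, and the opening and closing rates are hypothetical and not taken from the project.

```python
from dataclasses import dataclass


@dataclass
class Channel:
    """Connection between two participants; strength runs from 0.0 to 1.0."""
    strength: float = 1.0

    def update(self, dt, distance, communicating,
               max_distance=3.0, close_rate=0.1, open_rate=0.5):
        """Advance the channel by dt seconds.

        max_distance, close_rate and open_rate are assumed values.
        """
        if communicating and distance <= max_distance:
            # Talking or gesturing within range strengthens the link.
            self.strength = min(1.0, self.strength + open_rate * dt)
        else:
            # Silence or too much distance slowly closes it.
            self.strength = max(0.0, self.strength - close_rate * dt)
        return self.strength

    @property
    def is_open(self):
        return self.strength > 0.0


if __name__ == "__main__":
    channel = Channel()
    for second in range(12):
        channel.update(dt=1.0, distance=5.0, communicating=False)
    print(channel.strength, channel.is_open)
```

The same decay could drive how many borrowed shapes a character still carries, so that forgetting and the closing channel follow one timeline.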

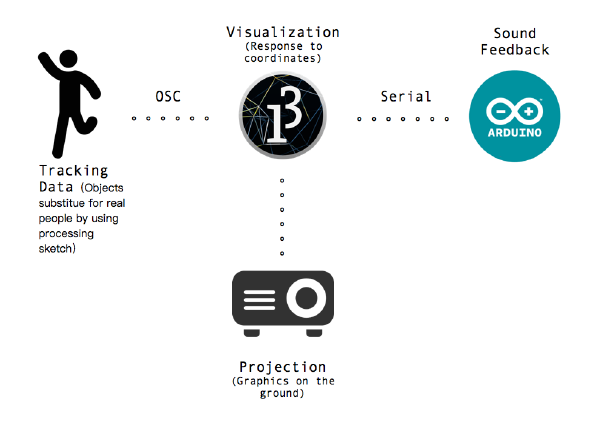

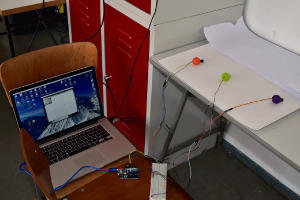

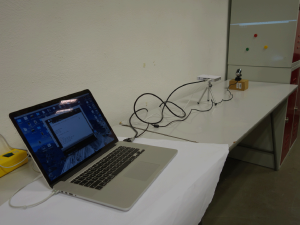

Technical setup

My original idea of using Blender and Unity or Unreal Engine to create a VR environment turned out to be too complicated: it is hard to set up with simple VR sets like Google Cardboard and to let multiple participants interact at the same time. So I finally chose an easier approach for my project, still in a kind of role-play mode. Starting from the 3D sketch, I developed the concept into a plain and simple way of showing the rain around the participants using a projector.

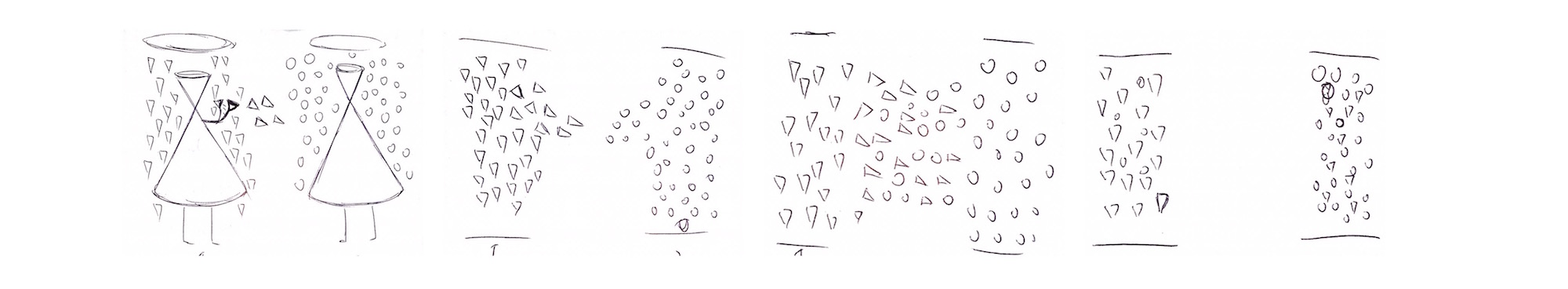

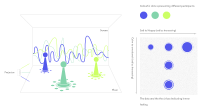

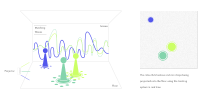

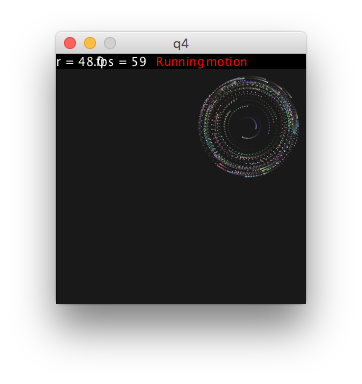

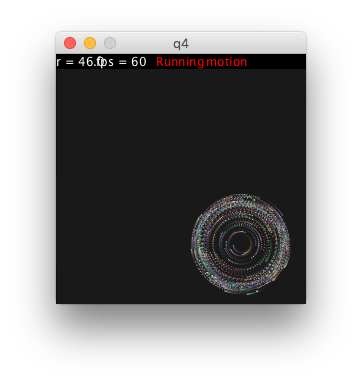

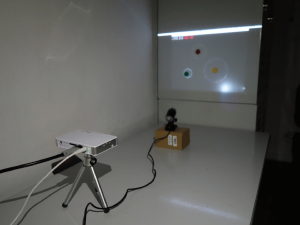

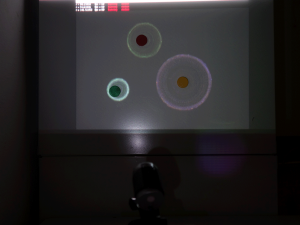

Just as in the pictures on the left side, I will use a tracking system to locate the participants and project the dots of rainfall onto the ground where the participants are actually standing. The circles and dots change size according to the position of the participants. The patterns indicate the 'inner feeling' of the participants. (The feeling is not your real feeling but a simulated one in this specific environment.) At the same time, the screen behind displays a corresponding curve, like an electrocardiogram, for each participant in a different color, with its own rhythm of sound. By looking at the curves on the screen and hearing the sound, the participants can get a notion of others' 'feeling' in this interactive 'game'. When people get close, the rhythms become alike and the dots merge.

Updates:

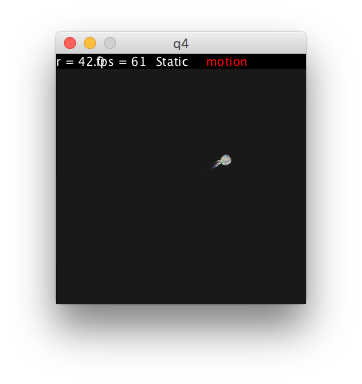

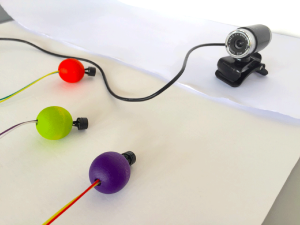

Instead of the tracking system, I am using the color detection sketch from Processing to capture the movements of certain objects. Ideally, it should be able to track several single-color objects. In the real world, however, the webcam is just not accurate enough for the tracking, and the result depends heavily on the lighting conditions.
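The general idea behind this kind of color tracking can be sketched as below. This is not the Processing sketch used in the project: it is a simplified Python illustration in which a frame is assumed to be a list of rows of `(r, g, b)` tuples, and the function name `track_color` and the threshold value are made up for the example.

```python
def track_color(frame, target, threshold=60.0):
    """Return the (x, y) centroid of pixels close to the target color.

    frame:     list of rows, each row a list of (r, g, b) tuples (assumed format)
    target:    (r, g, b) color of the tracked object
    threshold: maximum RGB distance that still counts as a match (assumed value)
    Returns None when no pixel matches.
    """
    tr, tg, tb = target
    limit_sq = threshold ** 2
    sum_x = sum_y = count = 0

    for y, row in enumerate(frame):
        for x, (r, g, b) in enumerate(row):
            # Squared Euclidean distance in RGB space (avoids a square root).
            dist_sq = (r - tr) ** 2 + (g - tg) ** 2 + (b - tb) ** 2
            if dist_sq <= limit_sq:
                sum_x += x
                sum_y += y
                count += 1

    if count == 0:
        return None
    return (sum_x / count, sum_y / count)


if __name__ == "__main__":
    black, red = (0, 0, 0), (250, 10, 10)
    frame = [[black, black, black],
             [black, black, red],
             [black, black, black]]
    print(track_color(frame, target=(255, 0, 0)))
```

Because the match is a plain RGB distance, a change in lighting shifts every pixel's color and can push the object outside the threshold, which fits the sensitivity to lighting conditions described above.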

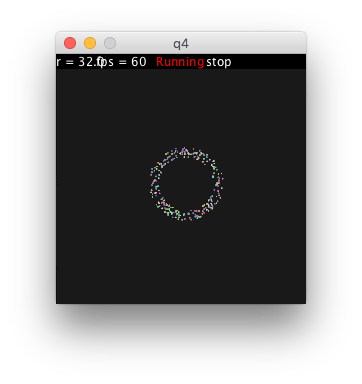

Ground graphics: As mentioned above, the field is a coordinate plane, and the dots change according to the location data.

The particles stop moving when two participants get close enough, as the fifth picture shows.

Sound feedback:

My original idea for sound feedback was to use an individual buzzer for each single-color object. A melody or sound would be played when the distance between any two objects became close enough or far enough. But while testing the syncing of the tracking data with the Arduino, I found that the buzzers did not work the way I wanted: a melody meant for one specific pin went through all three buzzers. I couldn't solve this, so I had no choice but to look for another solution. The simplest solution is just to play the audio from the Processing sketch through the computer speakers, which is not as intuitive and natural as the original plan.
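The distance check that decides when a sound should play could look roughly like this. It is a sketch under assumptions rather than the project's code: positions are taken to be pixel coordinates keyed by object color, and the `near` and `far` thresholds and the function name `distance_events` are hypothetical.

```python
import math
from itertools import combinations


def distance_events(positions, near=100.0, far=400.0):
    """Check every pair of tracked objects and report 'near' or 'far' events.

    positions: dict mapping an object name to its (x, y) position
    near, far: distance thresholds in pixels (assumed values)
    Returns a list of (name_a, name_b, event) tuples, sorted by name.
    """
    events = []
    for (name_a, pos_a), (name_b, pos_b) in combinations(sorted(positions.items()), 2):
        d = math.dist(pos_a, pos_b)
        if d <= near:
            events.append((name_a, name_b, "near"))
        elif d >= far:
            events.append((name_a, name_b, "far"))
        # Distances between the two thresholds trigger nothing.
    return events


if __name__ == "__main__":
    tracked = {"red": (0, 0), "green": (30, 40), "blue": (500, 0)}
    for event in distance_events(tracked):
        print(event)
```

Each returned event could then be mapped to an audio clip played from the computer speakers, or to one buzzer per object if the hardware route is revisited.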

Final Result

The setup is able to track three objects, measure the distances between them, and trigger the interactions mentioned above, although the tracking is not stable. So for the time being, two objects are the proper number for the interaction.