GMU:Designing Utopias: Theory and Practice/Selena Deger

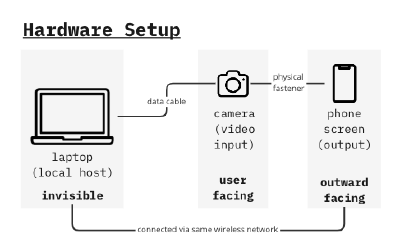

==Hardware Setup== | ==Hardware Setup== | ||

[[File: | [[File:emotiondet_14.png|400px]] | ||

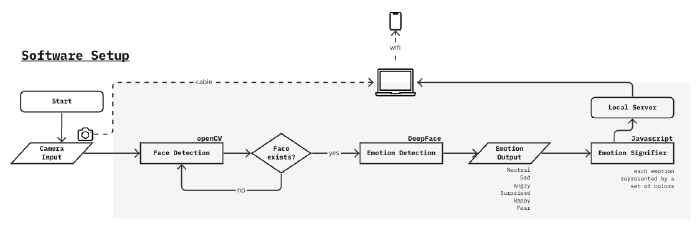

==Software Setup== | ==Software Setup== | ||

| Line 65: | Line 33: | ||

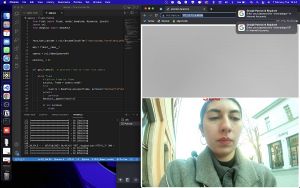

''See the detailed development process below'' | |||

==[[Hardware and Software Systems Processes]]== | |||

==Interaction== | |||

''Video Walk'' | |||

{{#ev:youtube|i5LFJzIUPKk}} | |||

As an object to be used in the public space, the evaluation of the interactivity is performed with a video walk. The walk starts at the uni campus and by using the most crowded streets aims to reach Theaterplatz and then go back to the campus again. The observations on this route follow as the most interactivity is caught when the wearer was facing the viewer and the distance between them was smaller(as if walking on the same sidewalk to opposite directions). In some instances are seen as some curious ones are also turning their heads to have another look after they pass. Moreover interest in the tool was more visible when the observational video recording is stopped and it became a standalone object. | |||

''Emotions on the viewer screen after the video walk'' | |||

{{#ev:youtube|WUd2enyf2lQ}} | |||

[[File: | [[File: emo_ss.jpg|300px]] | ||

[[File: emo_archive.png|200px]] | |||

During the walk, emotion output is saved with an automated screenshot script while the laptop in the bag was also remote controlled to ensure the stability of the system. The collected emotion visuals(static images) are blended together with a frame interpolator to create the transitions between them. | |||

Latest revision as of 21:52, 29 March 2023

InterFace: How You See Me

InterFace is an interactive tool that detects emotions from facial expressions and creates an additional layer of communication between the wearer and the viewer. When an emotion is detected on the wearer's side, it is translated into a set of colors shown to the viewer, who is in turn affected by colors that carry a relatively universal meaning. It is designed for use in public space, but rather than being the center of attention, it aims to exist in the periphery while still stimulating the people around it.

Abstract

Human evolution produced many traits that allow large groups of people to form societies, ideally living in harmony with one another. One of the most influential of these traits is our ability to empathize with the people around us, which lets us build and sustain social structures. In modern societies, however, we are drifting further apart and getting lost in the rush of everyday problems. Our perception of social interaction becomes confined to a small circle, even though we encounter many different faces in a single day. As those faces blur, the uplifting effect of being social and sharing decays even further. This project examines the effects of emotions expressed through the face in the contexts of empathy and modern social structures.

Empathy begins when people imagine themselves in another person's shoes and try to make sense of what they find there. This involves paying attention to the other person's body language, facial expressions, tone of voice, and words, as well as considering their past experiences and current circumstances. Several experts think that mirror neurons, or at least a similar mechanism, play a role in some forms of basic empathy. Mirror neurons that respond to mouth actions, together with the ability to imitate facial expressions, are likely the foundation for being emotionally in tune with others. While the embodiment of emotions does not cover every aspect of empathetic experience, it offers a straightforward explanation of how we may share emotions with others and how this skill could have evolved (Ferrari & Coudé, 2018).

Moreover, sharing emotions is a naturally evolved survival mechanism that helps us avoid unwanted situations with the help of others around us. Adams et al. (2006) report two studies on how accurately observers detect movement in angry and fearful faces moving towards or away from them. Observers were quicker to correctly identify angry faces moving towards them, suggesting that anger displays convey an intent to approach. The results were not the same for fearful faces, which may indicate that fear signals a "freeze" response rather than fleeing. Translating the emotions of one party to another therefore plays an essential role in sharing "data" that the body's receptors collect from the outside world. Besides being a means of non-verbal communication, expressions of emotion are also relatively universal, unlike gestures, which can change from culture to culture. According to Ekman (1970), basic emotions are pancultural: they are expressed with the same facial muscle responses and identified in similar ways across different cultures.

The embodiment of emotions through facial expressions is a means of communicating with the outside world. It differs from vocal communication, however, in that it is not self-reflective: people cannot see or feel the immediate effect of their own expressions. Instead, the expression travels to the other party, is evaluated there, and has its effect on them, and that is where the reflection forms. One person feels the emotion, but the other sees the facial expression. The viewer is thus both the bridge to the outside world and the reflection of the inside.

To explore these interactions in a bigger picture and to disrupt the woven structure of daily life, InterFace seeks to create a space that emphasizes the power of individual emotions by making them visible and vivid to the outside world.

Hardware Setup

Software Setup

See the detailed development process below

Hardware and Software Systems Processes

Interaction

Video Walk

As an object meant for public space, the device's interactivity was evaluated with a video walk. The walk started at the university campus, followed the most crowded streets to Theaterplatz, and then returned to the campus. Along this route, the most interaction occurred when the wearer was facing the viewer and the distance between them was small (as when walking in opposite directions on the same sidewalk). In some instances, curious passers-by turned their heads for another look after passing. Moreover, interest in the tool was more visible once the observational video recording was stopped and the device became a standalone object.

Emotions on the viewer screen after the video walk

During the walk, the emotion output was saved by an automated screenshot script, while the laptop in the bag was remote-controlled to keep the system stable. The collected emotion visuals (static images) were then blended together with a frame interpolator to create the transitions between them.
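The transition step can be illustrated with a simple linear cross-fade. This is only a minimal sketch: the frame interpolator actually used is not named here, so plain per-pixel interpolation stands in for it, and the names `Frame`, `blend_frames`, and `transition` are hypothetical. Frames are modeled as flat lists of RGB tuples to keep the example free of dependencies.

```python
from typing import List, Tuple

Color = Tuple[int, int, int]
Frame = List[Color]  # hypothetical frame model: a flat list of RGB pixels


def blend_frames(start: Frame, end: Frame, t: float) -> Frame:
    """Cross-fade two equally sized frames; t=0 gives start, t=1 gives end."""
    if len(start) != len(end):
        raise ValueError("frames must have the same number of pixels")
    return [
        tuple(round(a + (b - a) * t) for a, b in zip(p, q))
        for p, q in zip(start, end)
    ]


def transition(start: Frame, end: Frame, steps: int) -> List[Frame]:
    """Return `steps` in-between frames, excluding both endpoints."""
    return [blend_frames(start, end, i / (steps + 1)) for i in range(1, steps + 1)]
```

A dedicated frame interpolator typically estimates motion between frames, whereas a cross-fade only mixes colors; for abstract color fields the simpler approach may already give a usable transition.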

Discussions & Further Development

Limitations of Facial Emotion Recognition Through Deep Learning Algorithms

Although AI-based emotion detection has been studied extensively and applied in many ways, its problems deserve attention. One of the main limitations is accuracy. The DeepFace framework states that, according to experiments, humans recognize faces with an accuracy of 97.53%, and that its models have already reached and surpassed that mark. That figure concerns recognizing faces, though, not reading emotions. In my experience, the tool works for simple emotions, but its ability to assess complex emotions or micro-expressions is not close to the human capacity for understanding emotional expressions. Current emotion detection systems can still struggle to identify emotions accurately because of cultural and individual differences and context. The technology is also prone to algorithmic bias, leading to inaccuracies for certain groups of people. I ran into much the same issues myself: the emotion detection usually tagged me as sad rather than neutral. This kind of algorithm is a black box, which makes it hard to understand what goes wrong when it gives a very different result than expected. It is therefore crucial to approach these tools with a critical mindset and to use them as a supplement to human analysis rather than a replacement for it.

From another point of view, the fragility and inaccuracy of the system make it vulnerable to misinterpretation in an automated setting. It still needs an operator or interpreter to establish the source of truth. This highlights the importance of human involvement in decision-making, especially in complex systems such as emotion recognition. While automating these tools, or deploying them at mass scale instead of personalizing them, can improve efficiency, they should not completely replace human oversight and intervention.

Another source of bias in facial emotion recognition systems is that they evaluate faces in 2D. Human facial expressions have historically been studied using either static 2D images or 2D video sequences. Recent datasets include 3D facial expressions to better capture the fine structural changes inherent to spontaneous expressions; 2D-based analysis, by contrast, has difficulty handling large variations in pose and subtle facial movements (Ko, 2018).

In this project, OpenCV retrieves static images from the camera feed and determines whether they contain a human face. DeepFace then evaluates the emotion shown in these face captures. As a result, the system judges the emotion from a static sample of the face rather than from its context, which makes it less reliable than the human eye. Moreover, it could not recognize a face from other angles, so the camera has to be stable enough to see the face horizontally aligned, which can limit what the wearer is able to do while wearing the device.
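The two-stage flow described above can be sketched roughly as follows. It assumes the `opencv-python` and `deepface` packages are installed. The choice of a Haar cascade detector, its parameters, and the function names `classify_frame` and `dominant_emotion` are assumptions for illustration, not the project's actual code.

```python
from typing import Dict, Optional


def dominant_emotion(scores: Dict[str, float]) -> Optional[str]:
    """Pick the label with the highest score, or None when there are no scores."""
    if not scores:
        return None
    return max(scores, key=scores.get)


def classify_frame(frame) -> Optional[str]:
    """Detect a face with OpenCV, then ask DeepFace for its emotion scores.

    `frame` is a BGR image array as returned by cv2.VideoCapture.read().
    """
    # Imported lazily so dominant_emotion() works without these packages.
    import cv2
    from deepface import DeepFace

    # Assumption: a frontal-face Haar cascade; the project may use another detector.
    detector = cv2.CascadeClassifier(
        cv2.data.haarcascades + "haarcascade_frontalface_default.xml"
    )
    gray = cv2.cvtColor(frame, cv2.COLOR_BGR2GRAY)
    faces = detector.detectMultiScale(gray, scaleFactor=1.1, minNeighbors=5)
    if len(faces) == 0:
        return None  # no frontal face found, e.g. the head is turned away

    x, y, w, h = faces[0]
    face = frame[y:y + h, x:x + w]  # a single static crop, with no temporal context
    result = DeepFace.analyze(
        img_path=face, actions=["emotion"], enforce_detection=False
    )
    # Newer DeepFace versions return a list of results, older ones a single dict.
    first = result[0] if isinstance(result, list) else result
    return dominant_emotion(first["emotion"])
```

Because each call sees only one cropped frame, a sketch like this shares the limitation discussed above: a turned head yields no detection, and a momentary expression is classified without any surrounding context.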

Color Usage to Evoke Emotions

The reasoning behind the color selection for the emotion visualizer, which draws on psychophysical effects and evolutionary interpretations of colors, is a relatively controversial topic in the context of this work.

Many factors, such as culture, age, and gender, can influence the psychological effects of colors. White, for instance, can signify innocence and purity in some cultures but death and mourning in others. Similarly, people of different ages and sexes may associate different colors with different things. Human vision cannot be reduced to a single model, and color perception itself has limitations, such as the several types of color blindness. Even with these limitations, I think it is still important to look into the general psychological properties of colors and to use this knowledge to create meaning that speaks to a wider audience.

From this point of view, the choice of colors for this work could go in a more personal direction, depending on who is looking at it, when the piece operates at a more exclusive level of communication and is not shown to the public. Personalized color selection can help create a deeper emotional connection with the viewer, which can lead to a more impactful and memorable experience. However, it is important to consider the context and purpose of the work before making any color choices.
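One way to picture personalized color selection is a shared default palette with optional per-viewer overrides. The sketch below is hypothetical: the RGB values are placeholders rather than the colors used in InterFace, the labels are the seven emotion labels that DeepFace reports, and `palette_for` is an illustrative name.

```python
from typing import Dict, List, Optional, Tuple

Color = Tuple[int, int, int]
Palette = Dict[str, List[Color]]

# Placeholder colors for DeepFace's seven emotion labels.
# These RGB values are assumptions, not the palette used in InterFace.
DEFAULT_PALETTE: Palette = {
    "angry": [(200, 30, 30)],
    "disgust": [(90, 120, 40)],
    "fear": [(70, 40, 110)],
    "happy": [(250, 200, 40)],
    "sad": [(40, 80, 160)],
    "surprise": [(240, 130, 40)],
    "neutral": [(180, 180, 180)],
}


def palette_for(emotion: str, overrides: Optional[Palette] = None) -> List[Color]:
    """Return the viewer's own colors for an emotion, else the shared default."""
    if overrides and emotion in overrides:
        return overrides[emotion]
    # Unknown labels fall back to the neutral colors.
    return DEFAULT_PALETTE.get(emotion, DEFAULT_PALETTE["neutral"])
```

For example, a viewer who associates sadness with grey rather than blue could pass `{"sad": [(120, 120, 120)]}` as `overrides`, while every other emotion would keep the shared default.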

Overall, I think that color in art is a powerful way to make people feel things and to get emotions across, even when it carries a more personalized or symbolic meaning, and I am particularly fascinated by the idea of manipulating a viewer's mood and feelings through color. By understanding the psychological properties of colors and using them in a deliberate, thoughtful, and more personal manner, an artist can have a lasting impact on the viewer.

Limitations Regarding the Wearable Piece

Using the setup in the wild showed that the most important factors affecting the device's visibility are its positioning and the distance between the wearer and the viewer. As mentioned above, the device was most visible when the distance was less than two arm's lengths; some curious people turned their heads to look, but by then the device had already passed. In the next phase, the setup will be more controlled and assistive but still interactive: there will be no active motion, but more exclusive communication between the parties.

Also, given the goals of the piece, the lighting and size of the display were not ideal. The screen was too small and went unnoticed by most people who were further away. This problem cannot be fixed simply by making the screen bigger, because it would then stop being an extension of the person wearing it. Instead, the visual could be placed behind the wearer in a more immersive environment.

References

Adams, R. B., Ambady, N., Macrae, C. N., & Kleck, R. E. (2006). Emotional expressions forecast approach-avoidance behavior. *Motivation and Emotion*, *30*(2), 177–186. https://doi.org/10.1007/s11031-006-9020-2

De’Aira, Deepak, & Ayanna. (2021). Age bias in emotion detection: An analysis of facial emotion recognition performance on young, middle-aged, and older adults.

Ekman, P. (1970). Universal facial expressions of emotions. *California Mental Health Research Digest*, *8*(4), 151–158.

Ferrari, P. F., & Coudé, G. (2018). Mirror neurons, embodied emotions, and empathy. *Neuronal Correlates of Empathy*, 67–77. https://doi.org/10.1016/b978-0-12-805397-3.00006-1

Ko, B. (2018). A brief review of facial emotion recognition based on visual information. *Sensors*, *18*(2), 401. https://doi.org/10.3390/s18020401

DeepFace https://github.com/serengil/deepface

OpenCV https://opencv.org

early sensor experiments