
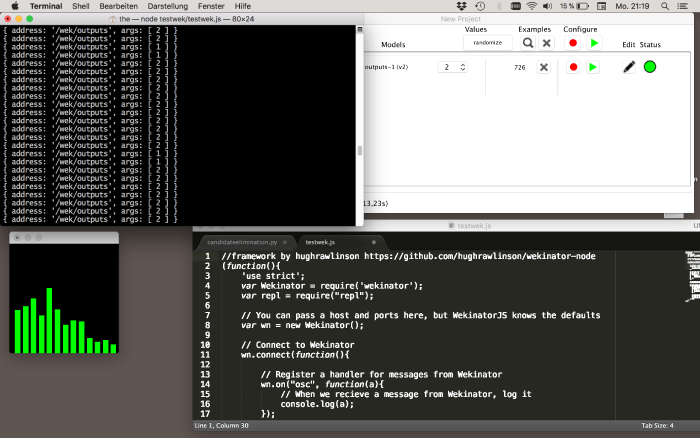


[[File:Voicediscrimination.png|link=MediaWiki|thumb|700px|The model can distinguish two different voices.|center]]

Latest revision as of 20:40, 4 December 2017

Artists Lab 17/18

Initial Post

Machine learning is ubiquitous. Machines and gadgets all around us continuously learn from the behaviour of their users and from the surrounding world. I am interested in this learning process, its algorithmic mechanics and its motivation. I plan to concentrate my work on machine learning

- as an interactive tool,

- in terms of the limits of its possible applications (empathy, self-awareness),

- in its ability to detect patterns where we do not see any,

- and in its use of "statistical stereotypes" for decision-making.

Progress

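I am currently in a research phase, which includes:

- theory: working through the [https://www.uni-weimar.de/en/media/chairs/computer-science-and-media/webis/teaching/lecturenotes/#machine-learning lecture] and [https://www.uni-weimar.de/en/media/chairs/computer-science-and-media/webis/teaching/ws-201718/machine-learning/ exercises] by Benno Stein on Machine Learning

- application: experimenting with [http://www.wekinator.org Wekinator] and this [https://github.com/hughrawlinson/wekinator-node helpful framework] for interfacing with it over the OSC protocol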

The screenshot shows the setup on my computer while experimenting with Wekinator. The Wekinator UI is in the upper right corner, some code that interfaces with it over the OSC protocol is in the lower right corner, the voice input interface from the [http://www.wekinator.org/examples/ Wekinator example set] is in the lower left corner, and the command-line output of the classified voice is in the upper left corner. The command line shows the output of a model trained to distinguish two different voices: it prints '1' for one voice and '2' for the other.
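To make the OSC step more concrete, here is a minimal sketch of how the classifier output could be read without any framework. It is not the code from the screenshot, which uses the wekinator-node framework; it is a plain Python illustration using only the standard library. It assumes that Wekinator sends its outputs as float arguments to the address /wek/outputs on UDP port 12000 (to my knowledge these are the defaults, but they should be checked in the Wekinator UI). The labels "voice A" and "voice B" and all function names are hypothetical.

```python
from __future__ import annotations

import socket
import struct

# Assumed Wekinator defaults; verify them in the Wekinator UI.
OUTPUT_ADDRESS = "/wek/outputs"
OUTPUT_PORT = 12000

# Hypothetical names for the two classes the model was trained on.
VOICE_LABELS = {1: "voice A", 2: "voice B"}


def _pad(raw: bytes) -> bytes:
    """Null-terminate and pad to a multiple of 4 bytes, as OSC strings require."""
    return raw + b"\x00" * (4 - len(raw) % 4)


def encode_osc(address: str, values: list[float]) -> bytes:
    """Build an OSC message whose arguments are all 32-bit floats."""
    type_tags = "," + "f" * len(values)
    payload = b"".join(struct.pack(">f", v) for v in values)
    return _pad(address.encode("ascii")) + _pad(type_tags.encode("ascii")) + payload


def _read_string(data: bytes, offset: int) -> tuple[str, int]:
    """Read one padded OSC string and return it with the offset that follows it."""
    end = data.index(b"\x00", offset)
    text = data[offset:end].decode("ascii")
    # Skip the terminator and the padding up to the next 4-byte boundary.
    return text, offset + (end - offset) // 4 * 4 + 4


def decode_osc(data: bytes) -> tuple[str, list[float]]:
    """Decode an OSC message that carries only float arguments."""
    address, offset = _read_string(data, 0)
    type_tags, offset = _read_string(data, offset)
    values = []
    for tag in type_tags[1:]:  # the first character is the leading ','
        if tag != "f":
            raise ValueError(f"unsupported OSC type tag: {tag!r}")
        values.append(struct.unpack_from(">f", data, offset)[0])
        offset += 4
    return address, values


def label_for(values: list[float]) -> str:
    """Map the first classifier output (1.0 or 2.0) to a readable label."""
    return VOICE_LABELS.get(round(values[0]), "unknown")


def listen(port: int = OUTPUT_PORT) -> None:
    """Print a label for every output message received; runs until interrupted."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.bind(("127.0.0.1", port))
        while True:
            data, _ = sock.recvfrom(4096)
            address, values = decode_osc(data)
            if address == OUTPUT_ADDRESS and values:
                print(label_for(values))
```

Calling listen() while Wekinator is running would print one label per received output message. encode_osc is included only so that the decoder can be tried out without a running Wekinator instance.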