| (15 intermediate revisions by the same user not shown) | |||

| Line 1: | Line 1: | ||

''November 2, 2021'' | |||

== '''INTRODUCTION''' == | == '''INTRODUCTION''' == | ||

| Line 26: | Line 27: | ||

Originally, I wanted to experiment with this new simplicity and develop a narrative around a person who lives "in the screen". I wanted to develop a dialog system that offered choices for questions and answers I defined, which could then be used to converse with the "person in the screen." Depending on the selected question or answer, the "story" would take a different course and hold surprises. Another idea was to place the work in public space. Accordingly, it would not be about speech recognition or generative questions and answers generated by an AI. Instead, I wanted to have a lot of conversations with people here in Weimar, interview them about certain topics, have good talks and then incorporate all of these realistic conversations into the dialog system and record the respective people and their facial expressions with performance capture. In this way, I thought, with enough material, the conversations would be far more realistic and, above all, more personal than those created with well-known chatbots. | Originally, I wanted to experiment with this new simplicity and develop a narrative around a person who lives "in the screen". I wanted to develop a dialog system that offered choices for questions and answers I defined, which could then be used to converse with the "person in the screen." Depending on the selected question or answer, the "story" would take a different course and hold surprises. Another idea was to place the work in public space. Accordingly, it would not be about speech recognition or generative questions and answers generated by an AI. Instead, I wanted to have a lot of conversations with people here in Weimar, interview them about certain topics, have good talks and then incorporate all of these realistic conversations into the dialog system and record the respective people and their facial expressions with performance capture. 
In this way, I thought, with enough material, the conversations would be far more realistic and, above all, more personal than those created with well-known chatbots. | ||

But then I looked at some examples on the Internet of MetaHumans, which disappointed me more and more the more I looked into them: | '''But then''' I looked at some examples on the Internet of MetaHumans, which disappointed me more and more the more I looked into them: | ||

Even though the performance capture results were impressive, unfortunately it was precisely the high realism of the 3D models that overemphasized, to my mind, every little flaw in the facial expressions, speed and movement of the animated heads. | Even though the performance capture results were impressive, unfortunately it was precisely the high realism of the 3D models that overemphasized, to my mind, every little flaw in the facial expressions, speed and movement of the animated heads. | ||

| Line 32: | Line 33: | ||

For me it's mainly about the content, the idea of talking to a human being who is convinced that his or her world is in the screen. I don't think it's necessary to have photorealistic faces as interlocutors for that, if the narrative is coherent. While I don't want to rule out the use of MetaHumans at this point, I will definitely focus on a style that tries less to visually mimic reality. Instead, the goal is to create a different reality with its own believable rules. | For me it's mainly about the content, the idea of talking to a human being who is convinced that his or her world is in the screen. I don't think it's necessary to have photorealistic faces as interlocutors for that, if the narrative is coherent. While I don't want to rule out the use of MetaHumans at this point, I will definitely focus on a style that tries less to visually mimic reality. Instead, the goal is to create a different reality with its own believable rules. | ||

''November 25, 2021'' | |||

== '''A New Idea In Between''' == | |||

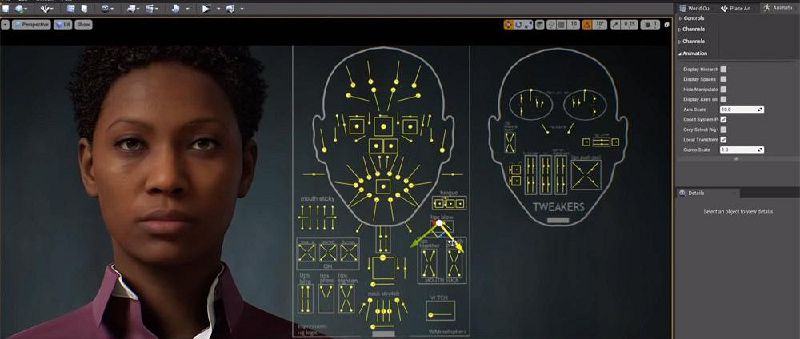

MetaHumans can also be animated manually by hand. In doing so, facial parts behave realistically to each other and one can go into enormous detail. However, to create realistic animations this way, much more time is needed to also understand human facial expressions and their connection to feelings you want to express. In a self-experiment that could be a small artistic work during my further research, I would like to experiment with this manual animation and document the process. In addition to "finished" emotions, one would also see the process in time-lapse and real-time on other screens. In doing so, one would see the rig of the face as well as my mouse movements. Accompanying the visual work, a concise written reflection on the complexity of human emotions , personalities and artificial intelligence is conceivable. | |||

[[File:MetahumanRig.jpg|800px|center]] | |||

Latest revision as of 10:23, 29 November 2021

November 2, 2021

INTRODUCTION

At this point I would like to document the areas I am currently researching, the tools I would like to learn and, finally, what artistic work all of this will amount to this semester and what development process lies behind it. Basically, it's important to me to have fun and to experience a sense of achievement on a regular basis. My overall goal is to acquire at least a good workflow in Unreal Engine 4 and, ideally, also the basics of 3D modeling in Blender and/or procedural 3D modeling in Houdini. The background is that, together with good friends, I am currently founding an unusual studio in Weimar for striking game design and interaction. The studio also asks questions about the common good and critically reflects on (digital) society. As a first step, we were awarded the fellowship of the Gründerwerkstatt neudeli in Weimar in the summer of 2021.

It is essential for me to understand this software to a certain degree so that I can work even better with my team and create prototypes quickly. Nevertheless, I don't want to simply learn tools. I also want to develop artistic works this semester that reflect my current thematic interests. It's important to me that my work is fun, encourages interaction and, ideally, draws attention to issues that I consider very important but that receive too little attention.

The last thing I want this semester is to arrive at a fixed result based on a fixed concept. I will work very dynamically, in small rather than large steps, constantly modifying and revising ideas and prototypes, and sometimes revisiting ideas from the past with a new perspective. The focus will be on quickly creating many prototypes within a space I have already roughly marked out. Over the course of this documentation, that space will take on an increasingly precise shape for outsiders.

Starting Point

Like many people on the internet, I was very impressed by the Feb. 10, 2021 trailer for the cloud application "MetaHuman Creator", which can already be tested in an Early Access version. As seen in the video above, it can create highly realistic, fully rigged 3D models of humans. With various performance capture solutions, facial expressions can be transferred live onto these 3D faces (result at around minute 6):

Originally, I wanted to experiment with this new simplicity and develop a narrative around a person who lives "in the screen". I wanted to build a dialog system offering a choice of questions and answers that I had defined. One could then pick from these to converse with the "person in the screen". Depending on the selected question or answer, the "story" would take a different course and hold surprises. Another idea was to place the work in public space. The work would not be about speech recognition or about questions and answers generated by an AI. Instead, I wanted to have many conversations with people here in Weimar, interview them about certain topics and have good talks. I would then incorporate all of these real-life conversations into the dialog system and record the respective people and their facial expressions with performance capture. With enough material, I thought, the conversations would be far more realistic and, above all, more personal than those produced by well-known chatbots.

But then I looked at some examples of MetaHumans on the internet, and the more closely I examined them, the more they disappointed me:

Even though the performance capture results were impressive, it was, to my mind, precisely the high realism of the 3D models that unfortunately accentuated every small flaw in the facial expressions, speed and movement of the animated heads. I am sure this software will improve quickly, as it is still in Early Access. For this semester, however, getting the movements as realistic as those shown in the MetaHuman Creator trailer seems to me to require far too much fine-tuning and time.

For me, it's mainly about the content: the idea of talking to a human being who is convinced that his or her world is inside the screen. If the narrative is coherent, I don't think photorealistic faces are necessary as interlocutors. While I don't want to rule out using MetaHumans at this point, I will definitely focus on a style that tries less to mimic reality visually. Instead, the goal is to create a different reality with its own believable rules.

November 25, 2021

A New Idea In Between

MetaHumans can also be animated manually by hand. In doing so, facial parts behave realistically to each other and one can go into enormous detail. However, to create realistic animations this way, much more time is needed to also understand human facial expressions and their connection to feelings you want to express. In a self-experiment that could be a small artistic work during my further research, I would like to experiment with this manual animation and document the process. In addition to "finished" emotions, one would also see the process in time-lapse and real-time on other screens. In doing so, one would see the rig of the face as well as my mouse movements. Accompanying the visual work, a concise written reflection on the complexity of human emotions , personalities and artificial intelligence is conceivable.