Revision as of 14:09, 15 January 2022

Speculative Atmospheres ll

Project research

Cosmo Niklas Schüppel

Playback - Where Past and Presence Dance

'Playback' is an outdoor sound installation that opens up the topic of time as a non-linear concept: all time happening in the same moment, in the present. This understanding of time is widespread, for example, in the Buddhist world view. The installation does not intend to argue that this approach to the nature of time is the right one. It only tries to open the participants' minds to other, fundamentally different concepts of understanding reality.

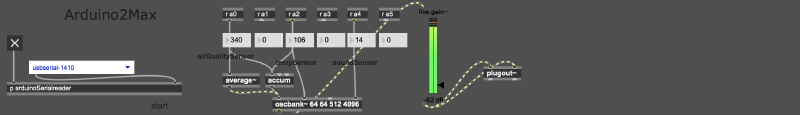

In ‘Playback’ a designated place is chosen where data on natural factors (air quality, temperature, sound level in decibels) is collected and its soundscape (the atmospheric sounds) is recorded. The data is made audible as a piece of atmospheric drone music, in which the natural factors determine aspects of the generated sound. This monotonic drone is mixed with the recorded soundscape and played back at the same place at a later time. At this later playback event, the data is collected again and transcribed into music live. The participant can then witness the two data drones (one from the previously collected data, one from the live-transcribed data) and the two soundscapes (the pre-recorded one and the one the place naturally emits) morphing into each other.
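One way to picture the morphing of a past layer into a present layer is an equal-power crossfade. The following is only a minimal sketch under that assumption; the project text does not say how the layers are actually blended, and the function name `morphSample` and the `position` parameter are hypothetical.

```cpp
#include <cmath>

// Hypothetical helper, not taken from the project: blend one sample of the
// pre-recorded ("past") layer with one sample of the live ("present") layer.
// position = 0.0 plays only the past, position = 1.0 plays only the present.
// An equal-power curve (cos/sin) keeps the perceived loudness roughly
// constant while moving between the two.
double morphSample(double past, double present, double position) {
    // Clamp the position into [0, 1] so out-of-range input cannot invert gains.
    if (position < 0.0) position = 0.0;
    if (position > 1.0) position = 1.0;

    const double halfPi = 1.57079632679489661923;
    const double gainPast = std::cos(position * halfPi);
    const double gainPresent = std::sin(position * halfPi);
    return gainPast * past + gainPresent * present;
}
```

Sweeping `position` slowly from 0 to 1 and back would let the two layers drift into each other without an audible switch point.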

Conceptually, this project addresses the influence of the past on the perceived presence of the present moment, and what happens when past and present are not clearly distinguishable. Through this, ‘Playback’ questions the accustomed view and perception of time as a linear and absolute concept. In this conceptual dissolving of the perception of time (which can happen naturally when the mind drifts into a state of trance, for example by perceiving monotonic sounds), the installation leads the participant into a meditative state where past and present morph into each other. The world of thought is brought into the foreground of perception and the mind is confronted with its own more or less hectic patterns. The participant's thoughts and emotional patterns, created in the past, are confronted by being held in the present moment. In the same way, the space is confronted with its own past. Through this process the participant becomes aware of the interplay of esoteric and exoteric sounds, their different perceptions of time, and the relationship between their physical body and the surrounding space.

Technically, this is achieved by generating sounds in Max/MSP from the data of Arduino sensors.

The atmospheric soundscape is recorded with a ‘normal’ microphone.

The playback event would need quite a loud stereo sound system, so that the mixture of past and present soundscapes can be achieved in a convincing manner.

Sound

I am still working on the texture of the generated drone sound. Here is an updated list of its most recent states:

15.01.2022

audioTest3: https://soundcloud.com/nik-cosmo/playbackaudiotest3-15012022/s-Q0p4guqoSJv

12.01.2022

audioTest2: https://soundcloud.com/nik-cosmo/playbackaudiotest-2201122/s-QIKZ0m2DYDy (there is some unintended clipping)

audioTest1: https://soundcloud.com/nik-cosmo/playbackaudiotest-220112/s-5UFmo34Evb9

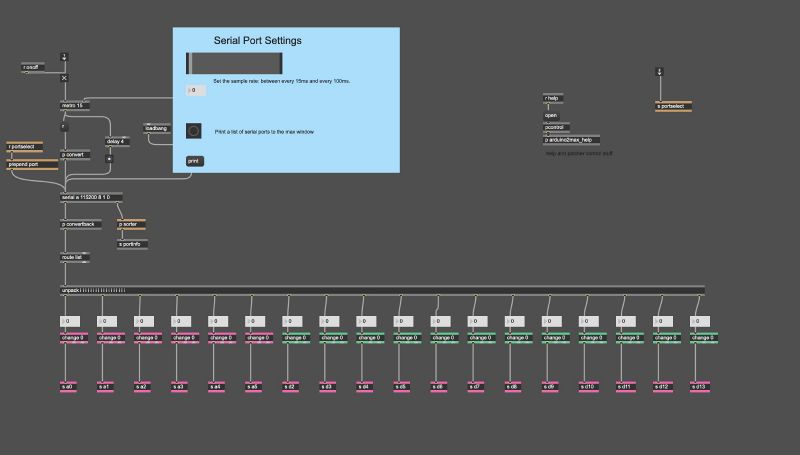

Arduino code:

to read out the sensor data and make it available to Max

    // a place to hold pin values
    int x = 0;

    void setup()
    {
      Serial.begin(115200);
      delay(600);
      digitalWrite(13, LOW);
      pinMode(13, INPUT);
    }

    // Check serial buffer for characters.
    // If an 'r' is received then read the pins.
    void loop()
    {
      if (Serial.available() > 0) {
        if (Serial.read() == 'r') {
          // Read and send analog pins 0-5
          for (int pin = 0; pin <= 5; pin++) {
            x = analogRead(pin);
            sendValue(x);
          }
          // Read and send digital pins 2-13
          for (int pin = 2; pin <= 13; pin++) {
            x = digitalRead(pin);
            sendValue(x);
          }
          // Send a carriage return to mark the end of the pin data.
          Serial.println();
          // Add a delay to prevent crashing/overloading of the serial port.
          delay(5);
        }
      }
    }

    // Function to send the pin value followed by a space (ASCII 32).
    void sendValue(int x) {
      Serial.print(x);
      Serial.write(32);
    }
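Each time the sketch above receives an 'r', it answers with one line of 18 space-separated integers: six analog readings (pins 0-5) followed by twelve digital readings (pins 2-13), ended by a line break. In the project this line is read by Max. Purely as an illustration of the wire format, a receiver in plain C++ could split it as sketched below; the names `PinFrame` and `parsePinFrame` are hypothetical and not part of the project.

```cpp
#include <sstream>
#include <string>
#include <vector>

// One reply line from the sketch: analog pins 0-5, then digital pins 2-13.
struct PinFrame {
    std::vector<int> analog;   // 6 readings from analogRead()
    std::vector<int> digital;  // 12 readings from digitalRead(), 0 or 1
};

// Hypothetical helper: parse one reply line into a PinFrame.
// Returns false if the line contains non-numeric text or does not hold
// exactly 18 integers. Trailing spaces and "\r\n" are ignored.
bool parsePinFrame(const std::string& line, PinFrame& out) {
    std::istringstream in(line);
    std::vector<int> values;
    int v = 0;
    while (in >> v) {
        values.push_back(v);
    }
    // The loop must have stopped at end of input, not at a bad token.
    if (!in.eof()) {
        return false;
    }
    if (values.size() != 18) {
        return false;
    }
    out.analog.assign(values.begin(), values.begin() + 6);
    out.digital.assign(values.begin() + 6, values.end());
    return true;
}
```

Rejecting lines with the wrong number of values is a cheap guard against a frame that was cut off while the serial port was being opened.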

Max/MSP (Max for Live) patch:

The sound created in Max is a sine oscillator controlled by the sensor data. This simple sine wave is then modified in Ableton Live into what can be heard in the video example. For that I used different filters, modifications of the frequency spectrum, spatial effects and other aesthetic effects. In addition, the data oscillator is tripled (in both the 'past' and the 'present', so in the final listening experience you hear six at the same time). One oscillator stays at the original pitch, lying flat and fat in the mid-range frequencies, depending on the data it receives from the Arduino. The other two are focused on the highs and lows, to give me more control over the modification of the sound and to give both more clarity.
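The idea of a sensor-controlled sine oscillator that exists in three copies can be sketched outside Max as follows. This is not the patch itself: the frequency range (110 to 440 Hz), the linear mapping, the 0 to 1023 input range (a 10-bit analog reading) and the octave spacing of the low and high copies are all assumptions made for illustration, and the function names are hypothetical.

```cpp
#include <array>
#include <cmath>

// Assumed pitch range of the middle oscillator; the real patch may differ.
const double kMinHz = 110.0;
const double kMaxHz = 440.0;
const double kPi = 3.14159265358979323846;

// Map an analog reading (assumed 0..1023) linearly onto [kMinHz, kMaxHz].
double readingToHz(int reading) {
    if (reading < 0) reading = 0;
    if (reading > 1023) reading = 1023;
    return kMinHz + (kMaxHz - kMinHz) * reading / 1023.0;
}

// Three copies of the data oscillator: low, original pitch, high.
// Placing them one octave below and above is an assumption.
std::array<double, 3> tripledHz(int reading) {
    const double mid = readingToHz(reading);
    return {mid / 2.0, mid, mid * 2.0};
}

// One output sample at time t (in seconds): the mean of the three sines,
// so the result always stays within [-1, 1].
double droneSample(int reading, double t) {
    double sum = 0.0;
    for (double hz : tripledHz(reading)) {
        sum += std::sin(2.0 * kPi * hz * t);
    }
    return sum / 3.0;
}
```

Running this once with the stored readings and once with the live readings would give the six simultaneous oscillators mentioned above, before any filtering or effects.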