
== '''''PLAYBACK - A Place Where Past And Presence Dance''''' ==

'''Speculative Atmospheres II'''

'''Project research'''

Cosmo Schüppel

''‘Playback’ is a sound performance/experiment. It investigates how the atmosphere of a place changes when the place is confronted with its own past.''

''Influenced by Buddhist philosophy and different conceptions of time, ‘Playback’ aims to open the listener’s mind to their own perception of sound and time, and to the relationship between the two.''

''Furthermore, it is a journey that leads the mind into an awareness of its surroundings.''

'''Recording of the final exhibition at ''ACC-Galerie Weimar''.'''

'''The performance took place as part of the ''Winterwerkschau 2022''.'''

https://soundcloud.com/nik-cosmo/playbackexhrec/s-9tXqVBjcoPp

[[File:ppPoster.png|400px]]

----

''In ‘Playback’, a designated place is chosen where data on natural factors (air quality, temperature, sound level in decibels) is collected and its soundscape (the atmospheric sounds) is recorded. This data is made audible as a piece of atmospheric drone music, in which the natural factors determine the parameters of the generated sound. The monotonous drone is mixed with the recorded soundscape and played back at the same place at a later time. During this later playback event, the data is collected again and transcribed into music live. The participant can then witness the two data drones (one from the previously collected data and one transcribed live from the current conditions) and the two soundscapes (the pre-recorded one and the one the place naturally emits) as they morph into each other.''

'''Conceptually, this project addresses the influence of the past on how the present moment is perceived, and what happens when past and present are no longer clearly distinguishable. In doing so, ‘Playback’ questions the habitual view of time as a linear and absolute concept. Through this conceptual dissolving of the perception of time, the installation leads the participant into a meditative state in which past and present morph into each other. This can happen naturally when the mind drifts into a trance-like state, for example while listening to monotonous sounds. The world of thought moves into the foreground of perception, and the mind is confronted with its own more or less hectic patterns. The participant’s thoughts and emotional patterns, formed in the past, are confronted by being held in the present moment, just as the space is confronted with its own past. Through this process, the participant becomes aware of the interplay of esoteric and exoteric sounds and their different perceptions of time, as well as of the relationship between their physical body and the surrounding space.'''

''Technically, this is achieved by generating sound from the data of Arduino sensors in Max/MSP.''

''The atmospheric soundscape is recorded with a ‘normal’ microphone.''

''The playback event requires a loud stereo PA so that the past and present soundscapes can be blended convincingly.''
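The exact mapping from sensor values to sound parameters is not documented here, since the Max patch is only shown as a screenshot. As an illustration only, the following C++ sketch shows one common approach: a linear rescaling with clamping, similar in spirit to Max's [scale] object, that turns a 10-bit Arduino reading (0-1023) into an oscillator frequency. The function names and the 80-400 Hz target range are hypothetical assumptions, not values taken from the project.

```cpp
#include <algorithm>
#include <cassert>

// Linearly maps `value` from [inLo, inHi] onto [outLo, outHi].
// The input is clamped first, so out-of-range sensor readings
// cannot push the sound parameter outside its intended range.
double scaleValue(double value, double inLo, double inHi,
                  double outLo, double outHi) {
    assert(inHi != inLo && "input range must not be empty");
    const double lo = std::min(inLo, inHi);
    const double hi = std::max(inLo, inHi);
    if (value < lo) value = lo;
    if (value > hi) value = hi;
    return outLo + (value - inLo) * (outHi - outLo) / (inHi - inLo);
}

// Hypothetical mapping (assumed range, not taken from the patch):
// a 10-bit Arduino reading (0-1023) sets a base frequency of 80-400 Hz.
double sensorToFrequency(int reading) {
    return scaleValue(static_cast<double>(reading), 0.0, 1023.0, 80.0, 400.0);
}
```

For example, `sensorToFrequency(0)` returns 80.0 and `sensorToFrequency(1023)` returns 400.0; readings outside 0-1023 are clamped to the ends of the range. A linear mapping is the simplest choice; a perceptually even sweep would scale exponentially instead, since pitch is perceived roughly logarithmically in frequency.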

----

'''''The Pre-Recording'''''

[[File:P1050665.JPG|300px]]

[[File:P1050662.JPG|300px]]

'''''The Final Performance'''''

[[File:P1050672.JPG|300px]]

[[File:P1050675.JPG|300px]]

'''''Evaluation of the final performance'''''

Presenting my work was a good opportunity to explore what works and what does not, conceptually as well as technically. And I took it as such: an experiment. To try, to fail, and to try again and fail better next time.

Overall, I was satisfied with the result and with the responses I received. The feeling of “losing sense of what is really there and what is only in the played-back soundscape” and of “sinking into the sounds and becoming aware of what was happening around” were two commonly shared experiences, and they were also the reactions I had intended.

The idea of the ‘melting of past and present’ was not intuitively accessible to most visitors. I was aware of this beforehand, but it became very clear when talking to them. These ideas were hard to communicate because of their complexity, and seeing the result helped me understand how to proceed in the future: complex ideas like these have to be communicated more clearly and bluntly. They have to be spelled out more, or left out of the work.

Technically, the experience was almost as I had imagined it. The stereo PA required to produce the effect the work relies on was only available to me for a short time, so until the final presentation I could not find out whether my idea worked at all.

I was glad and relieved that it worked almost perfectly. Still, there were some things to work on.

In the future, I could make more use of the stereo field, both during recording and during the playback event. This could mean placing the microphones further apart while recording and setting up the speakers further away from the audience, giving the sound more room to dissolve into the surrounding soundscape and making the two even harder to distinguish.

Another possibility would be to work with more speakers, either with an immersive system or by deliberately placing speakers at the spots where recurring sounds come from. For example, if there is a bird’s nest, a speaker could be placed next to it, making it sound as if the birds were there even when they are not.

It would also be interesting to experiment with hiding the speakers, to see how the experience changes when they are not perceived as consciously.

Overall, I am happy with the exhibition of the work. There are many things I would do differently next time, but setting it up helped me a lot: it let me see and understand much of what I now know.

It also helped me practise the organisational side of the work, which was as complicated as I had expected, even though the performance itself was fairly simple.

----

'''''Mood Trailer'''''

''Concept and audio as of mid-January''

{{#ev:vimeo|662405925}}

'''''Sound'''''

''Updated list of its most recent versions (newest first):''

'''19.01.2022'''

Final Audio: https://soundcloud.com/nik-cosmo/playbackaudiotest4-190122/s-B2LKQr2AxV0

'''15.01.2022'''

audioTest3: https://soundcloud.com/nik-cosmo/playbackaudiotest3-15012022/s-Q0p4guqoSJv

'''12.01.2022'''

audioTest2: https://soundcloud.com/nik-cosmo/playbackaudiotest-2201122/s-QIKZ0m2DYDy

(...there is some unintentional clipping...)

audioTest1: https://soundcloud.com/nik-cosmo/playbackaudiotest-220112/s-5UFmo34Evb9

----

'''''Arduino code:'''''

''to read out the sensor data and make it available to Max''

<pre>
// Reads analog pins 0-5 and digital pins 2-13 whenever an 'r' arrives
// on the serial port, and replies with one line of space-separated values.

void sendValue(int x);  // prototype (the Arduino IDE would generate this automatically)

void setup()
{
  Serial.begin(115200);
  delay(600);

  // make sure pin 13 is a plain input (internal pull-up off)
  digitalWrite(13, LOW);
  pinMode(13, INPUT);
}

void loop()
{
  // Check the serial buffer for characters
  if (Serial.available() > 0) {
    // If an 'r' is received, read the pins
    if (Serial.read() == 'r') {
      // Read and send analog pins 0-5
      for (int pin = 0; pin <= 5; pin++) {
        sendValue(analogRead(pin));
      }
      // Read and send digital pins 2-13
      for (int pin = 2; pin <= 13; pin++) {
        sendValue(digitalRead(pin));
      }
      // Send a carriage return and line feed to mark the end of the pin data
      Serial.println();
      // Add a short delay to prevent crashing/overloading of the serial port
      delay(5);
    }
  }
}

// Send the pin value followed by a space (ASCII 32)
void sendValue(int x)
{
  Serial.print(x);
  Serial.write(32);
}
</pre>
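The sketch above implements a simple request/response protocol: Max sends the character `r`, and the Arduino answers with one line of 18 space-separated integers (six analog readings from pins 0-5, then twelve digital readings from pins 2-13), terminated by a carriage return and line feed. In Max this line is presumably received with the [serial] object and split into numbers inside the patch; since that part is only visible in the screenshot, the following C++ sketch shows the same parsing step from the point of view of a host program. `PinFrame` and `parsePinFrame` are hypothetical names.

```cpp
#include <array>
#include <sstream>
#include <string>

// One reply from the Arduino sketch: six analog readings (pins 0-5, 0-1023)
// followed by twelve digital readings (pins 2-13, 0 or 1).
struct PinFrame {
    std::array<int, 6> analog{};
    std::array<int, 12> digital{};
};

// Parses one reply line such as "512 300 ... 1 0 \r\n".
// Returns true and fills `out` only if the line holds exactly 18 integers.
bool parsePinFrame(const std::string& line, PinFrame& out) {
    std::istringstream in(line);  // operator>> skips spaces and "\r\n"
    PinFrame frame;
    for (int& v : frame.analog) {
        if (!(in >> v)) return false;
    }
    for (int& v : frame.digital) {
        if (!(in >> v)) return false;
    }
    int extra = 0;
    if (in >> extra) return false;  // more than 18 values: reject
    out = frame;
    return true;
}
```

For scale: the longest possible reply is 6 × 5 + 12 × 2 + 2 = 56 bytes. At 115200 baud with 10 bits per byte on the wire (8N1, the Arduino default), that takes roughly 4.9 ms to send, which is in the same range as the 5 ms delay in the sketch.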

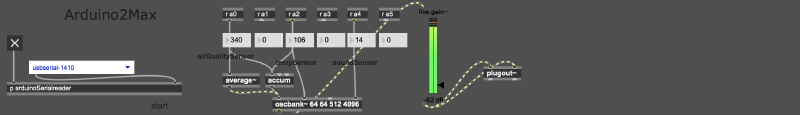

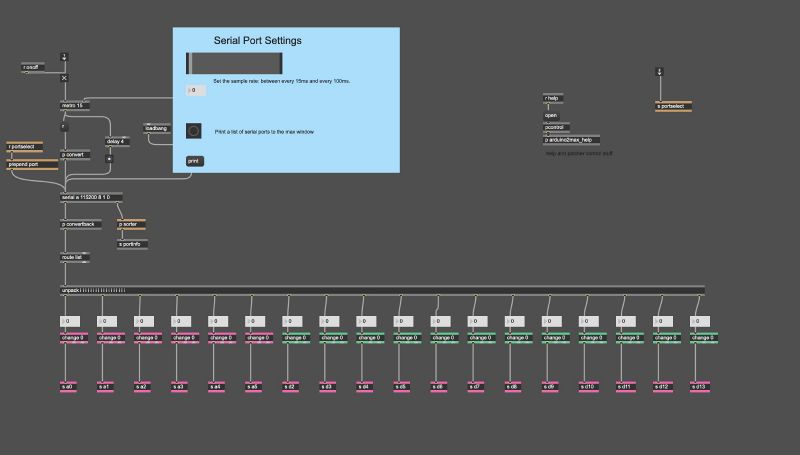

'''''Max/MSP (Max for Live) patch:'''''

[[File:Screenshot 2022-01-12 at 12.39.31.png|800px]]

[[File:Screenshot cosmo 01.jpg|800px]]

The sound created in Max is a sine oscillator controlled by the sensor data. This simple sine wave is then shaped in Ableton Live into what can be heard in the video example, using various filters, modifications of the frequency spectrum, spatial effects and other aesthetic effects. In addition, the data oscillator is tripled (in both the ‘past’ and the ‘present’ layer, so in the final listening experience six oscillators could be heard at the same time). One oscillator stays at the original, data-driven pitch, lying flat and fat in the mid-range frequencies. The other two are focused on the highs and lows, which gives me more control over the modification of the sound and gives both of them more clarity.
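To make the tripling concrete, here is a minimal C++ sketch that renders three summed sine oscillators from one sensor-driven base frequency. The text only says that the two extra oscillators are focused on the highs and lows; the octave ratios (0.5 and 2.0), the 1/3 gain and the name `renderTriple` are assumptions for illustration, and none of the Ableton Live processing is reproduced.

```cpp
#include <array>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical frequency ratios for the tripled data oscillator:
// the sensor-driven pitch itself, one octave below and one octave above.
const std::array<double, 3> kRatios = {1.0, 0.5, 2.0};

// Renders `numSamples` samples of three summed sine oscillators derived
// from one base frequency. Each is scaled by 1/3 so the sum stays in [-1, 1].
std::vector<float> renderTriple(double baseHz, double sampleRate, int numSamples) {
    assert(sampleRate > 0.0 && numSamples >= 0);
    const double twoPi = 2.0 * std::acos(-1.0);
    const double gain = 1.0 / static_cast<double>(kRatios.size());
    std::vector<float> out(static_cast<std::size_t>(numSamples), 0.0f);
    for (double ratio : kRatios) {
        const double phaseInc = twoPi * baseHz * ratio / sampleRate;
        for (int n = 0; n < numSamples; ++n) {
            out[static_cast<std::size_t>(n)] +=
                static_cast<float>(gain * std::sin(phaseInc * n));
        }
    }
    return out;
}
```

For example, `renderTriple(110.0, 48000.0, 48000)` would produce one second of audio at 48 kHz with components at 55, 110 and 220 Hz. Scaling each oscillator by 1/3 is one simple way to keep the summed signal from clipping digitally.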

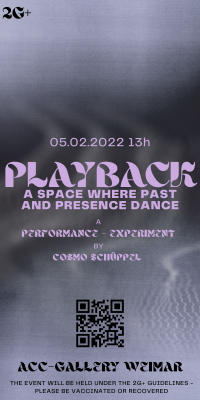

'''''Poster and Flyers'''''

[[File:ppPoster.png|300px]]

[[File:ppFlyer1.png|200px]]

[[File:ppFlyer2.png|200px]]

Latest revision as of 23:29, 8 February 2022
