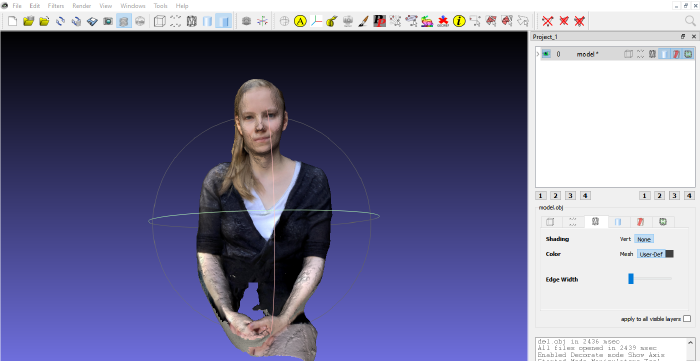

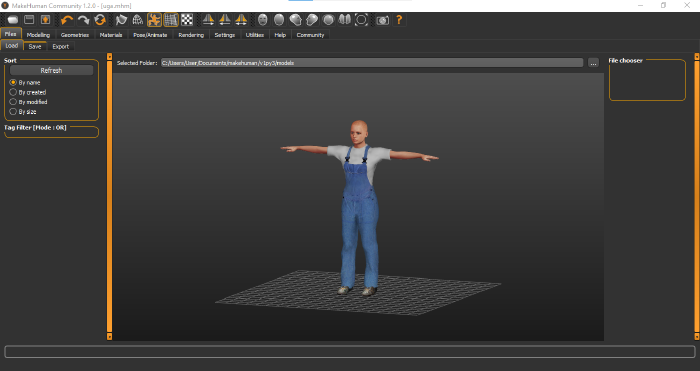

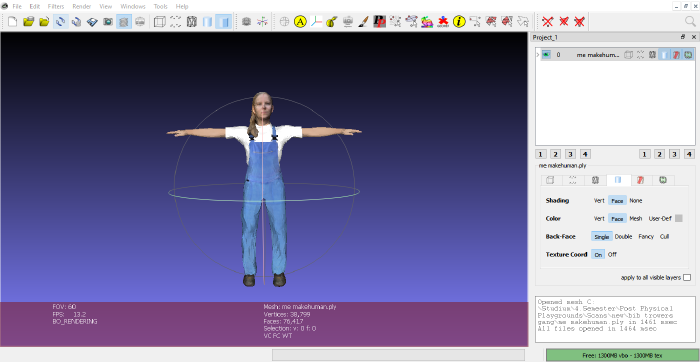

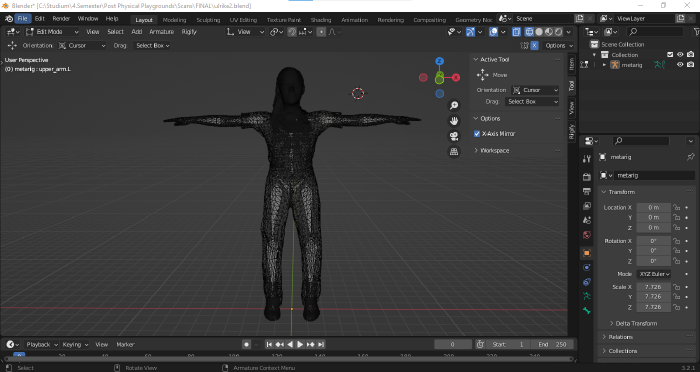

Revision as of 16:20, 30 October 2022

Is that me?

Concept:

By participating in the course “Post Physical Playgrounds”, I wanted to learn more about the interaction between our real and digital worlds, as well as how to work with programs that let you design these interactions. During the course we talked about how different people express themselves on the internet and how we humans use digital spaces either to create a digital extension of our real selves or to create a more or less fictional persona. To get a better understanding of this concept, we used VR-Chat, an online virtual reality platform.

It is interesting to see how people use these digital social spaces in such varied ways. The internet, and the anonymity that comes with it, gives us the freedom to behave differently from our real-world selves. Still, not everyone uses that anonymity to become someone else or to act differently from how they would in the real world. While I was using VR-Chat during the course, it was pointed out to me that I was very polite when talking to other users. I was not able to overcome my shyness, even in an anonymous digital space. I realized that I behaved as I normally would in the real world, because that is how I was taught to act around strangers. I acted like this even though these “rules” are not regarded as that important in a digital space.

Based on the insights I gathered over the course and the programs we were introduced to, I made a digital representation of myself. As my project for this course, I created MYSELF as a playable avatar for VR-Chat, so that I can not only behave like me but also look like me in the digital world. But MYSELF is also available for everyone to use. On the internet it is something of a no-go to impersonate someone else, especially without permission. With this project I give people permission to impersonate ME on VR-Chat: you are allowed to use my avatar and become Ulrike Katzschmann.

Work process:

To create myself as an avatar for VR-Chat, I used Trnio, MakeHuman, MeshLab, Blender and Unity. With these programs I made a 3D scan of my head, connected it to a body and uploaded the result to VR-Chat. The character I created is fairly unusual for VR-Chat: it is a 3D scan of an unknown real-world person, whereas most VR-Chat users choose avatars of fictional cartoon, video game or anime characters, or of completely made-up ones. I made it using free software that was introduced to me during the course. These programs make it possible to create good-looking 3D scans without any real prior knowledge. Apart from MakeHuman and Unity, I had never worked with any of them before.

Before even starting to create an avatar, we first made VR-Chat accounts during the course. We had to spend about 7 hours inside the game before we were able to create content for it.

After completing that step, a fellow student who also took the course and I used the phone app Trnio to photograph each other’s heads. From these photos, the app generated a 3D scan of our upper bodies as an OBJ file.
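An OBJ file like the one Trnio produced is plain text: lines starting with `v` list vertex positions, and lines starting with `f` list faces as 1-based indices into that vertex list. The minimal Python sketch below reads only those two record types, just to show the structure of the format; it ignores texture coordinates, normals and material references, which a textured scan typically also contains. The `Mesh` class and `parse_obj` function are hypothetical helpers, not part of any of the tools mentioned here.

```python
from dataclasses import dataclass, field


@dataclass
class Mesh:
    vertices: list[tuple[float, float, float]] = field(default_factory=list)
    faces: list[tuple[int, ...]] = field(default_factory=list)  # stored as 0-based indices


def parse_obj(text: str) -> Mesh:
    """Read vertex ('v') and face ('f') records from OBJ text; skip everything else."""
    mesh = Mesh()
    for line in text.splitlines():
        parts = line.split()
        if not parts:
            continue
        if parts[0] == "v":
            x, y, z = (float(p) for p in parts[1:4])
            mesh.vertices.append((x, y, z))
        elif parts[0] == "f":
            # A face corner may look like "3", "3/1" or "3/1/2"; the first number is the vertex index.
            # OBJ indices are 1-based (negative, relative indices are not handled in this sketch).
            mesh.faces.append(tuple(int(p.split("/")[0]) - 1 for p in parts[1:]))
    return mesh


sample = """\
# a single triangle
v 0.0 0.0 0.0
v 1.0 0.0 0.0
v 0.0 1.0 0.0
vt 0.0 0.0
f 1/1 2/1 3/1
"""
mesh = parse_obj(sample)
print(len(mesh.vertices), mesh.faces)  # 3 [(0, 1, 2)]
```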

We then used the 3D modelling software MakeHuman to generate a human body that we could attach our head scans to. The MakeHuman model was also exported as an OBJ file.

The next step was to use MeshLab to edit both models. I trimmed off parts of my 3D head scan that were not needed, like the chest and the shoulders. I also cut off the head of the MakeHuman character so that the scanned head could be attached more easily. After trimming the models, I rotated my scanned head into the right position and adjusted its size. Finally, I trimmed off some more unnecessary parts and smoothed out rough edges.
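MeshLab performs these edits interactively. As a rough illustration of what trimming and repositioning mean for the underlying mesh data, here is a Python sketch that deletes everything below a chosen cut height (standing in for the chest and shoulders) and then rotates, scales and moves the remaining vertices. The y-up axis convention, the flat horizontal cut and the function names `trim_below` and `rotate_scale_translate` are assumptions for the example, not MeshLab’s own filters.

```python
import math

Vertex = tuple[float, float, float]


def trim_below(vertices: list[Vertex], faces: list[tuple[int, ...]], cut_y: float):
    """Drop every vertex with y < cut_y and every face that used one of them.

    Returns (vertices, faces) with face indices remapped to the kept vertices.
    """
    remap: dict[int, int] = {}  # old vertex index -> new vertex index
    kept: list[Vertex] = []
    for old_index, v in enumerate(vertices):
        if v[1] >= cut_y:
            remap[old_index] = len(kept)
            kept.append(v)
    new_faces = [
        tuple(remap[i] for i in face)
        for face in faces
        if all(i in remap for i in face)  # keep a face only if all its corners survived
    ]
    return kept, new_faces


def rotate_scale_translate(vertices: list[Vertex], angle_deg: float, scale: float,
                           offset: Vertex) -> list[Vertex]:
    """Rotate about the vertical (y) axis, scale uniformly, then move by offset."""
    a = math.radians(angle_deg)
    cos_a, sin_a = math.cos(a), math.sin(a)
    ox, oy, oz = offset
    result = []
    for x, y, z in vertices:
        rx = cos_a * x + sin_a * z  # standard rotation matrix about the y axis
        rz = -sin_a * x + cos_a * z
        result.append((rx * scale + ox, y * scale + oy, rz * scale + oz))
    return result


# Toy "scan": vertex 0 lies below the cut (think of it as part of the chest).
scan_vertices = [(0.0, 0.0, 0.0), (1.0, 2.0, 0.0), (0.0, 2.0, 1.0), (1.0, 3.0, 1.0)]
scan_faces = [(0, 1, 2), (1, 2, 3)]
head_vertices, head_faces = trim_below(scan_vertices, scan_faces, cut_y=1.0)
placed = rotate_scale_translate(head_vertices, angle_deg=90, scale=0.5, offset=(0.0, 1.5, 0.0))
```

Trimming removes whole faces rather than cutting them along the plane, which leaves a jagged edge; that is one reason a smoothing pass like the one described above is useful afterwards.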

With the help of Mr. Brinkmann, the professor who taught the course, we were finally able to connect the 3D head scan to the MakeHuman body in Blender.
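The text does not say which Blender tools were used to connect the head and the body. Conceptually, joining two meshes into one object means appending the second mesh’s vertices to the first and shifting the second mesh’s face indices by the number of vertices that came before them; closing the seam at the neck is a separate modelling step not covered here. A minimal Python sketch with a hypothetical `merge_meshes` helper, using 0-based indices:

```python
def merge_meshes(body_vertices, body_faces, head_vertices, head_faces):
    """Combine two indexed meshes into one (0-based face indices).

    The head's face indices are shifted by the number of body vertices so that
    they still point at the head's own vertices after concatenation.
    """
    offset = len(body_vertices)
    vertices = list(body_vertices) + list(head_vertices)
    faces = list(body_faces) + [tuple(i + offset for i in face) for face in head_faces]
    return vertices, faces


body_v = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0)]
body_f = [(0, 1, 2)]
head_v = [(0.0, 1.5, 0.0), (0.5, 1.5, 0.0), (0.0, 2.0, 0.0)]
head_f = [(0, 1, 2)]
verts, faces = merge_meshes(body_v, body_f, head_v, head_f)
print(faces)  # [(0, 1, 2), (3, 4, 5)]
```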

Also using Blender, I rigged my character and exported the model as an FBX file. Avatars for VR-Chat need a specific bone structure so that they can be imported properly; without a rig, important features and animations would not work.
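VR-Chat avatars go through Unity’s humanoid rig system, which maps the bones of a rig to a fixed set of body parts. Assuming Unity’s usual list of 15 required humanoid bones (hips, spine, head, and the upper arm, lower arm, hand, upper leg, lower leg and foot on each side), the Python sketch below checks a bone mapping against that list before export. The list should be confirmed against the Unity manual for the version in use; `missing_bones` is a hypothetical helper and the rig bone names in the example are made up.

```python
# Assumed list of Unity's mandatory humanoid bones; verify against the Unity manual.
REQUIRED_HUMANOID_BONES = (
    "Hips", "Spine", "Head",
    "LeftUpperArm", "LeftLowerArm", "LeftHand",
    "RightUpperArm", "RightLowerArm", "RightHand",
    "LeftUpperLeg", "LeftLowerLeg", "LeftFoot",
    "RightUpperLeg", "RightLowerLeg", "RightFoot",
)


def missing_bones(mapping: dict[str, str], rig_bones: set[str]) -> list[str]:
    """Return required humanoid slots that are unmapped or point at a bone not in the rig."""
    return [slot for slot in REQUIRED_HUMANOID_BONES if mapping.get(slot) not in rig_bones]


# Hypothetical rig that is missing its right foot bone.
rig = {
    "hips", "spine", "head",
    "upper_arm.L", "forearm.L", "hand.L",
    "upper_arm.R", "forearm.R", "hand.R",
    "thigh.L", "shin.L", "foot.L",
    "thigh.R", "shin.R",
}
mapping = dict(zip(REQUIRED_HUMANOID_BONES, [
    "hips", "spine", "head",
    "upper_arm.L", "forearm.L", "hand.L",
    "upper_arm.R", "forearm.R", "hand.R",
    "thigh.L", "shin.L", "foot.L",
    "thigh.R", "shin.R", "foot.R",
]))
print(missing_bones(mapping, rig))  # ['RightFoot']
```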

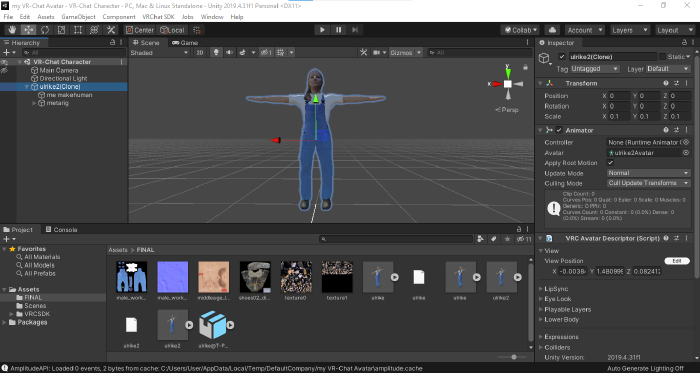

The final stage was to upload my avatar to VR-Chat with Unity version 2019.4.31f1. To do that, I needed to download VR-Chat’s SDK3-Avatars add-on for Unity.

I created a new Unity project and imported the SDK. After setting up the project, I also imported my avatar into the project assets. I checked that the rig was working correctly and added my model to the scene. Then I applied the right textures to the model and added the VRC Avatar Descriptor. For my character, I only used the descriptor to set the view position, i.e. the point from which the player’s camera looks out when using the avatar.

As the last step before publishing, I positioned my avatar in front of the main camera in the scene. The camera’s view is then used as the display picture for the avatar.
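How far the camera has to be from the avatar for the whole figure to fit into the display picture follows from basic trigonometry: an object of height h exactly fills a vertical field of view θ at distance d = (h / 2) / tan(θ / 2). The Python sketch below applies that formula with a small margin. It assumes the avatar stands at the origin facing +z, and a vertical field of view of 60 degrees (the default of a Unity perspective camera); the function names are hypothetical, and this is only one way to choose the distance, since the placement described above was done by hand.

```python
import math


def camera_distance(subject_height: float, vertical_fov_deg: float, margin: float = 1.1) -> float:
    """Distance at which an object of subject_height fits the camera's vertical field of view.

    margin > 1 moves the camera slightly further back, leaving space above and below.
    """
    half_fov = math.radians(vertical_fov_deg) / 2
    return margin * (subject_height / 2) / math.tan(half_fov)


def camera_position(avatar_height: float, vertical_fov_deg: float = 60.0):
    """Camera on the +z axis at the avatar's mid-height; it must face -z, towards the avatar."""
    d = camera_distance(avatar_height, vertical_fov_deg)
    return (0.0, avatar_height / 2, d)


pos = camera_position(1.7)  # a 1.7 m tall avatar seen by a 60-degree camera
```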

Finally, using the VR-Chat SDK control panel, I built and published my new avatar to VR-Chat.