FanSymphony

Last summer was really warm, and I spent many working hours next to my fan, so I decided to make the fan the main object of my project.

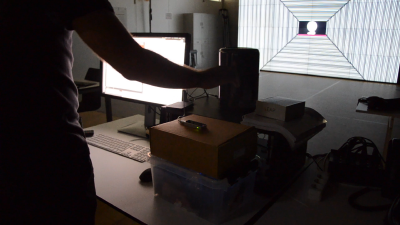

The idea behind this project is really simple, and it is more of an excuse to work with Leap Motion, Max/MSP and openFrameworks together using DBL, specifically its screens and surround system.

I recorded the fan with a Zoom recorder from 4 different angles and created a 3D object of it. Then, in Ableton, I edited the recordings a little and created 10 different tracks: 8 with different EQ filters and BPM, plus 2 tracks only for reverb and delay.

As soon as I had finished editing the sounds, I created a patch in Max/MSP for the surround system of DBL, assigning each of the 8 tracks to a specific speaker. In the same patch I added some EQ filters and gain sliders for later live manipulation of the sound.

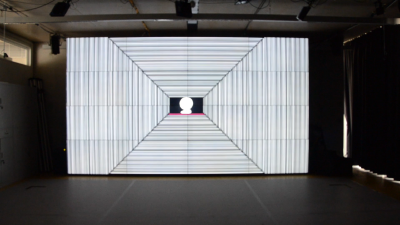

In openFrameworks, I loaded the fan in my code and drew only its white wireframe on a black background. I also built a virtual camera for the live process and drew a lot of lines in a loop to give the illusion of perspective in a 2D space.
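One common way to get this kind of perspective illusion with plain 2D lines is to let them all converge on a single vanishing point. The sketch below is not the original project code, only an illustration of the idea: the names `Line2D` and `perspectiveLines`, the choice of the screen center as vanishing point, and the even spacing along the bottom edge are all assumptions.

```cpp
#include <cassert>
#include <vector>

// A 2D line segment from (x1, y1) to (x2, y2). Hypothetical helper type.
struct Line2D {
    float x1, y1, x2, y2;
};

// Build `count` lines that start at an assumed vanishing point (the center
// of the screen) and end at evenly spaced points along the bottom edge.
std::vector<Line2D> perspectiveLines(int count, float width, float height) {
    assert(count >= 2);  // at least the leftmost and rightmost lines

    const float vanishX = width * 0.5f;
    const float vanishY = height * 0.5f;

    std::vector<Line2D> lines;
    lines.reserve(count);
    for (int i = 0; i < count; ++i) {
        // t runs from 0 (left edge) to 1 (right edge)
        float t = static_cast<float>(i) / static_cast<float>(count - 1);
        lines.push_back({vanishX, vanishY, t * width, height});
    }
    return lines;
}
```

In an openFrameworks `draw()` function, each of these segments could then be passed to `ofDrawLine(x1, y1, x2, y2)`.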

When I had finished the main patch and the main code, I connected everything to my Leap Motion controller so that I could control it using OSC data. I made a sub-patch in Max/MSP that I called distribution. This patch first receives all the data from the Leap Motion, scales it, and then sends it via different internal ports to the main Max/MSP patch and the openFrameworks code.
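The scaling step in the distribution patch is essentially a linear range mapping. Here is a minimal C++ sketch of that idea (the real work happened inside Max/MSP, so this is only an equivalent illustration). The function name `scaleClamped` and the example range of -200 to 200 for the hand position are assumptions, not values from the patch.

```cpp
#include <algorithm>
#include <cassert>

// Linearly rescale `value` from [inMin, inMax] to [outMin, outMax].
// The input is clamped first, so readings outside the expected range
// cannot push the output past its limits.
float scaleClamped(float value, float inMin, float inMax,
                   float outMin, float outMax) {
    assert(inMin < inMax);  // avoid division by zero and reversed ranges

    float clamped = std::max(inMin, std::min(value, inMax));
    float t = (clamped - inMin) / (inMax - inMin);  // 0..1 inside the input range
    return outMin + t * (outMax - outMin);
}
```

For example, with an assumed hand range of -200 to 200, `scaleClamped(0.0f, -200.0f, 200.0f, 0.0f, 1.0f)` gives 0.5, a normalized value that both the sound patch and the visuals can then rescale to their own ranges.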

In Max/MSP, moving my hand along the X axis over the Leap Motion changed the frequencies of the sounds, and moving it along the Z axis changed the gain, so I could have sonically the same illusion of perspective and space that I had visually.
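As a rough illustration of this mapping, the sketch below turns a normalized hand position into a filter frequency and a gain. It is not the Max/MSP patch itself: the names `SoundParams` and `handToSound`, the 100 Hz to 10 kHz range, the exponential frequency curve, and the convention that a larger z means a louder sound are all assumptions made for the example.

```cpp
#include <cassert>
#include <cmath>

// Hypothetical container for the two sound parameters driven by the hand.
struct SoundParams {
    float cutoffHz;  // filter frequency in Hz
    float gain;      // linear gain, 0 (silent) to 1 (full level)
};

// Map a normalized hand position (x and z both in 0..1) to sound parameters.
SoundParams handToSound(float x, float z) {
    assert(x >= 0.0f && x <= 1.0f);
    assert(z >= 0.0f && z <= 1.0f);

    // Assumed frequency range; the exponential curve makes equal hand
    // movements sound like equal pitch steps.
    const float minHz = 100.0f;
    const float maxHz = 10000.0f;
    float cutoffHz = minHz * std::pow(maxHz / minHz, x);

    // Assumed convention: z = 0 is "far" (silent), z = 1 is "near" (full level).
    float gain = z;

    return {cutoffHz, gain};
}
```

With these assumed ranges, a hand in the middle of the X axis (`x = 0.5`) lands at roughly 1000 Hz, halfway between the two limits on a logarithmic scale.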

In openFrameworks, I used the OSC data received from the Leap Motion to control the virtual camera, so that the visuals correspond to the changes in the sound.

video: [1] password FFS