More and more sensors are embedded in public spaces to track people and enable interaction. Many of these interactive setups, and the sensors they use, are very expensive and are therefore rarely deployed. In this project we therefore explored the possibilities and limitations of a low-cost thermopile sensor.

Additionally, we developed a low-cost proof-of-concept prototype and conducted a small study to demonstrate the capabilities of the thermopile sensor.

Hardware

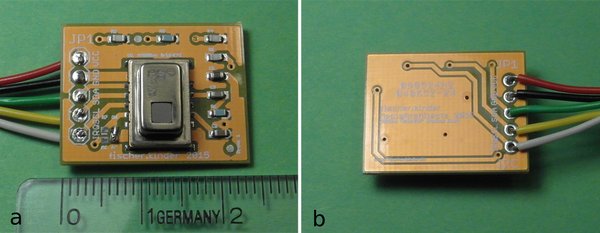

The project is based on the AMG8851 thermopile sensor, an 8x8 pixel infrared array sensor with an I2C interface and a typical frame rate of 10 fps.
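To show what one 8x8 frame looks like as raw data, here is a minimal sketch that converts the sensor's register bytes into temperatures. It assumes the register layout Panasonic documents for the Grid-EYE family (64 pixels read as a 128-byte burst starting at register 0x80, two bytes per pixel with the low byte first, 12-bit two's complement, 0.25 °C per LSB). The function names are hypothetical, and the sketch only decodes bytes; it does not perform the I2C read itself.

```python
from typing import List

# Assumed register layout (Panasonic Grid-EYE family): 64 pixels, read as a
# 128-byte burst starting at register 0x80, two bytes per pixel (low byte
# first), 12-bit two's complement, 0.25 degC per LSB.
BYTES_PER_FRAME = 128  # 64 pixels x 2 bytes
DEG_C_PER_LSB = 0.25


def decode_pixel(low: int, high: int) -> float:
    """Convert one pixel's two register bytes to degrees Celsius."""
    raw = ((high & 0x0F) << 8) | low  # keep only the 12 significant bits
    if raw & 0x800:                   # sign bit of the 12-bit value is set
        raw -= 0x1000                 # two's complement -> negative integer
    return raw * DEG_C_PER_LSB


def decode_frame(data: bytes) -> List[List[float]]:
    """Turn a 128-byte burst read into 8 rows of 8 temperatures, in register order."""
    if len(data) != BYTES_PER_FRAME:
        raise ValueError(f"expected {BYTES_PER_FRAME} bytes, got {len(data)}")
    temps = [decode_pixel(data[i], data[i + 1]) for i in range(0, BYTES_PER_FRAME, 2)]
    return [temps[row * 8:(row + 1) * 8] for row in range(8)]
```

On the microcontroller the same arithmetic would run in C after the I2C read; the Python version simply makes the conversion easy to check, e.g. `decode_pixel(0x64, 0x00)` yields 25.0 °C.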

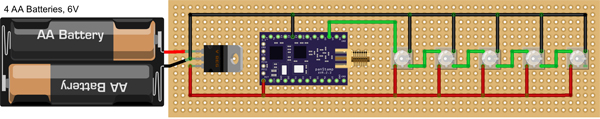

The sensor was combined with an Atmel ATmega328P microcontroller and a CC1101 transceiver to create a flexible, modular setup. This allowed us to explore the sensor independently of a fixed device configuration. For the final proof-of-concept prototype, a separate display module was therefore developed to test the sensor in a novel interaction scenario.
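As a rough back-of-the-envelope check of what the radio link has to carry, assume each frame is forwarded as the sensor's raw output (64 pixels × 2 bytes) at the typical 10 fps, ignoring packet headers and other protocol overhead:

```latex
\begin{aligned}
\text{bytes per frame} &= 64 \times 2\,\text{B} = 128\,\text{B} \\
\text{payload rate} &= 128\,\text{B} \times 10\,\text{s}^{-1} = 1280\,\text{B/s} \\
&= 1280 \times 8\,\text{bit/s} = 10\,240\,\text{bit/s} \approx 10.2\,\text{kbit/s}
\end{aligned}
```

Real packets add addressing and framing bytes on top of this; quantizing each pixel to a single byte would halve the payload if the link turned out to be a bottleneck.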

Form

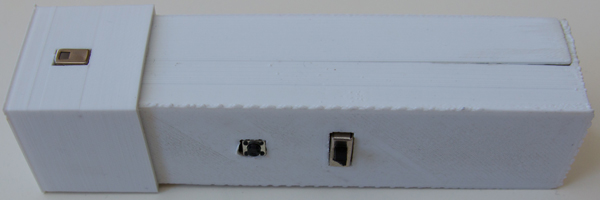

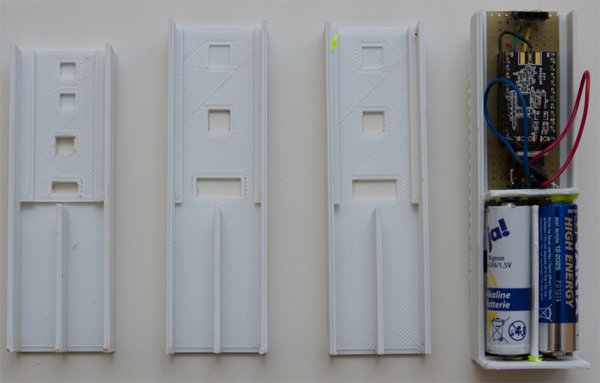

A simple case was designed to protect and power the sensor module. It also allowed us to mount the sensor easily and hide the hardware. Prior projects had shown that people behave quite differently towards exposed hardware than towards a somewhat 'finished' product.

The pictures show the iterations of the case.

Software

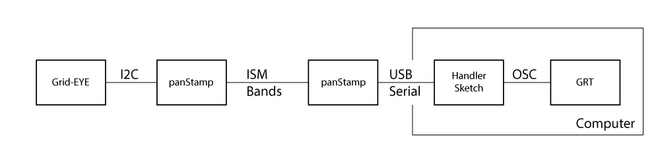

We used different buses for data communication. The sensor data is sent over an I2C bus to the PanStamp (a combination of an ATmega328P and a CC1101), which transmits it wirelessly to a modem connected to a PC. The module can be placed within about 5 to 10 meters of the receiver. The receiver modem is connected to the computer via USB.
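The PC side has to turn the bytes arriving on the virtual serial port back into frames. The text does not specify the serial format, so the following is a minimal sketch under a hypothetical format: one frame per line, 64 comma-separated temperatures. The project itself used Processing for this step; Python is used here only to illustrate the parsing logic, and `parse_frame_line` is a hypothetical name.

```python
from typing import List, Optional

FRAME_SIZE = 64  # 8 x 8 pixels


def parse_frame_line(line: str) -> Optional[List[List[float]]]:
    """Parse one line of a hypothetical serial format: 64 comma-separated temperatures.

    Returns an 8x8 grid, or None if the line is incomplete or malformed
    (e.g. a frame that was cut off or corrupted on the radio link).
    """
    parts = line.strip().split(",")
    if len(parts) != FRAME_SIZE:
        return None
    try:
        values = [float(p) for p in parts]
    except ValueError:
        return None
    return [values[r * 8:(r + 1) * 8] for r in range(8)]
```

Dropping malformed lines instead of raising keeps the pipeline running when a packet is lost. Lines could be fed in from a serial library's line-reading call, such as pyserial's `Serial.readline()` after decoding the bytes to text.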

A Processing application reads the input from the virtual serial port and forwards it via OSC to the Gesture Recognition Toolkit (GRT). This way, different gestures can be trained for different use cases.
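To make the OSC forwarding step concrete, here is a minimal sketch that encodes one flattened frame as an OSC 1.0 message with 64 float arguments and sends it over UDP, written directly against the OSC 1.0 encoding rules (null-padded strings aligned to 4 bytes, a type-tag string, big-endian 32-bit floats). The address `/Data` and port 5000 are placeholders, not values taken from the project; they must match whatever the receiving GRT setup listens on.

```python
import socket
import struct
from typing import Sequence


def _osc_string(s: str) -> bytes:
    """OSC string: ASCII bytes, null-terminated, padded with nulls to a multiple of 4."""
    b = s.encode("ascii") + b"\x00"
    return b + b"\x00" * (-len(b) % 4)


def encode_osc_floats(address: str, values: Sequence[float]) -> bytes:
    """Build an OSC 1.0 message whose arguments are all 32-bit floats."""
    type_tags = "," + "f" * len(values)
    payload = b"".join(struct.pack(">f", v) for v in values)  # big-endian float32
    return _osc_string(address) + _osc_string(type_tags) + payload


def send_frame(sock: socket.socket, frame: Sequence[float],
               host: str = "127.0.0.1", port: int = 5000,
               address: str = "/Data") -> None:
    """Send one flattened 8x8 frame (64 values) as a single OSC message over UDP.

    host, port and address are placeholders; configure them to match the receiver.
    """
    sock.sendto(encode_osc_floats(address, frame), (host, port))
```

Usage: create a socket with `socket.socket(socket.AF_INET, socket.SOCK_DGRAM)` once, then call `send_frame(sock, flat_frame)` for every incoming frame. Sending each frame as one 64-dimensional sample lets the gesture software treat the whole image as its input vector.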

Interaction Design

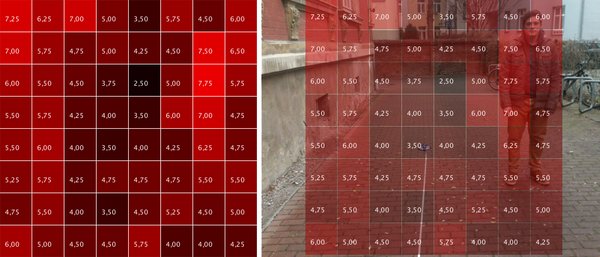

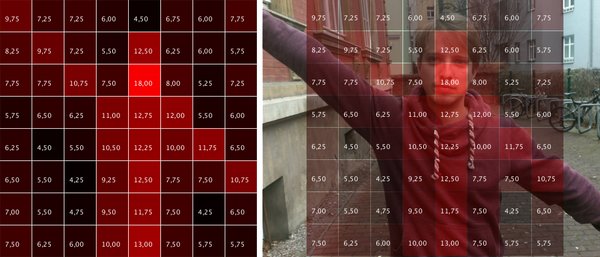

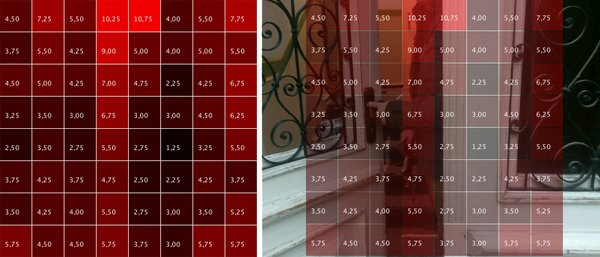

A test application was written to try out various interactions, which allowed us to explore different application contexts. The pictures show some ideas of how the sensor could be used.
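Many of these interaction ideas start from the same question: is someone in front of the sensor, and where? The text does not describe how the test software answered it, so the following is only one simple possibility, swapped in for illustration: background subtraction with a fixed threshold, followed by the centroid of the warm pixels. The threshold of 1.5 °C, the blending factor and both function names are hypothetical.

```python
from typing import List, Optional, Tuple

Grid = List[List[float]]


def warm_centroid(frame: Grid, background: Grid,
                  threshold: float = 1.5) -> Optional[Tuple[float, float]]:
    """Return the (row, col) centroid of pixels warmer than the background.

    A pixel counts as occupied if it exceeds its background value by more than
    `threshold` degrees C (an assumed, hand-tuned value). Returns None if no
    pixel qualifies, i.e. nobody is in view.
    """
    rows = cols = 0.0
    count = 0
    for r, (frame_row, bg_row) in enumerate(zip(frame, background)):
        for c, (t, bg) in enumerate(zip(frame_row, bg_row)):
            if t - bg > threshold:
                rows += r
                cols += c
                count += 1
    if count == 0:
        return None
    return rows / count, cols / count


def update_background(background: Grid, frame: Grid, alpha: float = 0.05) -> Grid:
    """Blend the current frame into the background (exponential moving average)."""
    return [[(1 - alpha) * bg + alpha * t for bg, t in zip(bg_row, f_row)]
            for bg_row, f_row in zip(background, frame)]
```

Tracking the column of the centroid over successive frames gives a rough left/right movement direction, and the row gives a rough vertical position. In practice the background would likely only be updated while `warm_centroid` returns None, so that a person standing still is not absorbed into it.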

Evaluation

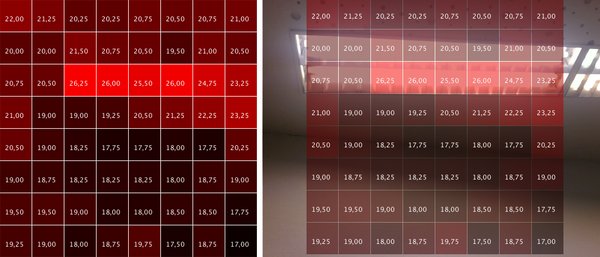

The final idea is a sport-on-the-go application that motivates people to do five squats when they pass by the sign. The application was tested at a bus stop, on a plaza, on a walkway and at a corner.
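The text does not say how squats were detected (the setup forwarded data to GRT for gesture training). As a hypothetical, simpler alternative for this particular exercise, squats could be counted from the vertical position of the warm area in successive frames, for example the row of its centroid. The sketch assumes row 0 is the top of the sensor image, so squatting moves the warm area towards larger row values; the two thresholds are illustrative guesses that would need tuning for the actual mounting height and distance.

```python
from typing import Iterable, Optional


def count_squats(centroid_rows: Iterable[Optional[float]],
                 down_row: float = 4.5, up_row: float = 3.0) -> int:
    """Count squats from a sequence of vertical positions of the warm area.

    Assumption: larger row values mean lower in the image. Two thresholds form
    a hysteresis band, so jitter around a single threshold is not counted as
    extra repetitions. None entries (no person visible) are skipped.
    """
    squats = 0
    is_down = False
    for row in centroid_rows:
        if row is None:
            continue
        if not is_down and row >= down_row:
            is_down = True   # person has gone down
        elif is_down and row <= up_row:
            is_down = False  # back up again: one full repetition
            squats += 1
    return squats
```

For example, the sequence `[2.5, 3.0, 5.0, 4.8, 2.8, 5.0, 2.0, None, 2.5]` counts as two squats, while a person hovering between the thresholds counts as none. The display could then react once the count reaches five.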

Interestingly, people actually did the exercise in public, even though one might expect the norms of public space to inhibit this behaviour.

Further links:

Wiki

Project participants:

Andreas Berst (M.Sc. Media Informatics)

Kevin Schminnes (B.Sc. Media Informatics)

Supervised by:

Prof. Dr. Eva Hornecker

Dr. Patrick Tobias Fischer